Sanskar

Keen Learner and Exp... • 5m

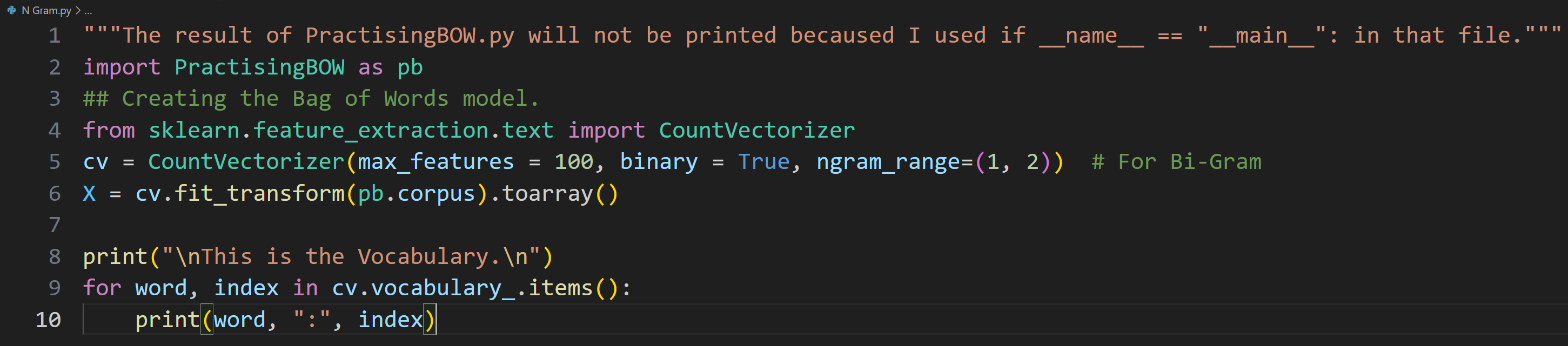

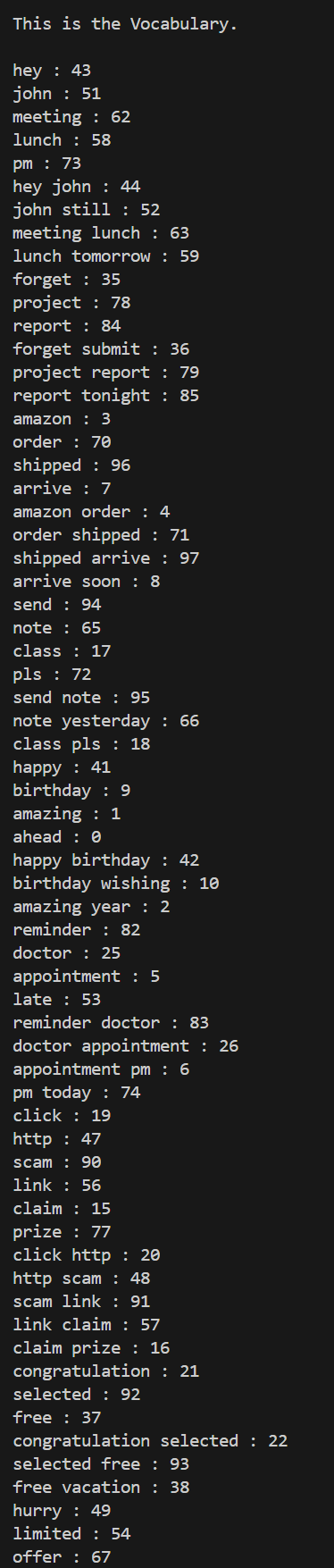

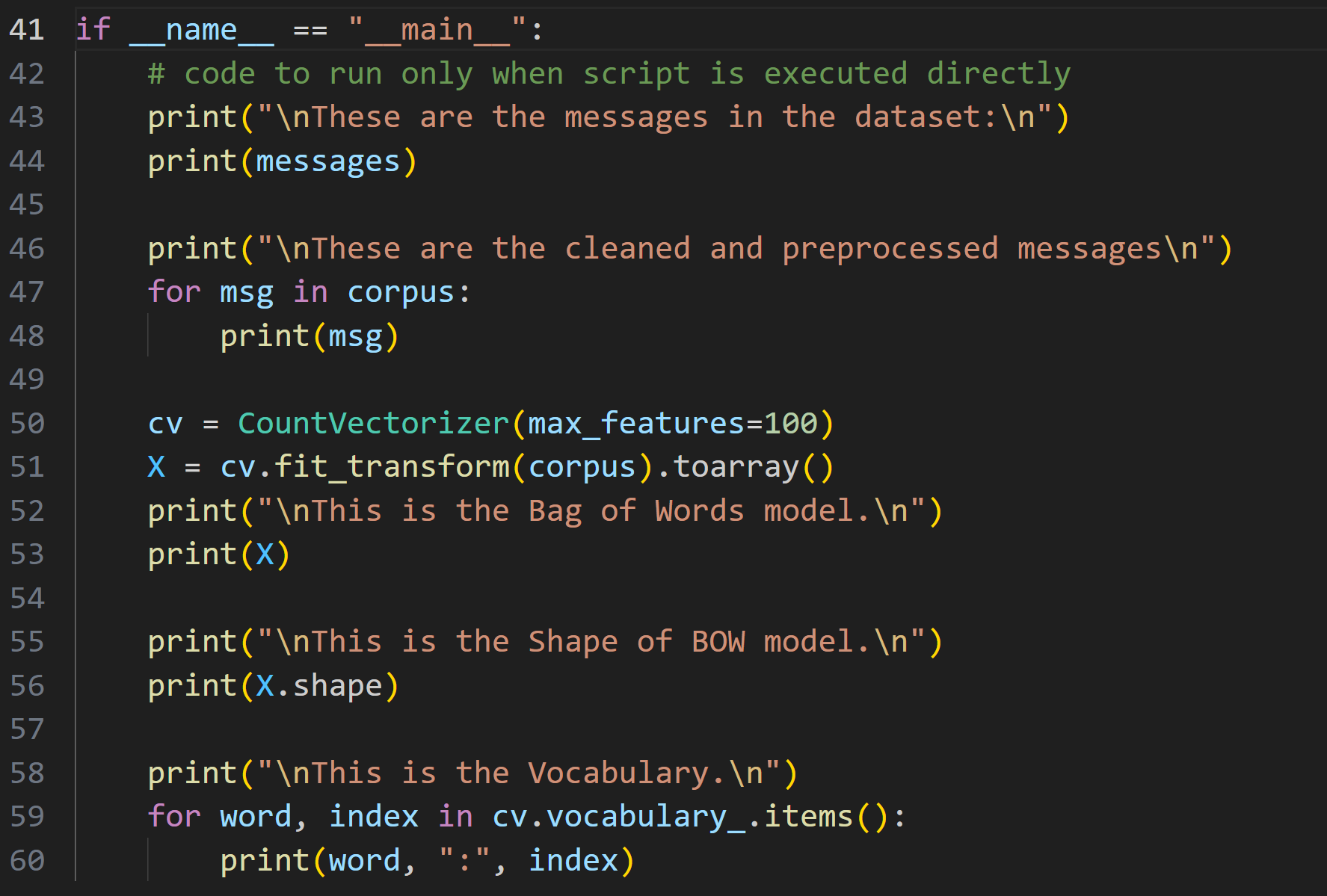

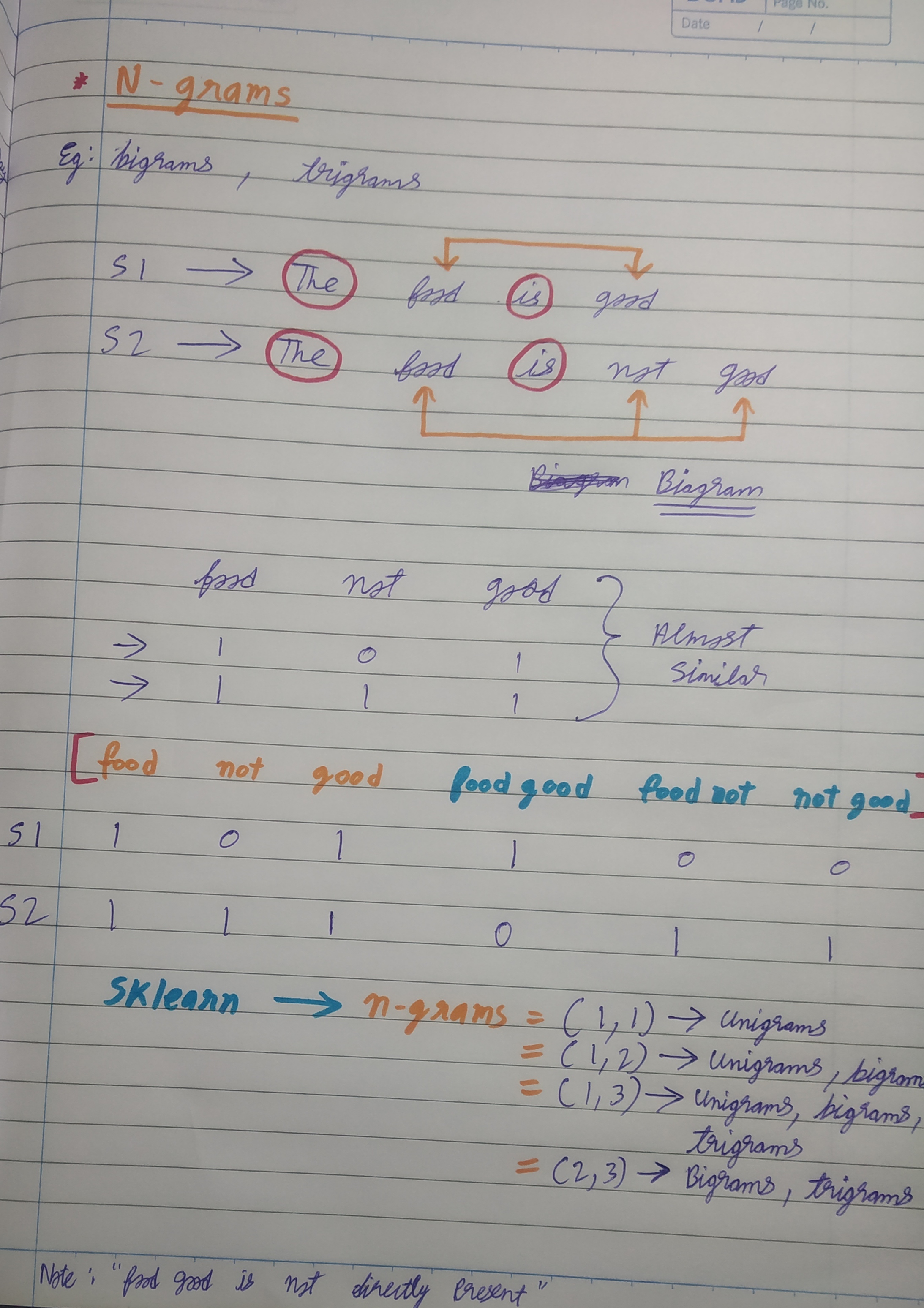

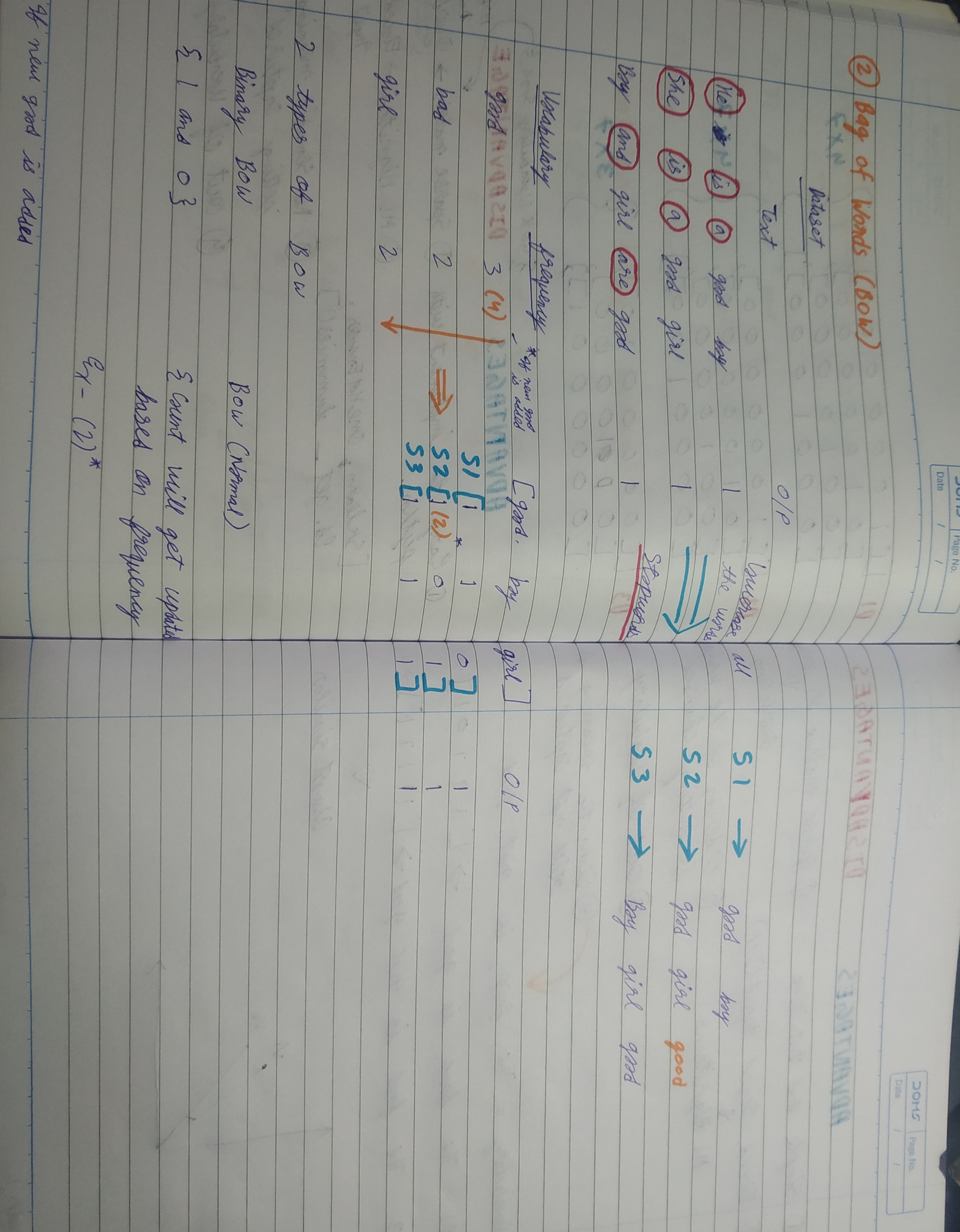

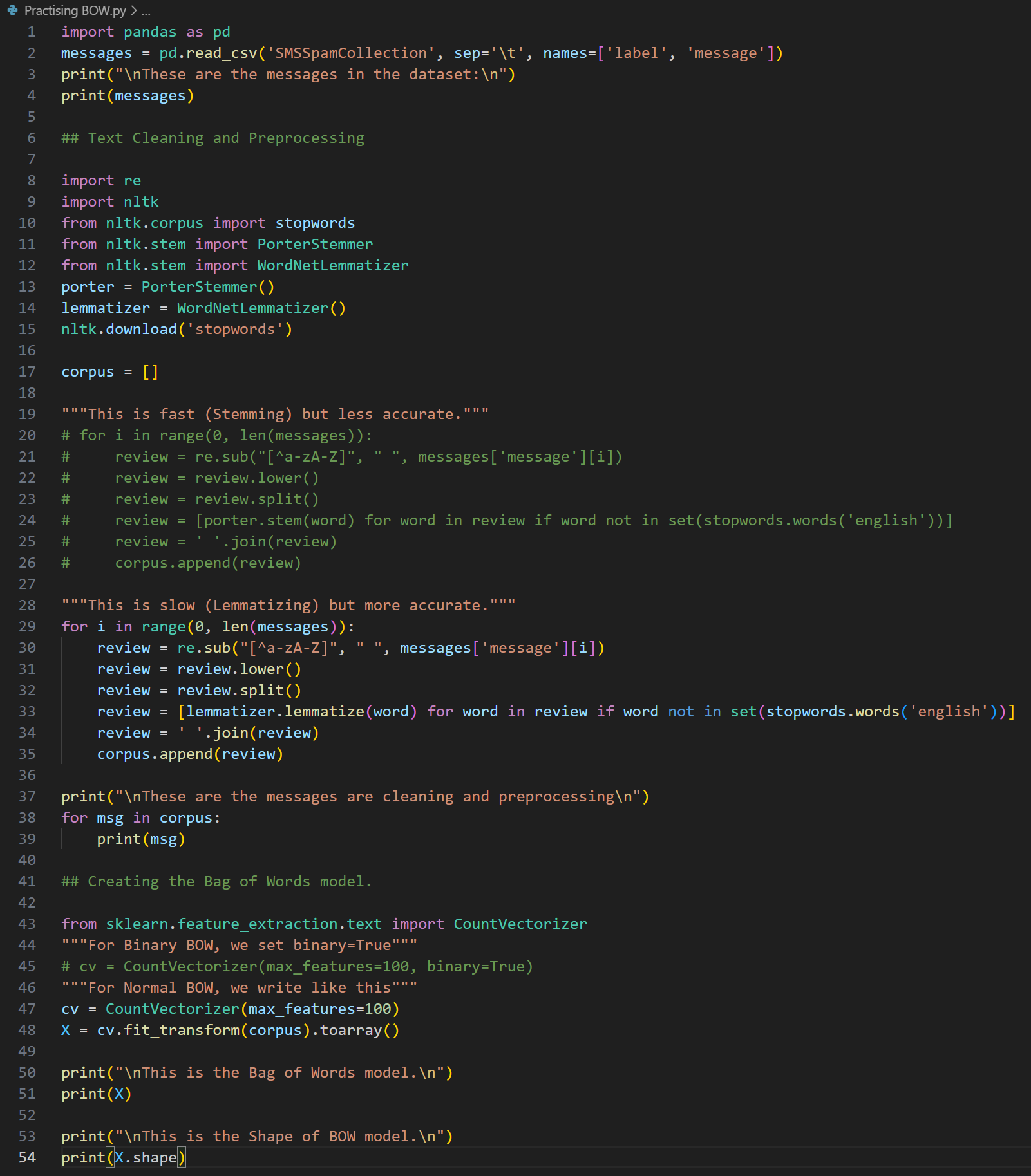

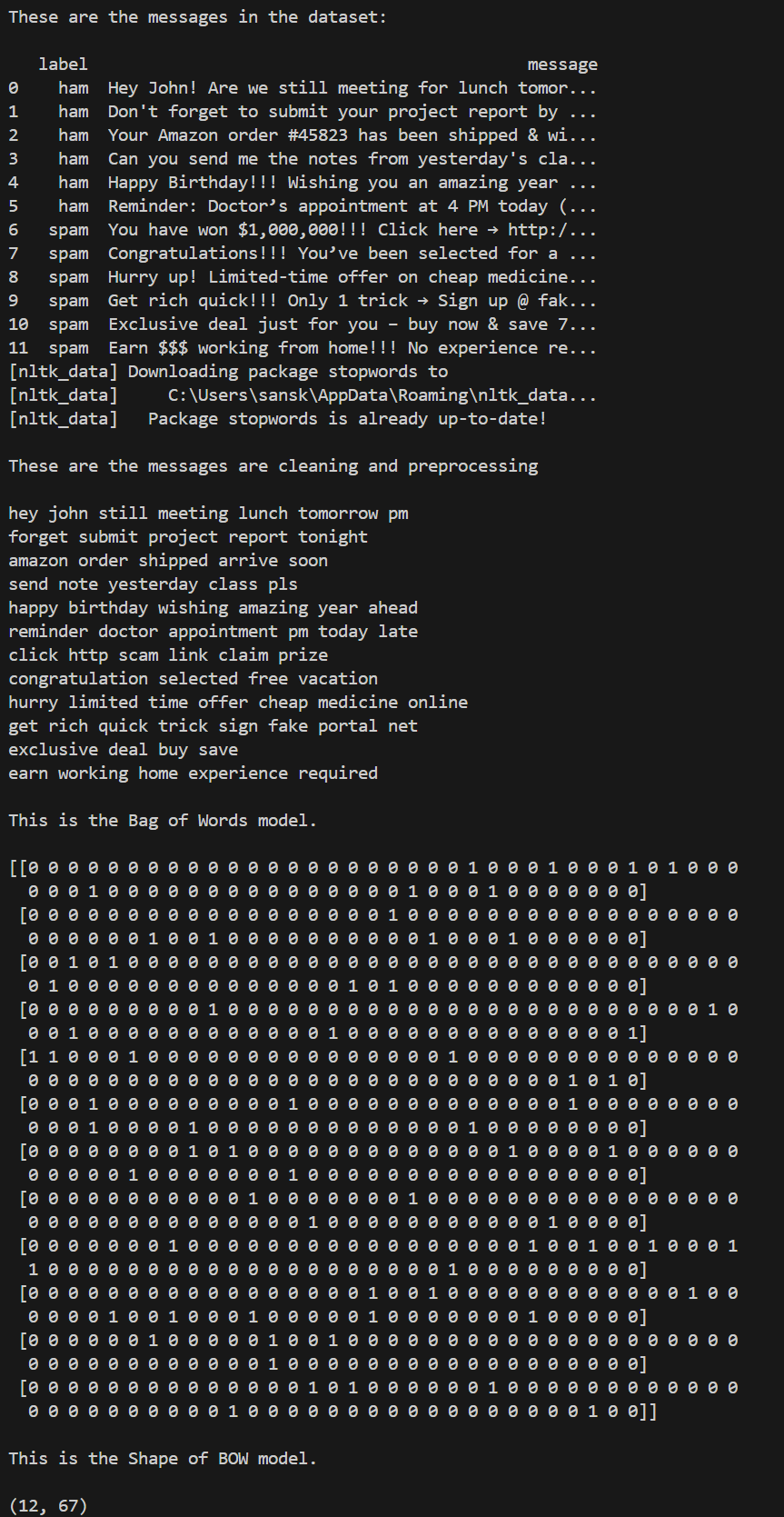

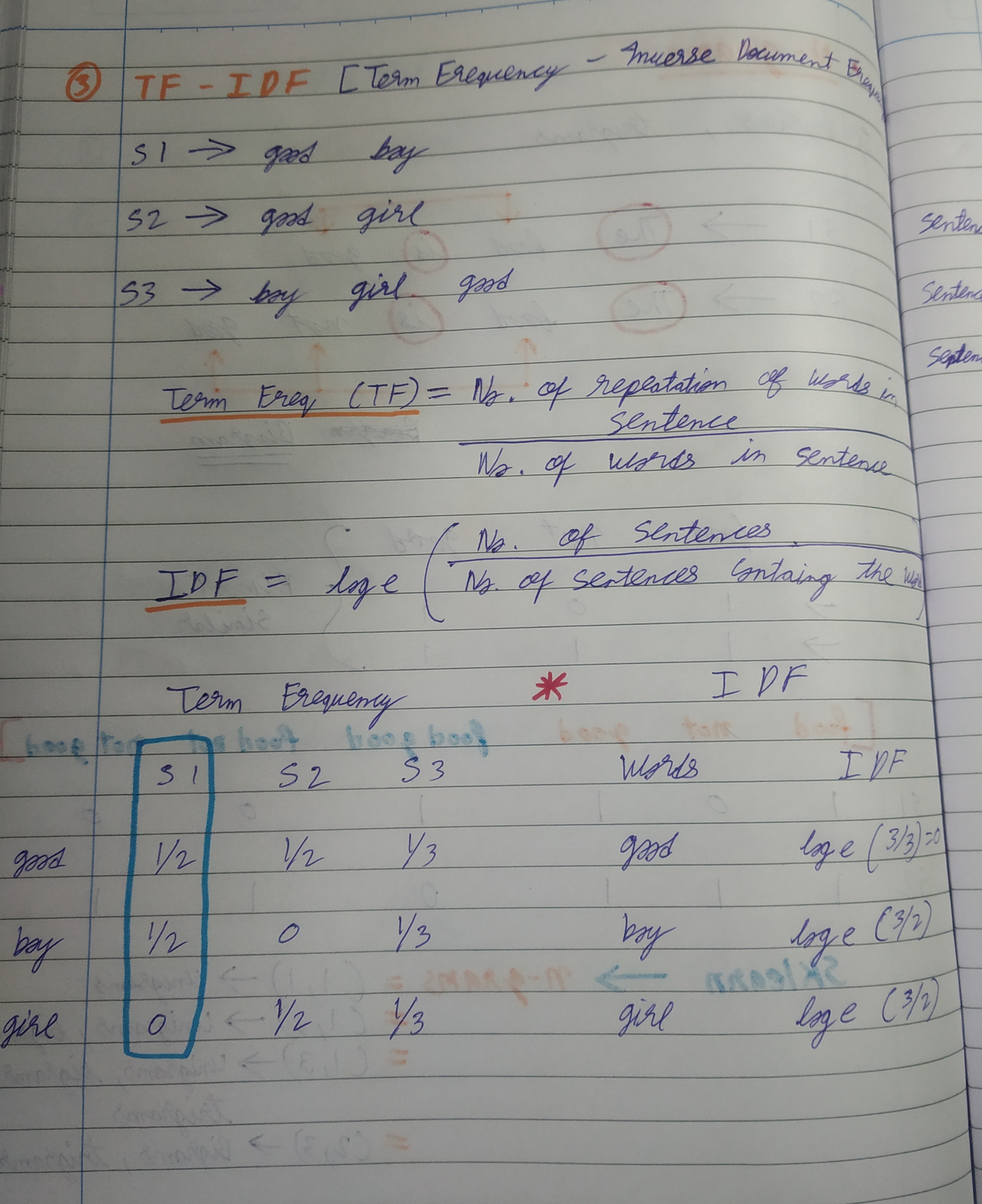

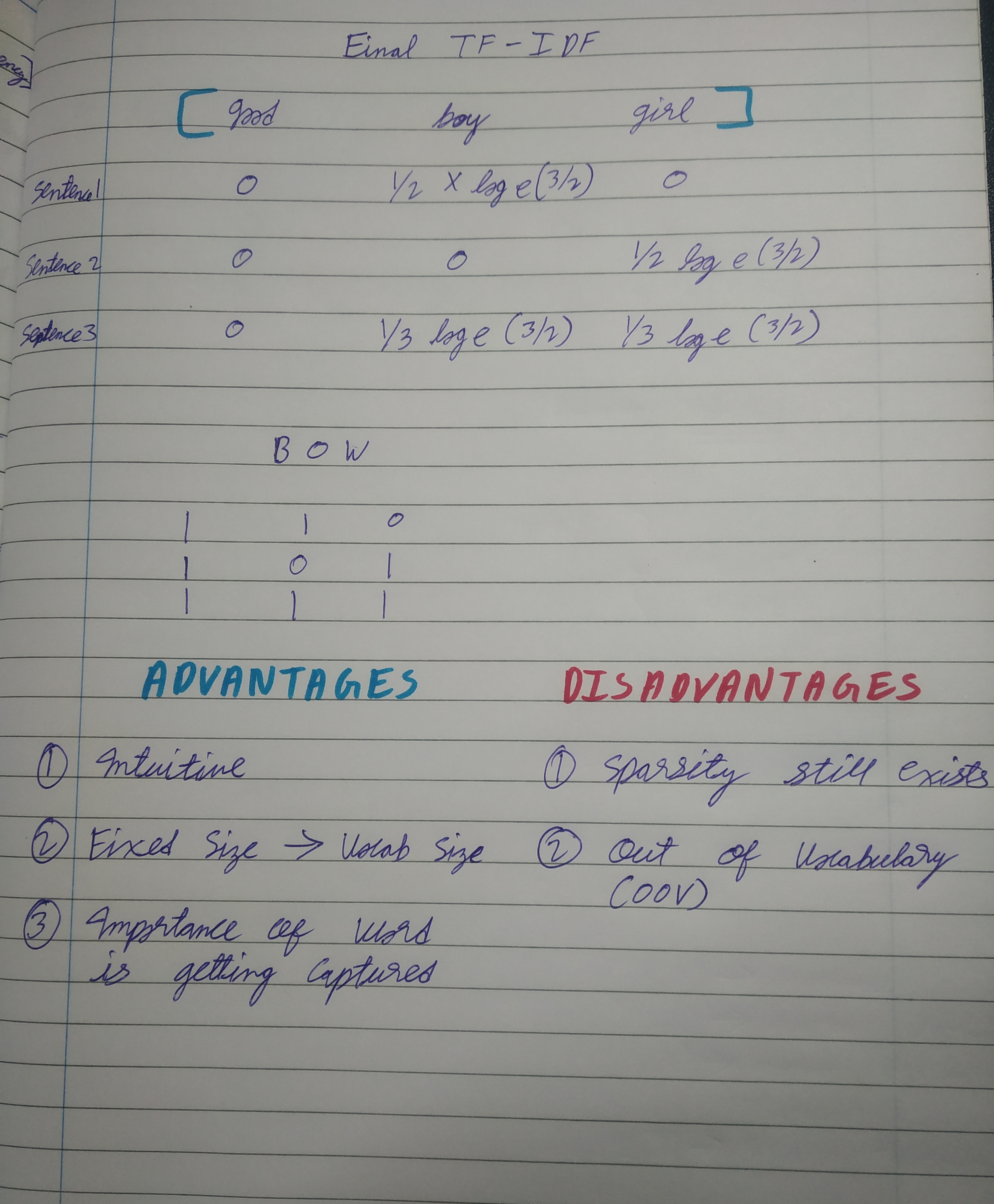

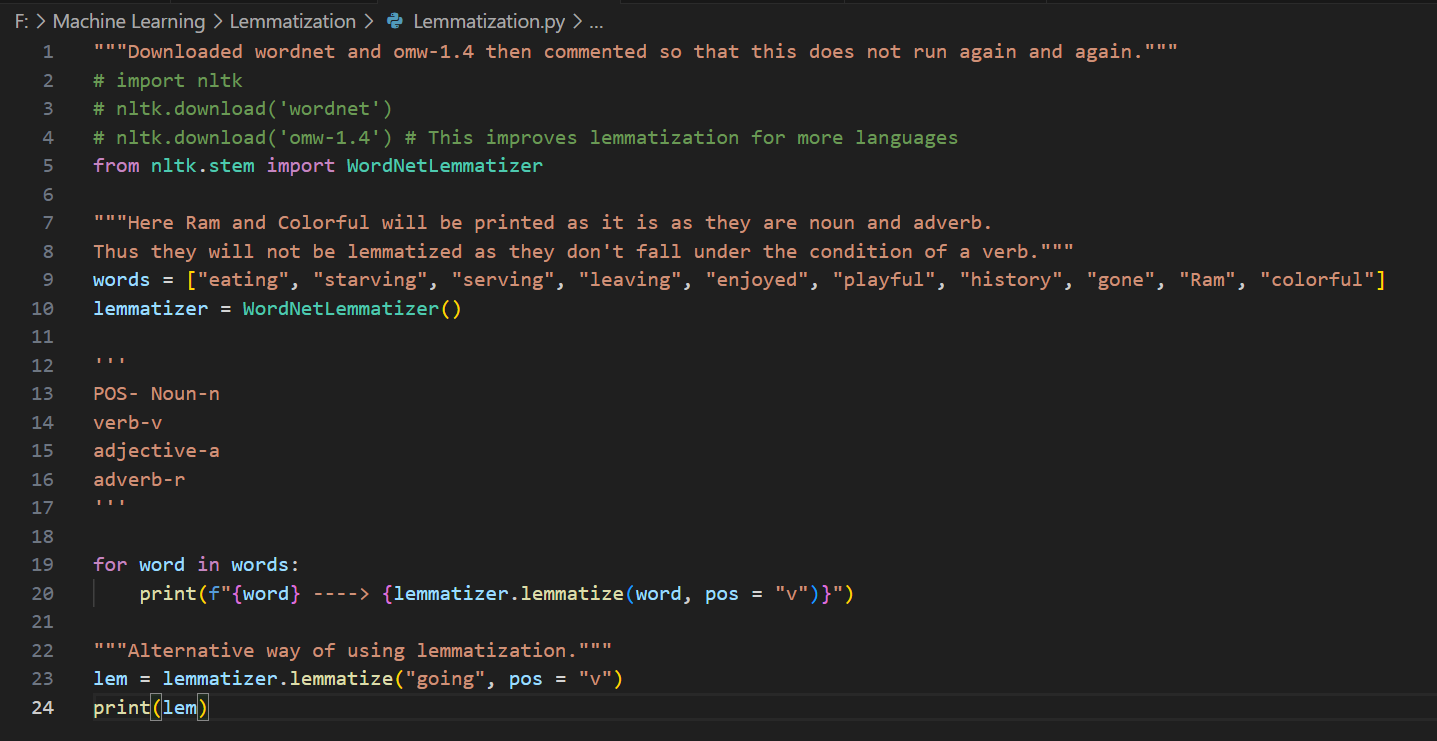

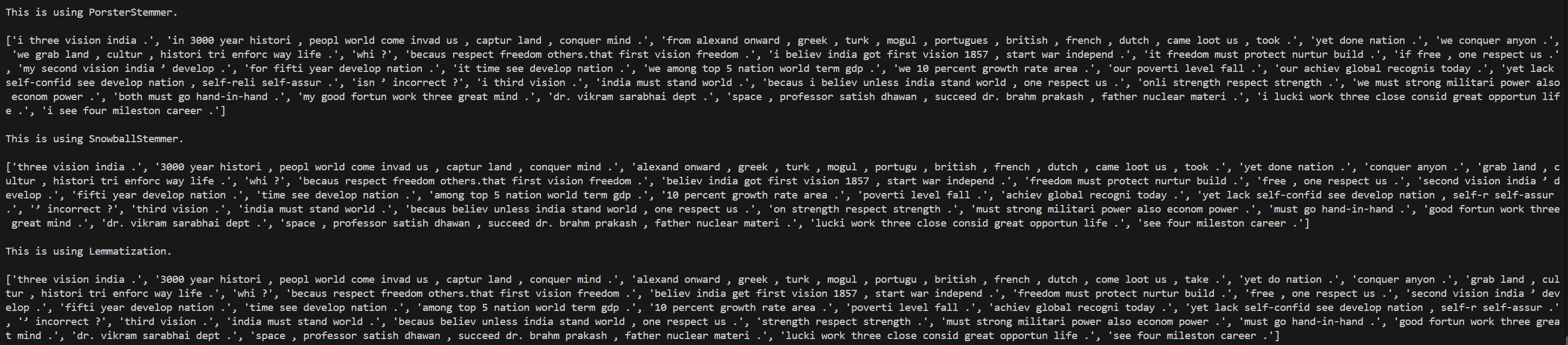

Day 10 of learning AI/ML as a beginner.

Topic: N-Grams in Bag of Words (BOW).

Yesterday I talked about Bag of Words (BOW), a handy way to convert text into vectors for machine learning. N-grams are an extension of BOW. Plain BOW only counts individual words and ignores their order, so it can see sentences with different meanings as similar. That is a big issue, because it can treat a positive sentence and a negative one as nearly the same, which should not happen.

N-grams help overcome this limitation by grouping each word with the words that follow it, which gives more accurate results. For example, in the sentence "The food is good", a bigram groups "food" and "good" together (assuming stopwords have been removed) and uses that pair as a feature in addition to the individual words. This helps the program distinguish between two different sentences and capture more of what the user is saying. You can understand this better from the notes I have attached.

I also did a practical for this. Since n-grams are part of BOW, I decided to reuse my code and imported my BOW code into the new file (I used if __name__ == "__main__": in the BOW file so that the results of the previous code did not run again in the new file). To use n-grams, you just need to add ngram_range=(1, 2) to CountVectorizer, which produces both unigrams and bigrams. You can change the range to get only bigrams, trigrams, and so on, based on your need. I then used a for loop to print all the word groups.

Here's my code, its result, and the notes I made on N-grams.
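To make the idea concrete, here is a minimal pure-Python sketch of what a (1, 2) n-gram range produces. It is not the code from the post and not how scikit-learn implements CountVectorizer; the stopword list, the function names (tokenize, ngrams, bag) and the second example sentence are all assumptions chosen for illustration.

```python
from collections import Counter

# Hypothetical, tiny stopword list for this sketch only.
# "not" is deliberately kept, since dropping it would erase the negation.
STOPWORDS = {"the", "is"}


def tokenize(sentence):
    """Lowercase, split on whitespace, and drop stopwords."""
    return [w for w in sentence.lower().split() if w not in STOPWORDS]


def ngrams(tokens, n_min=1, n_max=2):
    """Return every n-gram with n_min <= n <= n_max as a space-joined string."""
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(tokens) - n + 1):
            grams.append(" ".join(tokens[i:i + n]))
    return grams


def bag(sentence, n_min=1, n_max=2):
    """Count the n-grams of one sentence (a bag of words plus word groups)."""
    return Counter(ngrams(tokenize(sentence), n_min, n_max))


positive = bag("The food is good")
negative = bag("The food is not good")  # assumed contrasting sentence

# Print every group of words and how often each sentence contains it.
for gram in sorted(set(positive) | set(negative)):
    print(f"{gram!r}: positive={positive[gram]}, negative={negative[gram]}")
```

With single words only, the two sentences differ just by the word "not". With bigrams added, "food good" appears only in the first sentence and "not good" only in the second, which gives the model extra features to tell them apart. Calling bag(sentence, 2, 3) would give bigrams and trigrams instead.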

Replies (3)

More like this

Recommendations from Medial

Sanskar

Keen Learner and Exp... • 5m

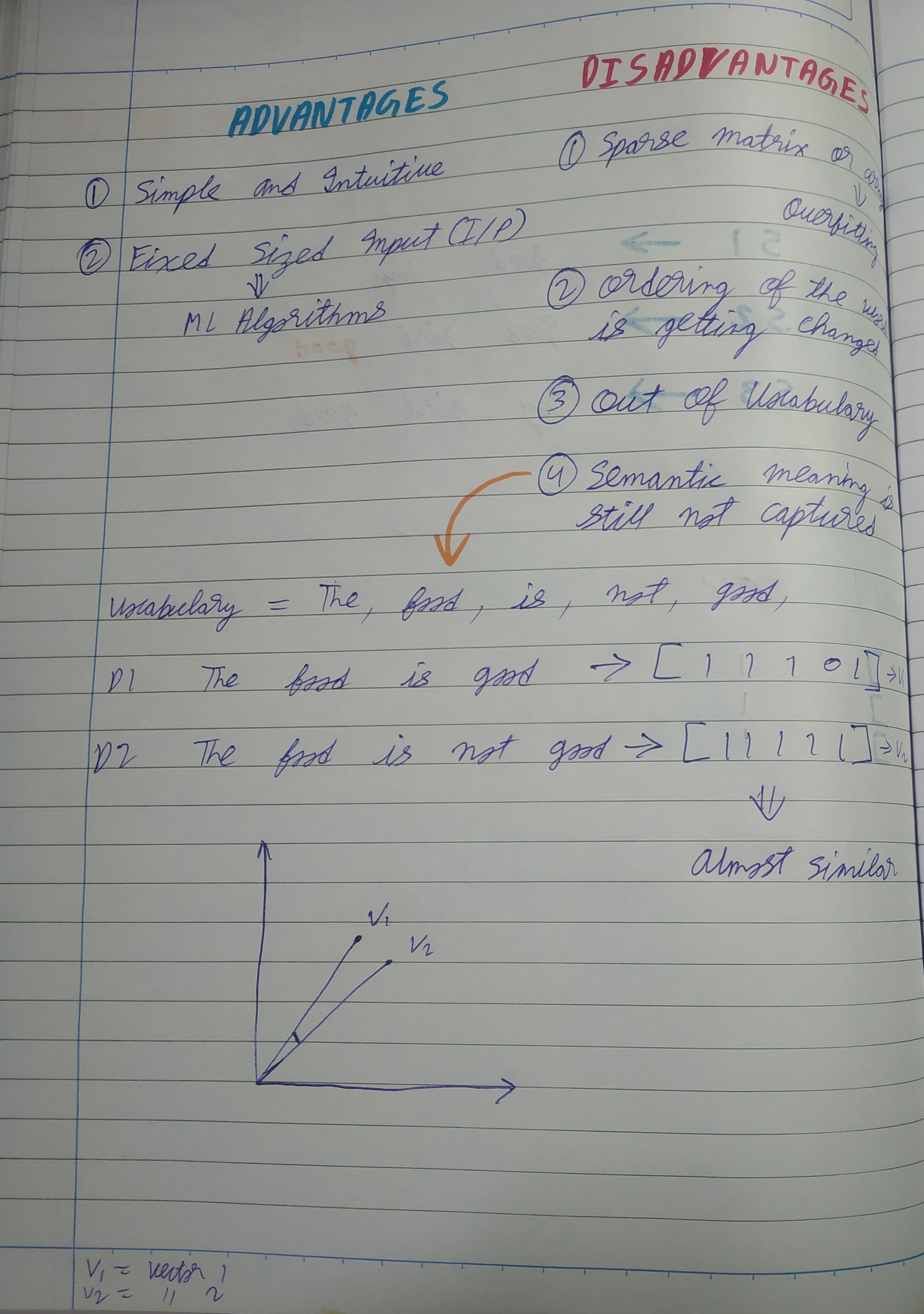

Day 8 of learning AI/ML as a beginner. Topic: Bag of Words (BOW) Yesterday I told you guys about One Hot Encoding which is one way to convert text into vector however with serious disadvantages and to cater to those disadvantages there's another on

See More

Sanskar

Keen Learner and Exp... • 5m

Day 9 of learning AI/ML as a beginner. Topic: Bag of Words practical. Yesterday I shared the theory about bag of words and now I am sharing about the practical I did I know there's still a lot to learn and I am not very much satisfied with the topi

See More
