
Sanskar

Keen Learner and Exp... • 5m

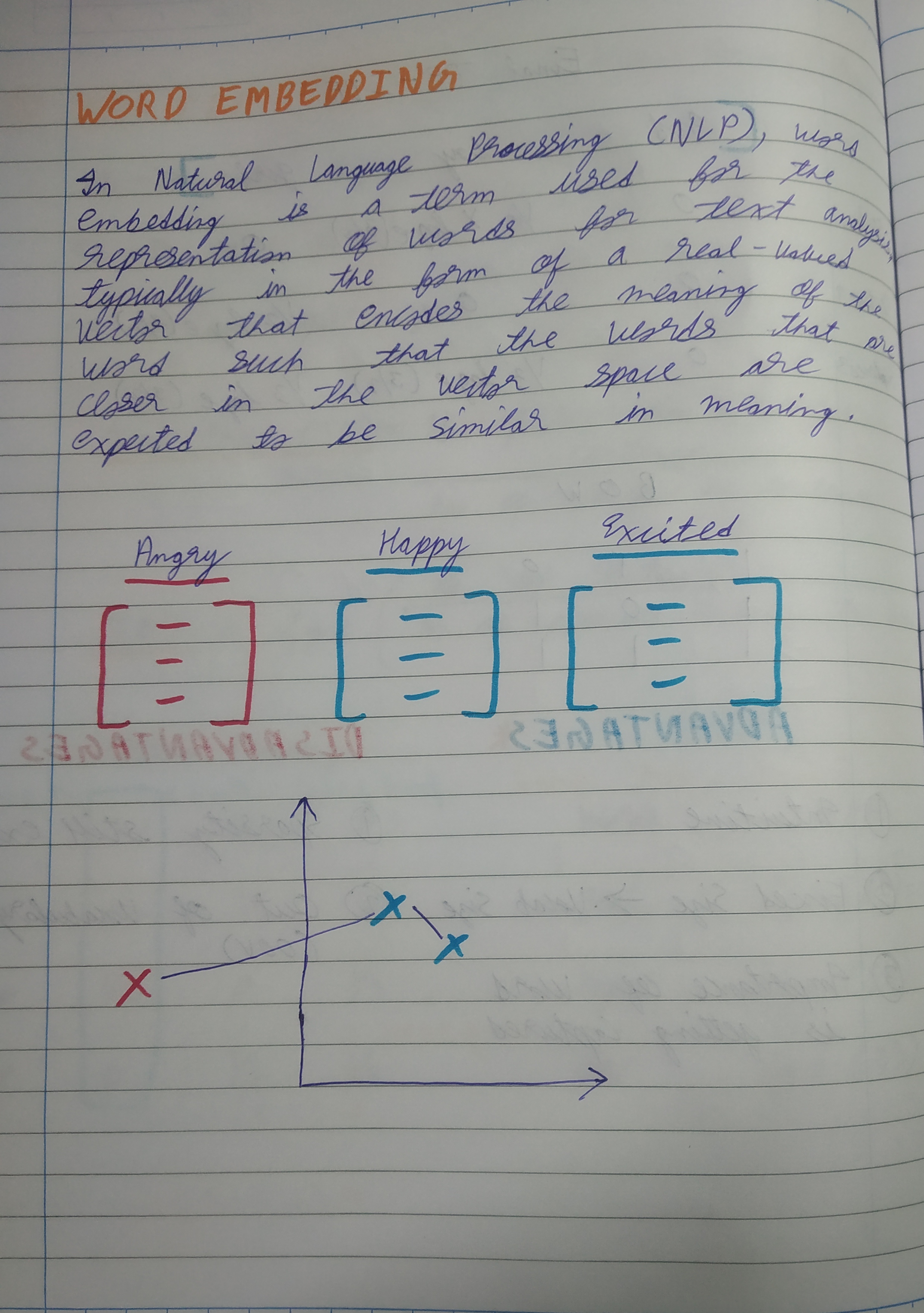

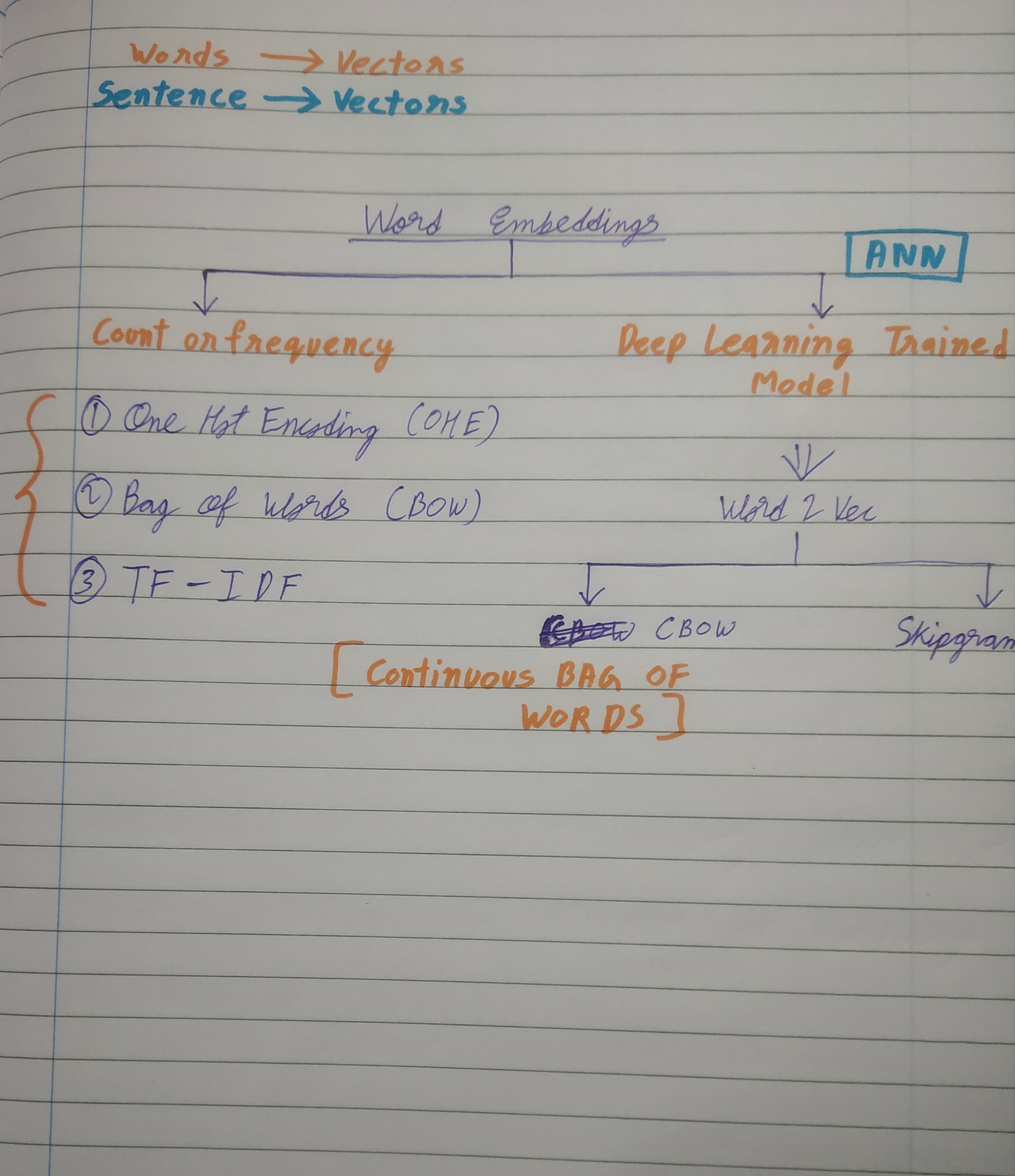

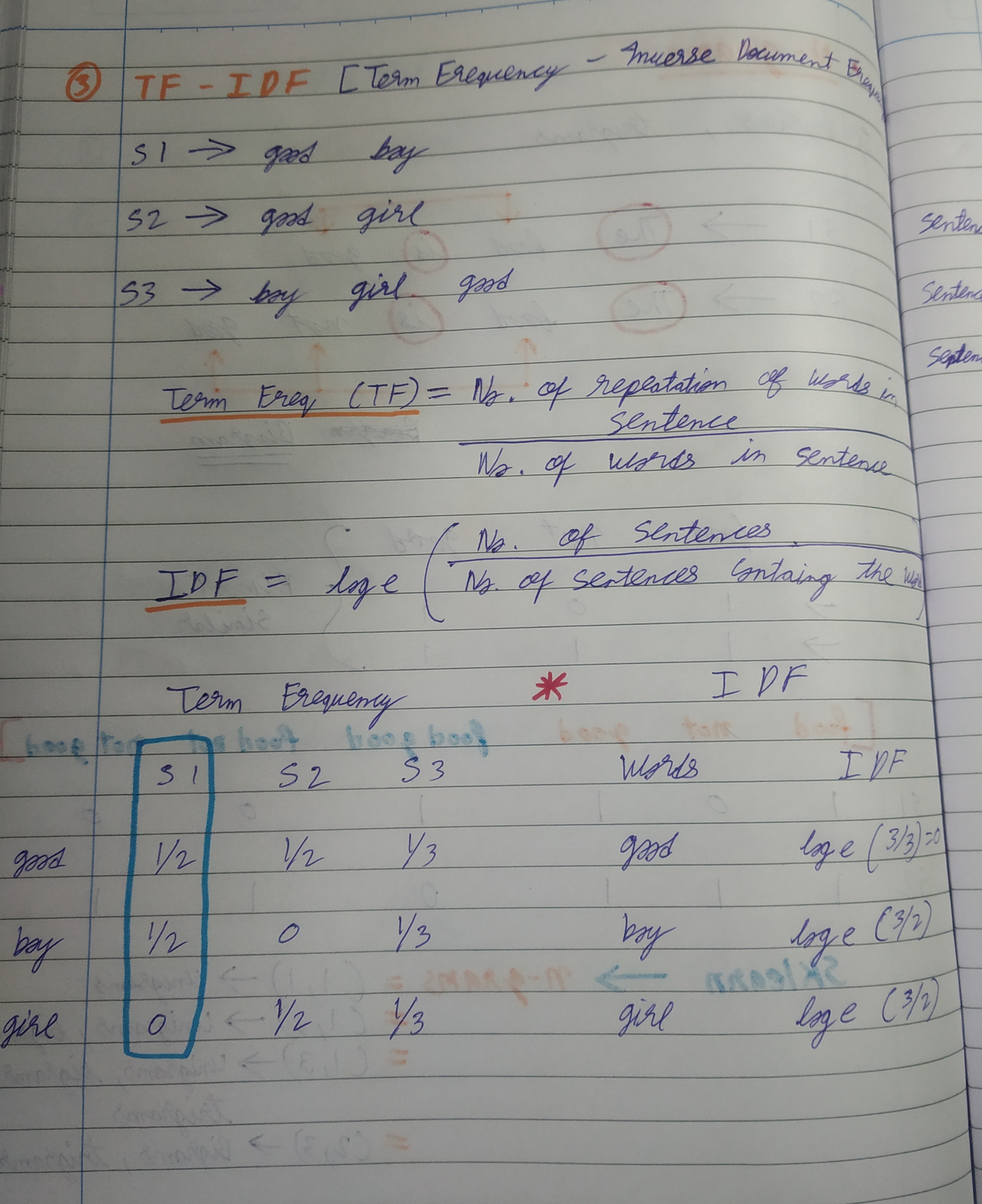

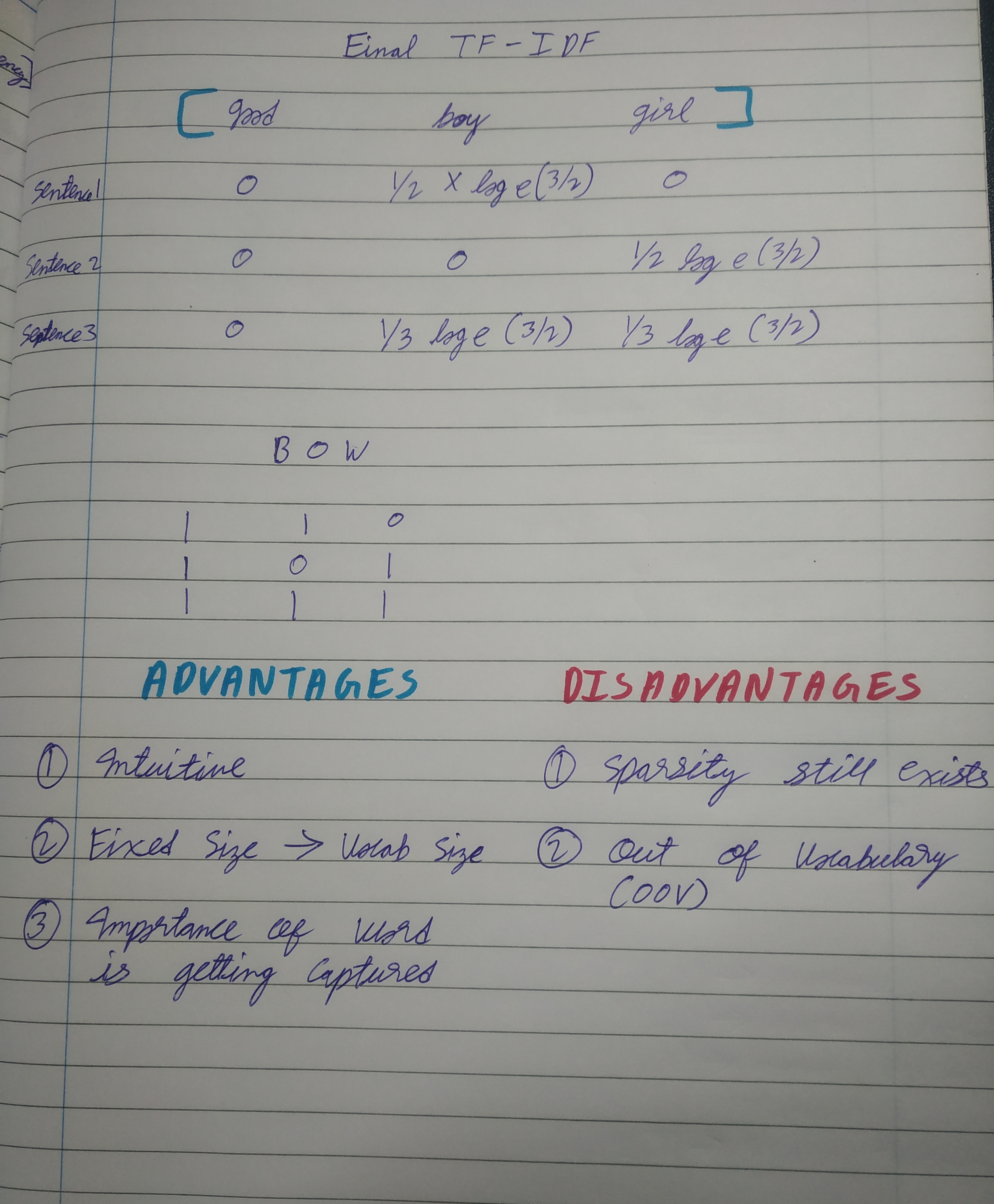

Day 13 of learning AI/ML as a beginner.

Topic: Word Embedding.

I have discussed one-hot encoding, Bag of Words and TF-IDF in my recent posts. These are the count- or frequency-based tools that are a part of word embedding, but before moving forward, let's discuss what word embedding really is.

Word embedding is a term for the representation of words for text analysis, typically as a real-valued vector that encodes the meaning of a word in such a way that words closer together in the vector space are expected to be similar in meaning. For example, happy and excited are similar in meaning, so they are expected to lie close together, whereas angry is the opposite of happy, so it is expected to lie farther away.

Word embeddings are of two types:

1. Count or frequency based: words are represented as vectors based on how many times they appear in a document in the corpus.
2. Deep learning trained models: these include word2vec, which in turn includes continuous bag of words (CBOW) and skip-gram.

My notes are in the attached images.
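To make the two ideas concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions: the helper names `count_vector` and `cosine_similarity` are my own, and the three-dimensional "embedding" values are hand-picked toy numbers, not the output of word2vec or any trained model. The first part builds a count-based vector for a document. The second part uses cosine similarity, one common way to measure closeness in a vector space, to compare the toy vectors.

```python
import math
from collections import Counter


def count_vector(document, vocabulary):
    """Count-based representation: how often each vocabulary word occurs."""
    counts = Counter(document.lower().split())
    return [counts[word] for word in vocabulary]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Type 1: count or frequency based vector for a tiny example document.
vocabulary = ["happy", "excited", "angry"]
doc_vector = count_vector("Happy happy excited", vocabulary)

# Type 2 (illustration only): hypothetical dense vectors chosen by hand.
# A trained model such as word2vec would learn these values from a corpus.
toy_embeddings = {
    "happy": [0.9, 0.8, 0.1],
    "excited": [0.8, 0.9, 0.2],
    "angry": [-0.7, 0.6, 0.1],
}

sim_happy_excited = cosine_similarity(
    toy_embeddings["happy"], toy_embeddings["excited"]
)
sim_happy_angry = cosine_similarity(
    toy_embeddings["happy"], toy_embeddings["angry"]
)

print("count vector:", doc_vector)
print("happy vs excited:", round(sim_happy_excited, 3))
print("happy vs angry:", round(sim_happy_angry, 3))
```

With these toy numbers, happy and excited score higher than happy and angry, which matches the idea that similar words sit closer together. Real embeddings usually have hundreds of dimensions and their values come from training, so treat this only as a picture of the concept.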

More like this

Recommendations from Medial

Sanskar

Keen Learner and Exp... • 5m

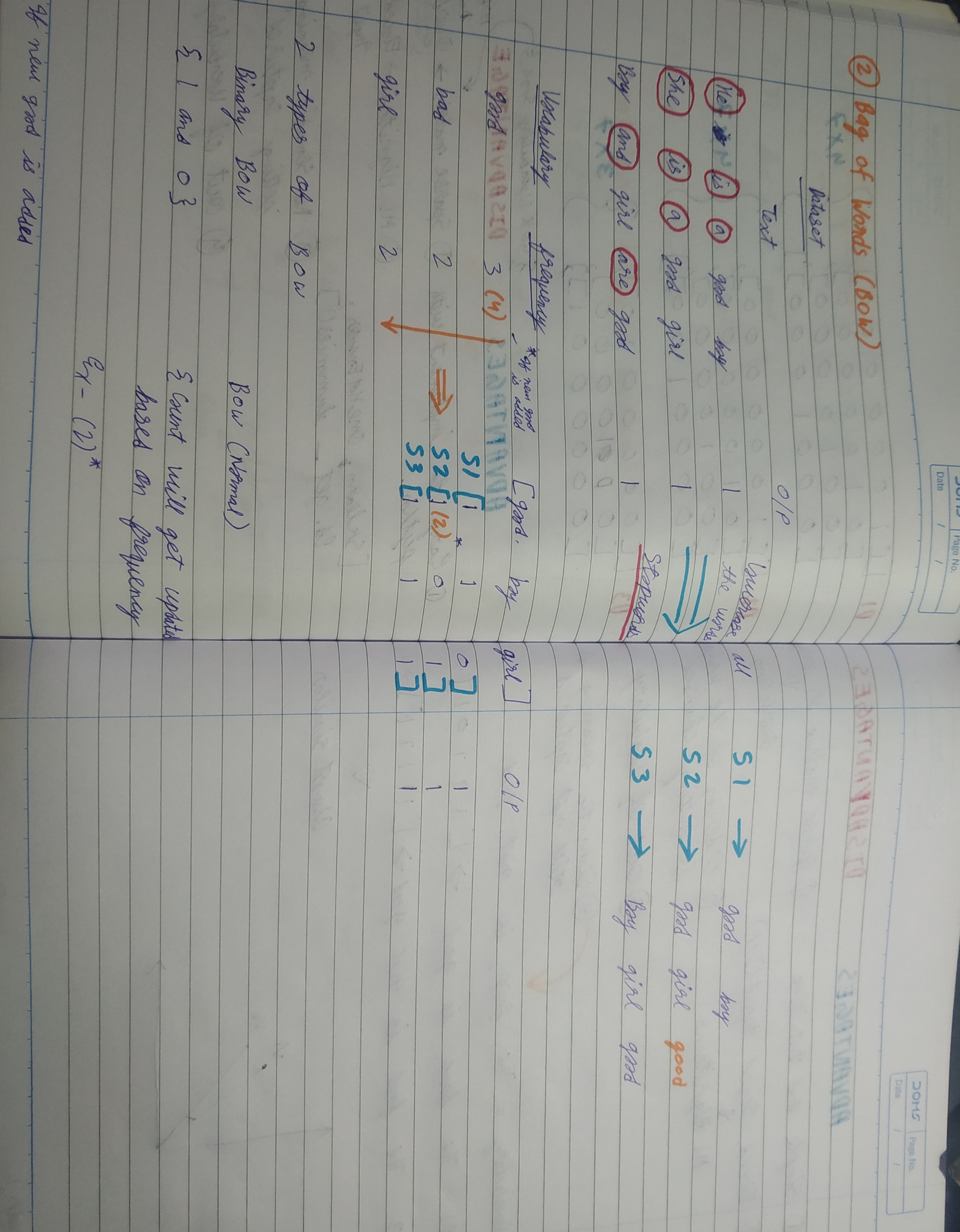

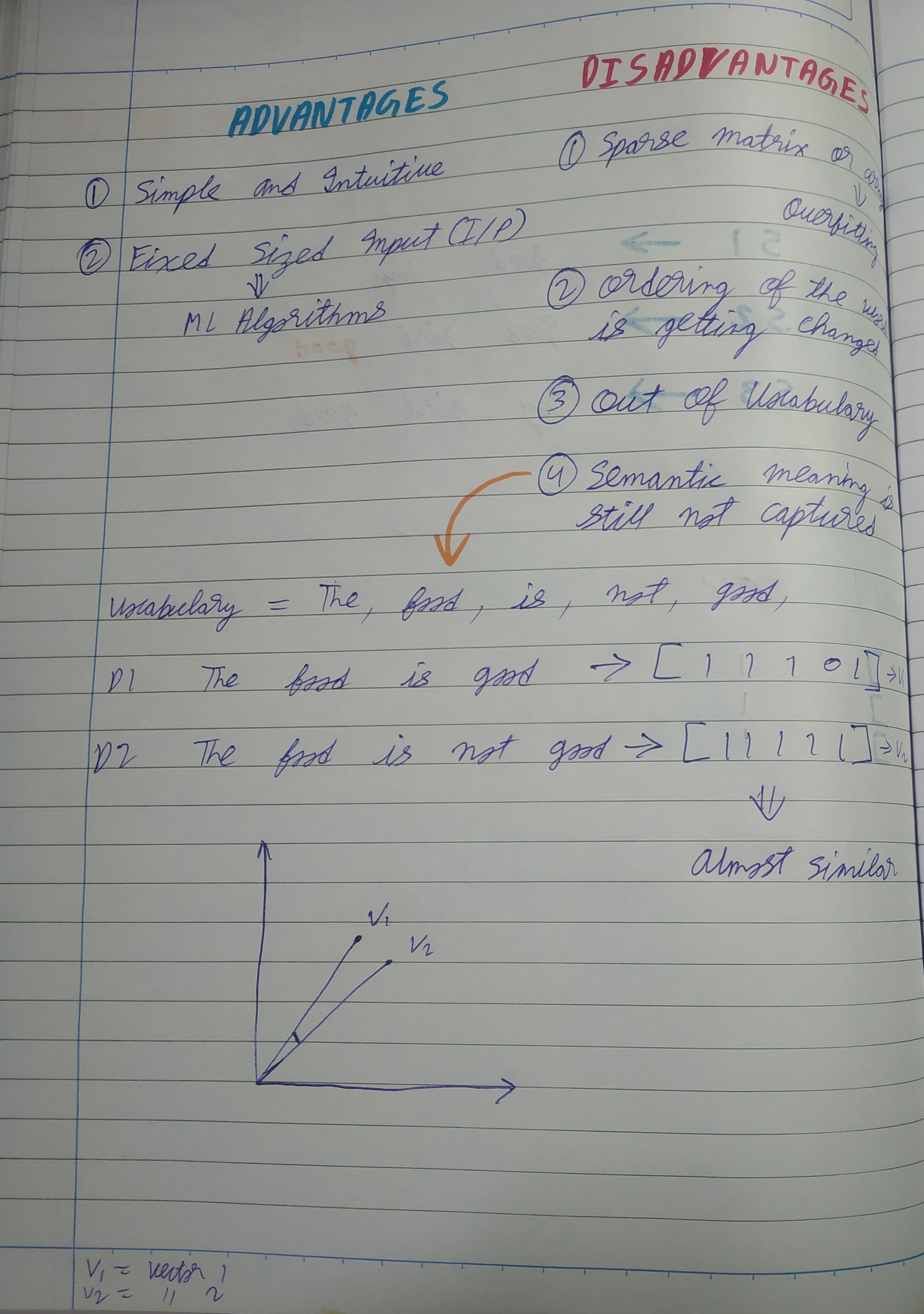

Day 8 of learning AI/ML as a beginner. Topic: Bag of Words (BOW) Yesterday I told you guys about One Hot Encoding which is one way to convert text into vector however with serious disadvantages and to cater to those disadvantages there's another on

See More

Mridul Chandhok

Entrepreneur and Ger... • 5m

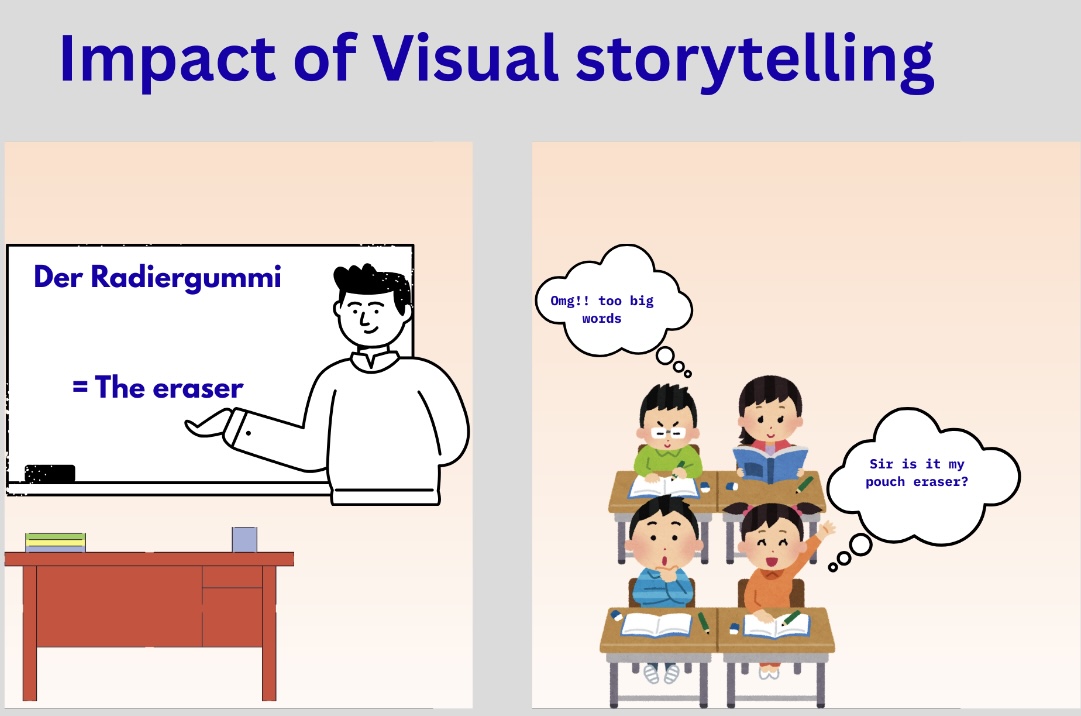

Do you see the power of visual storytelling? 💪 A vocabulary word of German language has been broken down with a visual, which eventually helped the kid imagine the meaning of the word 😇 Every person has its own meaning of the word and visual stor

See More

Rahul Agarwal

Founder | Agentic AI... • 5d

AI apps don’t search text. They search meaning. If you work with LLMs, you’re already relying on vector databases whether you realize it or not. Here’s what’s really happening behind the scenes. First, you organize data into collections. Think of

See MoreDownload the medial app to read full posts, comements and news.

/entrackr/media/post_attachments/wp-content/uploads/2021/08/Accel-1.jpg)