
Subhajit Mandal

Software Developer • 5m

1. LoRA on a reasonably small open model (best balance for limited compute): train low-rank adapters (PEFT/LoRA) while the base weights stay frozen. This needs much less GPU memory than a full fine-tune and works well for a dataset of 700–3,000 rows.
2. Full fine-tune (costly and heavy): only worth it if you have an A100-class GPU or better, or paid cloud GPUs. Not recommended for an early MVP.
3. No fine-tuning (fast and free): use retrieval + prompting (RAG). Keep the base LLM as-is and add relevant context from your 3k+ rows to each prompt. Great when compute is limited.
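To make options 1 and 3 concrete, here is a minimal pure-Python sketch under stated assumptions. It is a toy for intuition, not a training recipe: in practice you would use a library such as Hugging Face PEFT for LoRA, and an embedding model plus a vector store for RAG. The LoRA part shows a frozen weight matrix `W` plus a trainable low-rank update `B·A` scaled by `alpha / r`, with `B` initialized to zero so the adapted layer starts out identical to the base layer. The RAG part swaps in plain bag-of-words cosine similarity in place of learned embeddings to pick the most relevant rows and paste them into the prompt. All function names (`lora_forward`, `trainable_params`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter

# Option 1: LoRA on one linear layer (toy, pure Python; real training would use a library such as PEFT).

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha, r):
    """Compute y = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is frozen; only A (r x d_in) and B (d_out x r) are trained.
    With B initialized to zeros, the layer initially behaves exactly like W.
    """
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


def trainable_params(d_in, d_out, r):
    """Trainable weights for one layer: (full fine-tune, rank-r LoRA adapter)."""
    return d_in * d_out, r * (d_in + d_out)


# Option 3: RAG retrieval. Assumption: bag-of-words cosine similarity stands in for an embedding model.

def bag_of_words(text):
    """Lower-cased word counts; punctuation is ignored."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(rows, query, k=2):
    """Return the k rows most similar to the query (ties keep input order)."""
    q = bag_of_words(query)
    return sorted(rows, key=lambda row: cosine(bag_of_words(row), q), reverse=True)[:k]


def build_prompt(rows, question, k=2):
    """Paste the retrieved rows into the prompt; the base LLM itself is unchanged."""
    context = "\n".join(f"- {row}" for row in retrieve(rows, question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

For one 4096 × 4096 projection with rank r = 8, `trainable_params(4096, 4096, 8)` gives (16777216, 65536): the adapter trains 256 times fewer weights for that layer, so gradients and optimizer state shrink accordingly. That is where most of LoRA's memory saving comes from; the frozen base weights still have to fit on the GPU.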

More like this

Recommendations from Medial

Narendra

Willing to contribut... • 3m

I fine-tuned 3 models this week to understand why people fail. Used LLaMA-2-7B, Mistral-7B, and Phi-2. Different datasets. Different methods (full tuning vs LoRA vs QLoRA). Here's what I learned that nobody talks about: 1. Data quality > Data quantity…


AI Engineer

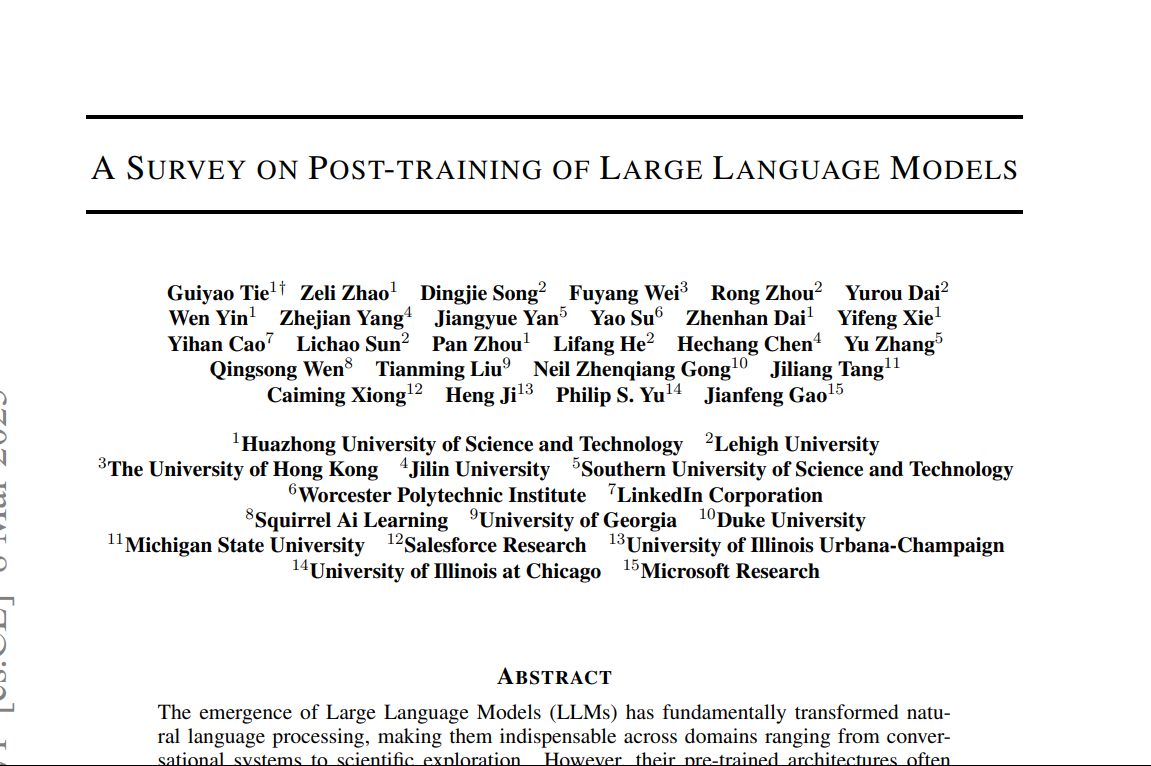

AI Deep Explorer | f... • 11m

"A Survey on Post-Training of Large Language Models" This paper systematically categorizes post-training into five major paradigms: 1. Fine-Tuning 2. Alignment 3. Reasoning Enhancement 4. Efficiency Optimization 5. Integration & Adaptation 1️⃣ Fine-Tuning…


Swamy Gadila

Founder of Friday AI • 2m

From Emotional AI to Enterprise Infrastructure: The Story of Friday AI I don’t know whether to call it a phobia or obsession, but when it comes to my work, I chase correctness relentlessly. I’m not perfect, but I’m consistent. Where It Started Fri


AI Engineer

AI Deep Explorer | f... • 10m

LLM Post-Training: A Deep Dive into Reasoning LLMs This survey paper provides an in-depth examination of post-training methodologies in Large Language Models (LLMs) focusing on improving reasoning capabilities. While LLMs achieve strong performance

