
Sandeep Prasad

Business Coach • 5m

🔥 The government is set to name about 8 Indian teams for foundational model incentives next week. Second-round beneficiaries may include BharatGen. GPU access remains tight: only 17,374 of the planned 34,333 GPUs are installed so far.

🤔 Why It Matters: More subsidised compute means faster India‑tuned models, but the GPU crunch could slow training unless procurement accelerates or inference‑efficient approaches are prioritised.

🚀 Action/Example: Founders should prepare their grant documents and shift toward efficient training and inference (LoRA, distillation, 4‑bit quantization) to make use of the incentive window despite supply constraints.

🎯 Who Benefits: AI researchers, Indic LLM builders, and startups focused on low‑cost inference at scale.

Tap ❤️ if you like this post.
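To put those numbers in perspective, here is a rough back-of-envelope sketch in Python. The GPU counts come from the post. The 7B-parameter model, the 4096×4096 layer and the LoRA rank of 8 are illustrative assumptions, not details of any team or programme, and the memory estimate covers weights only (no activations, optimizer state or KV cache).

```python
# GPU figures quoted in the post.
installed_gpus = 17_374
planned_gpus = 34_333
installed_share = installed_gpus / planned_gpus


def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix.

    LoRA learns two low-rank factors, B (d_out x rank) and A (rank x d_in),
    instead of updating the full matrix.
    """
    return rank * (d_out + d_in)


# Hypothetical 7B-parameter model (assumption for illustration).
params_7b = 7_000_000_000
fp16_gb = weight_memory_gb(params_7b, 16)
int4_gb = weight_memory_gb(params_7b, 4)

# Hypothetical 4096 x 4096 projection layer with LoRA rank 8 (assumption).
full_params = 4096 * 4096
lora_params = lora_trainable_params(4096, 4096, 8)

print(f"Installed share of planned GPUs: {installed_share:.1%}")
print(f"7B weights at 16-bit: {fp16_gb:.1f} GB, at 4-bit: {int4_gb:.1f} GB")
print(f"LoRA trainable fraction for one layer: {lora_params / full_params:.2%}")
```

Roughly half of the planned GPUs are installed, which is why techniques that cut weight memory by about 4x or train well under 1% of a layer's parameters matter while supply is constrained.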

More like this

Recommendations from Medial

Aditya Karnam

Hey I am on Medial • 11m

"Just fine-tuned LLaMA 3.2 using Apple's MLX framework and it was a breeze! The speed and simplicity were unmatched. Here's the LoRA command I used to kick off training: ``` python lora.py \ --train \ --model 'mistralai/Mistral-7B-Instruct-v0.2' \ -

See MoreNarendra

Willing to contribut... • 3m

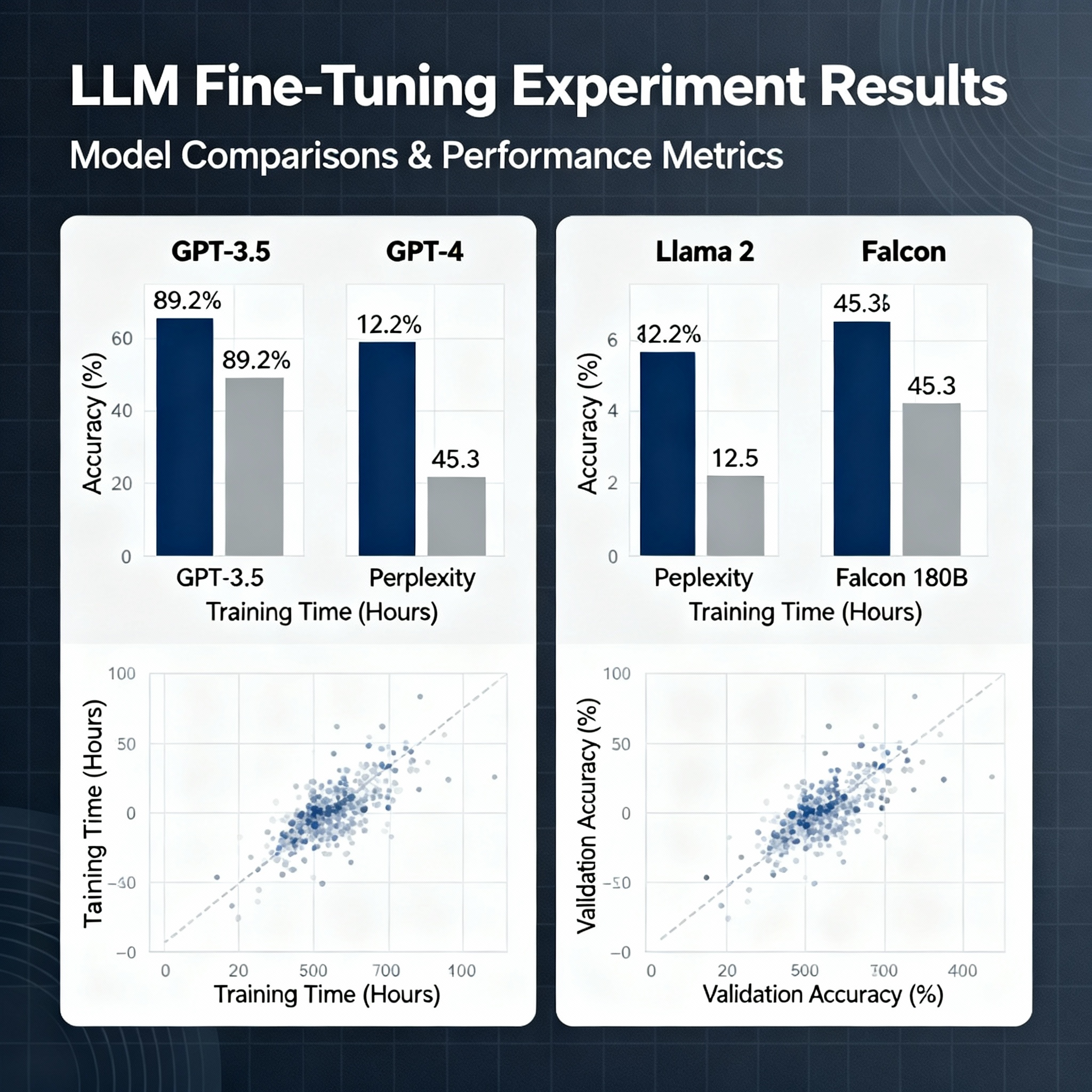

I fine-tuned 3 models this week to understand why people fail. Used LLaMA-2-7B, Mistral-7B, and Phi-2. Different datasets. Different methods (full tuning vs LoRA vs QLoRA). Here's what I learned that nobody talks about: 1. Data quality > Data quan

See More

PraYash

Technology, Business... • 4m

When AI changed the rules, cloud computing had to change too. And that’s exactly where Oracle took the lead. Most cloud giants like AWS, Azure, and GCP still rely on virtualization — where resources like CPU, GPU, and memory are shared across users.

See More

AI Engineer

AI Deep Explorer | f... • 10m

LLM Post-Training: A Deep Dive into Reasoning LLMs This survey paper provides an in-depth examination of post-training methodologies in Large Language Models (LLMs) focusing on improving reasoning capabilities. While LLMs achieve strong performance

See MoreVansh Khandelwal

Full Stack Web Devel... • 15d

Small language models (SLMs) are compact NLP models optimized for edge devices (smartphones, IoT, embedded systems). With fewer parameters they enable faster, low‑latency on‑device inference, improving privacy by avoiding cloud transfers, reducing en

See MoreMada Dhivakar

Let’s connect and bu... • 8m

Why Grok AI Outperformed ChatGPT & Gemini — Without Spending Billions In 2025, leading AI companies invested heavily in R&D: ChatGPT: $75B Gemini: $80B Meta: $65B Grok AI, developed by Elon Musk's xAI, raised just $10B yet topped global benchmar

See More

Parampreet Singh

Python Developer 💻 ... • 12m

3B LLM outperforms 405B LLM 🤯 Similarly, a 7B LLM outperforms OpenAI o1 & DeepSeek-R1 🤯 🤯 LLM: llama 3 Datasets: MATH-500 & AIME-2024 This has done on research with compute optimal Test-Time Scaling (TTS). Recently, OpenAI o1 shows that Test-

See More

Download the medial app to read full posts, comements and news.

/entrackr/media/post_attachments/wp-content/uploads/2021/08/Accel-1.jpg)