
Parampreet Singh

Python Developer 💻 ... • 1y

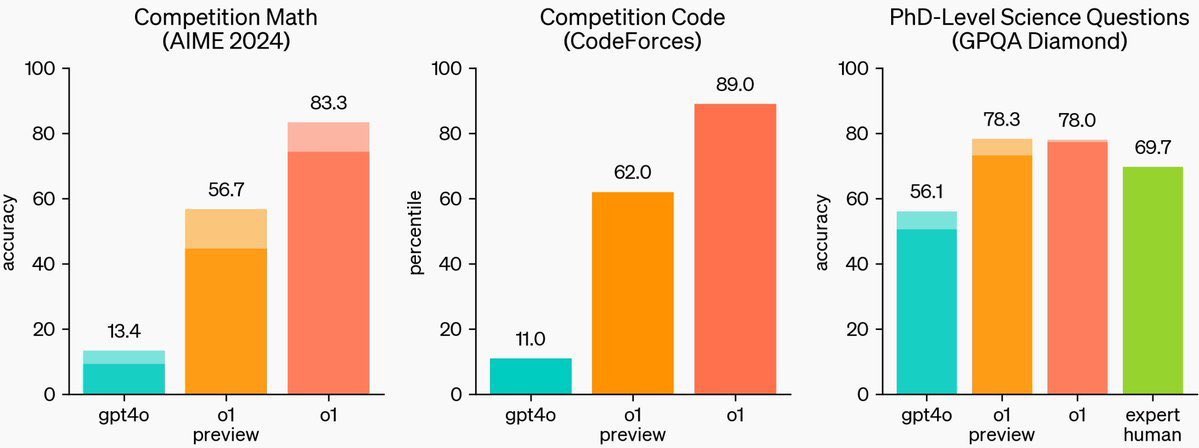

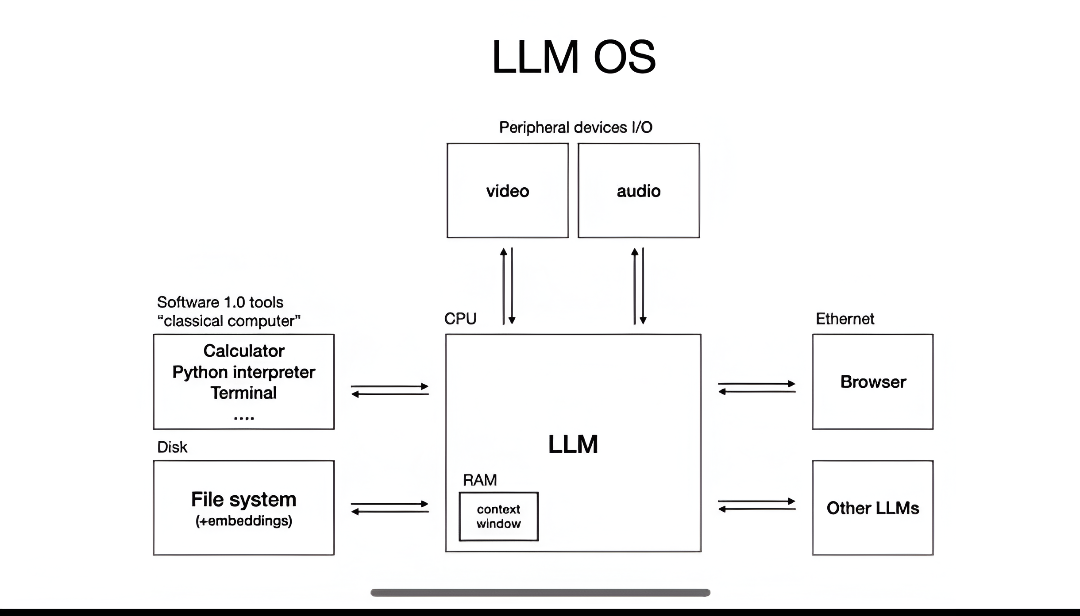

3B LLM outperforms 405B LLM 🤯 Similarly, a 7B LLM outperforms OpenAI o1 & DeepSeek-R1 🤯 🤯

LLM: Llama 3

Datasets: MATH-500 & AIME-2024

These results come from research on compute-optimal Test-Time Scaling (TTS).

Recently, OpenAI o1 showed that Test-Time Scaling (TTS) can enhance the reasoning capabilities of LLMs by allocating additional computation at inference time. In simple terms: "think slowly with long Chain-of-Thought."

Another route is to generate multiple outputs for a sample, pick the best one, and train the model again, which eventually lets a 0.5B LLM perform better than GPT-4o. But that costs more computation. To make it efficient, they used search-based methods with reward-aware compute-optimal TTS.

CC Paper: Can 1B LLM Surpass 405B LLM? Rethinking Compute-Optimal Test-Time Scaling

🔗 https://arxiv.org/abs/2502.06703

#Openai #LLM #GPT #GPTo1 #deepseek #llama3
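To make the "generate multiple outputs and pick the best one" idea concrete, here is a minimal best-of-N sketch in Python. It is only an illustration, not the paper's method: `best_of_n`, `toy_generate`, and `toy_score` are hypothetical names, the generator is a random stand-in for sampling from an LLM, and the scorer is a stand-in for a learned reward model.

```python
import random
from typing import Callable, Tuple


def best_of_n(
    prompt: str,
    generate: Callable[[str, random.Random], str],
    score: Callable[[str, str], float],
    n: int = 8,
    seed: int = 0,
) -> Tuple[str, float]:
    """Sample n candidate answers and return the highest-scoring one."""
    rng = random.Random(seed)
    # Spend extra inference-time compute: draw n samples instead of one.
    candidates = [generate(prompt, rng) for _ in range(n)]
    # Score every candidate with the (assumed) reward function.
    scored = [(score(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best, best_score


def toy_generate(prompt: str, rng: random.Random) -> str:
    # Hypothetical stand-in for an LLM: a noisy guess at 17 + 25.
    return str(42 + rng.choice([-2, -1, 0, 0, 1, 2]))


def toy_score(prompt: str, answer: str) -> float:
    # Hypothetical stand-in for a reward model: closer to 42 is better.
    return -abs(int(answer) - 42)


if __name__ == "__main__":
    answer, reward = best_of_n("17 + 25 = ?", toy_generate, toy_score, n=16)
    print(answer, reward)
```

Raising `n` spends more compute per question, which is the trade-off the post points at: more samples give the scorer more chances to find a good answer, at a higher inference cost.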

