
Vishu Bheda

•

Medial • 6m

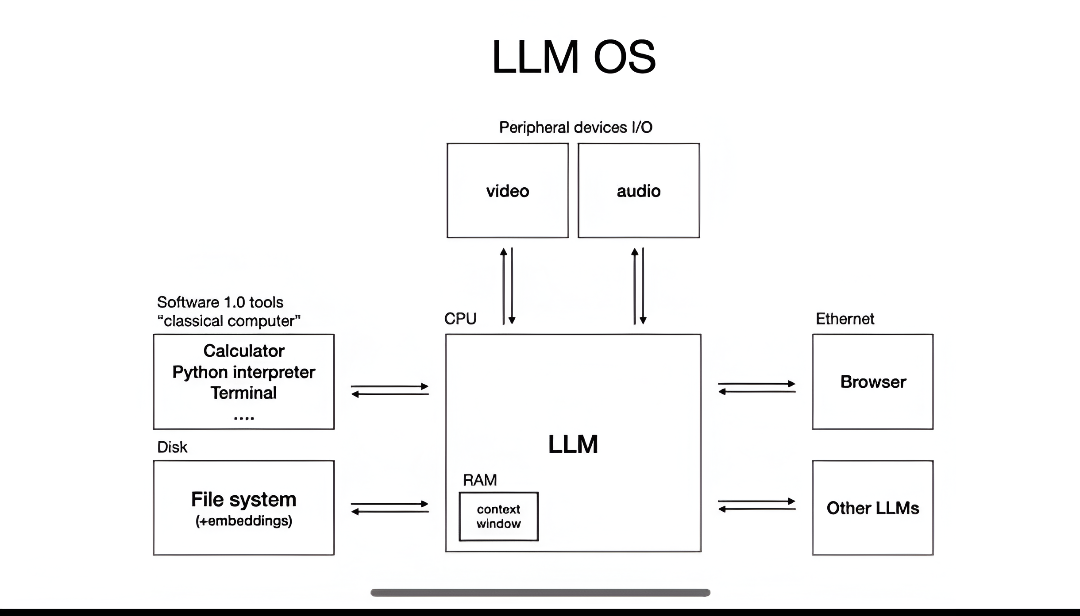

I spent 4+ hours rewatching Karpathy’s YC keynote.

And I realized: we’ve been looking at LLMs the wrong way. They’re not just “AI models.” They’re a new kind of computer.

• LLM = CPU
• Context window = memory
• Orchestration of tools + compute = like how an OS manages resources

The ecosystem already looks like old OS battles:

• OpenAI, Anthropic, Gemini = Windows/macOS
• Llama, Qwen, DeepSeek = Linux
• Apps (Cursor, Notion AI, etc.) = cross-platform, can run on any LLM backend

Switching between GPT, Claude, or Gemini? → literally just a dropdown.

But here’s the key insight: We’re basically back in the 1960s of computing.

• Compute is expensive → everything lives in the cloud
• Users are thin clients → just pinging infra over APIs
• No GUI yet → prompting feels like a terminal
• No universal shell → only apps, wrappers, hacks

And unlike electricity, computers, or the internet (which started in govt/enterprise first)… LLMs went straight to consumers. Billions got access before big corporations even figured them out. That’s crazy.

So no, LLMs aren’t just tools or APIs. They’re a foundational computing layer. A programmable OS for intelligence.

We’re still very early. The personal LLM moment hasn’t happened yet… but it will.
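To make the "context window = memory" and "just a dropdown" points concrete, here is a minimal sketch of an app that runs on any backend. Everything in it is an assumption for illustration: `fake_gpt`, `fake_claude`, `fake_llama`, `BACKENDS`, and `App` are hypothetical names, the backends are local stubs rather than real provider SDK calls, and the context window is measured crudely in characters instead of tokens.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical stand-ins for real provider calls; nothing here touches a network.
def fake_gpt(prompt: str) -> str:
    return f"[gpt] {prompt}"


def fake_claude(prompt: str) -> str:
    return f"[claude] {prompt}"


def fake_llama(prompt: str) -> str:
    return f"[llama] {prompt}"


# The "dropdown": every backend exposes the same prompt -> text shape.
BACKENDS: Dict[str, Callable[[str], str]] = {
    "gpt": fake_gpt,
    "claude": fake_claude,
    "llama": fake_llama,
}


@dataclass
class App:
    backend: str = "gpt"
    max_context_chars: int = 200  # assumed budget, standing in for a token limit
    history: List[str] = field(default_factory=list)

    def ask(self, message: str) -> str:
        self.history.append(message)
        # Walk backwards through history and keep the newest messages that fit
        # in the budget, the way limited memory forces older data out.
        window: List[str] = []
        used = 0
        for past in reversed(self.history):
            if used + len(past) > self.max_context_chars:
                break
            window.append(past)
            used += len(past)
        prompt = "\n".join(reversed(window))
        return BACKENDS[self.backend](prompt)


app = App()
first = app.ask("hello")
app.backend = "llama"  # switching the "dropdown" changes nothing else in the app
second = app.ask("again")
```

The app code never changes when the backend does; only the dictionary key moves. Real providers differ in request format, limits, and pricing, so an actual adapter layer would need more than this.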


More like this

Recommendations from Medial

Parampreet Singh

Python Developer 💻 ... • 12m

A 3B LLM outperforms a 405B LLM 🤯 Similarly, a 7B LLM outperforms OpenAI o1 & DeepSeek-R1 🤯 🤯 LLM: Llama 3. Datasets: MATH-500 & AIME-2024. This comes from research on compute-optimal Test-Time Scaling (TTS). Recently, OpenAI o1 shows that Test-


Account Deleted

Hey I am on Medial • 7m

Master LLMs without paying a rupee. LLMCourse is a complete learning track to go from zero to LLM expert — using just Google Colab. You’ll learn: – The core math, Python, and neural network concepts – How to train your own LLMs – How to build and

Sarthak Gupta

17 | Building Doodle... • 10m

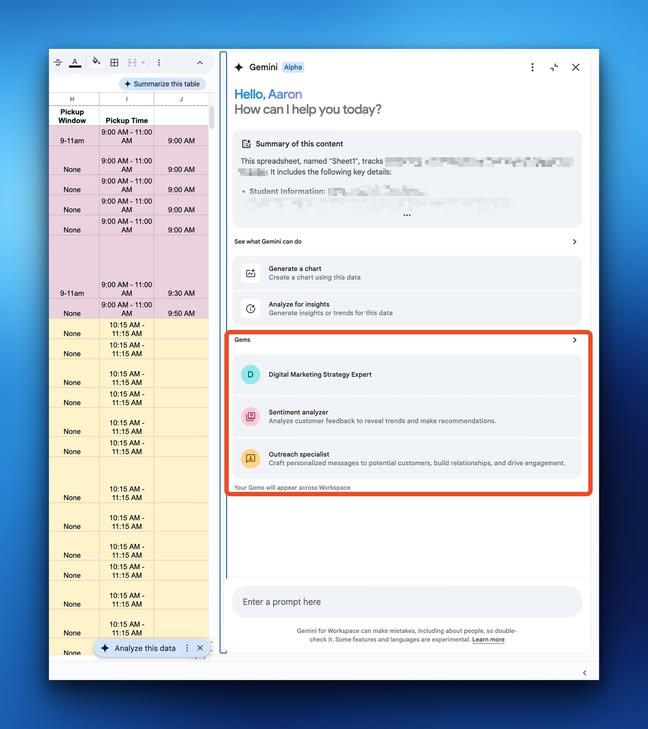

🚀 For the first time in LLM history! Introducing Agentic Compound LLMs in AnyLLM. What are agentic LLMs? Agentic LLMs have access to all the 10+ LLMs in AnyLLM and know when to use any one of them to perform a specific task. They also have acces


Kimiko

Startups | AI | info... • 9m

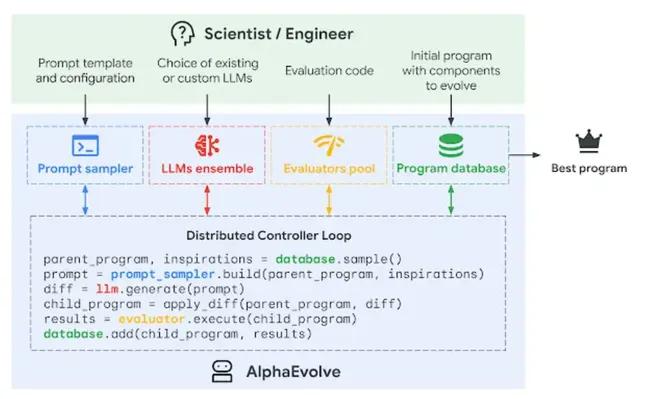

Google DeepMind just dropped AlphaEvolve‼️ A Gemini powered coding agent that evolves algorithms and outperforms AlphaTensor. It delivers: — 23% matrix speedup — 32.5% GPU kernel boost — 0.7% global compute recovery Using Gemini Flash + Pro, it re

