
Aman Tiwari

Founder | Kalika OS • 5m

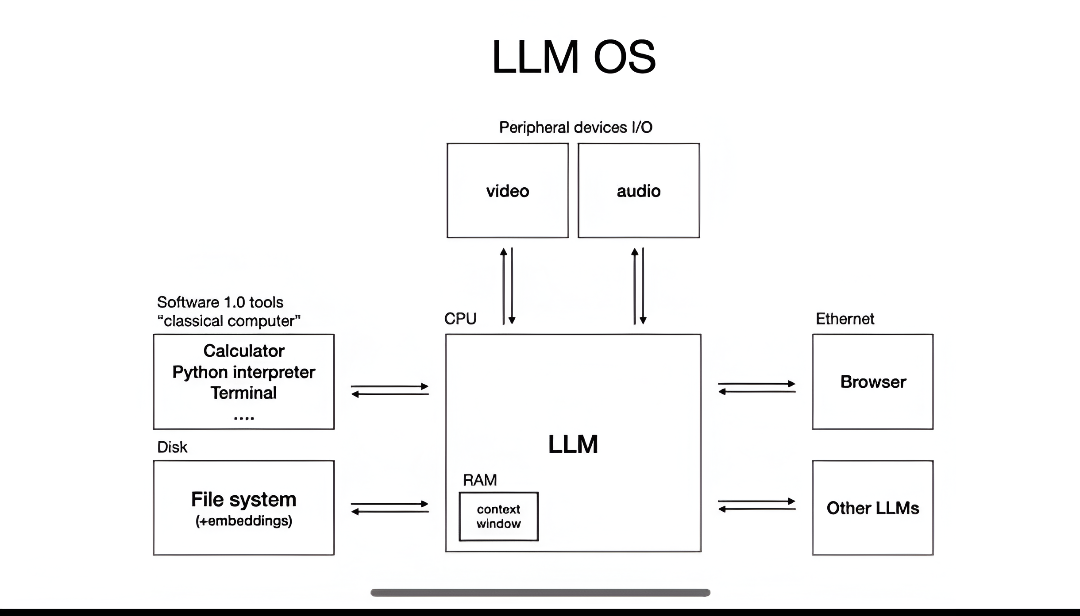

🤔 I'm confused about one thing: should I integrate my LLM directly into the architecture of my OS, or should I launch it separately and connect it to the OS infrastructure via MCP servers? All suggestions are welcome...🫡


