
Aditya Karnam

Hey I am on Medial • 11m

Just fine-tuned LLaMA 3.2 using Apple's MLX framework and it was a breeze! The speed and simplicity were unmatched. Here's the LoRA command I used to kick off training:

```
python lora.py \
  --train \
  --model 'mistralai/Mistral-7B-Instruct-v0.2' \
  --data '/path/to/data' \
  --batch-size 2 \
  --lora-layers 8 \
  --iters 1000
```

No cloud needed, just my Mac and MLX! Highly recommend for efficient local fine-tuning. #MLX #LLaMA3.2 #LoRA #FineTuning #AppleSilicon
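The post does not say what the `--data` directory contains. As a rough sketch, assuming the `lora.py` script from the mlx-examples repository, which (to my understanding) reads `train.jsonl` and `valid.jsonl` files with one `{"text": ...}` object per line, the folder could be prepared like this. The helper name `write_splits`, the 10% validation split, and the sample texts are all hypothetical; check the README of the version you use before relying on this layout.

```python
import json
from pathlib import Path


def write_splits(texts, out_dir, valid_fraction=0.1):
    """Write train.jsonl and valid.jsonl, one {"text": ...} object per line.

    Assumption: this is the layout the LoRA script expects in --data.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    # Hold out at least one example for validation.
    n_valid = max(1, int(len(texts) * valid_fraction))
    splits = {"train": texts[:-n_valid], "valid": texts[-n_valid:]}

    counts = {}
    for name, rows in splits.items():
        path = out_dir / f"{name}.jsonl"
        with path.open("w", encoding="utf-8") as f:
            for text in rows:
                f.write(json.dumps({"text": text}, ensure_ascii=False) + "\n")
        counts[name] = len(rows)
    return counts


if __name__ == "__main__":
    # Hypothetical examples; replace with your own training texts.
    examples = [f"Question {i}\nAnswer {i}" for i in range(20)]
    print(write_splits(examples, "data"))
```

The resulting folder would then be the value passed to `--data` in the command above.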

More like this

Recommendations from Medial

Narendra

Willing to contribut... • 3m

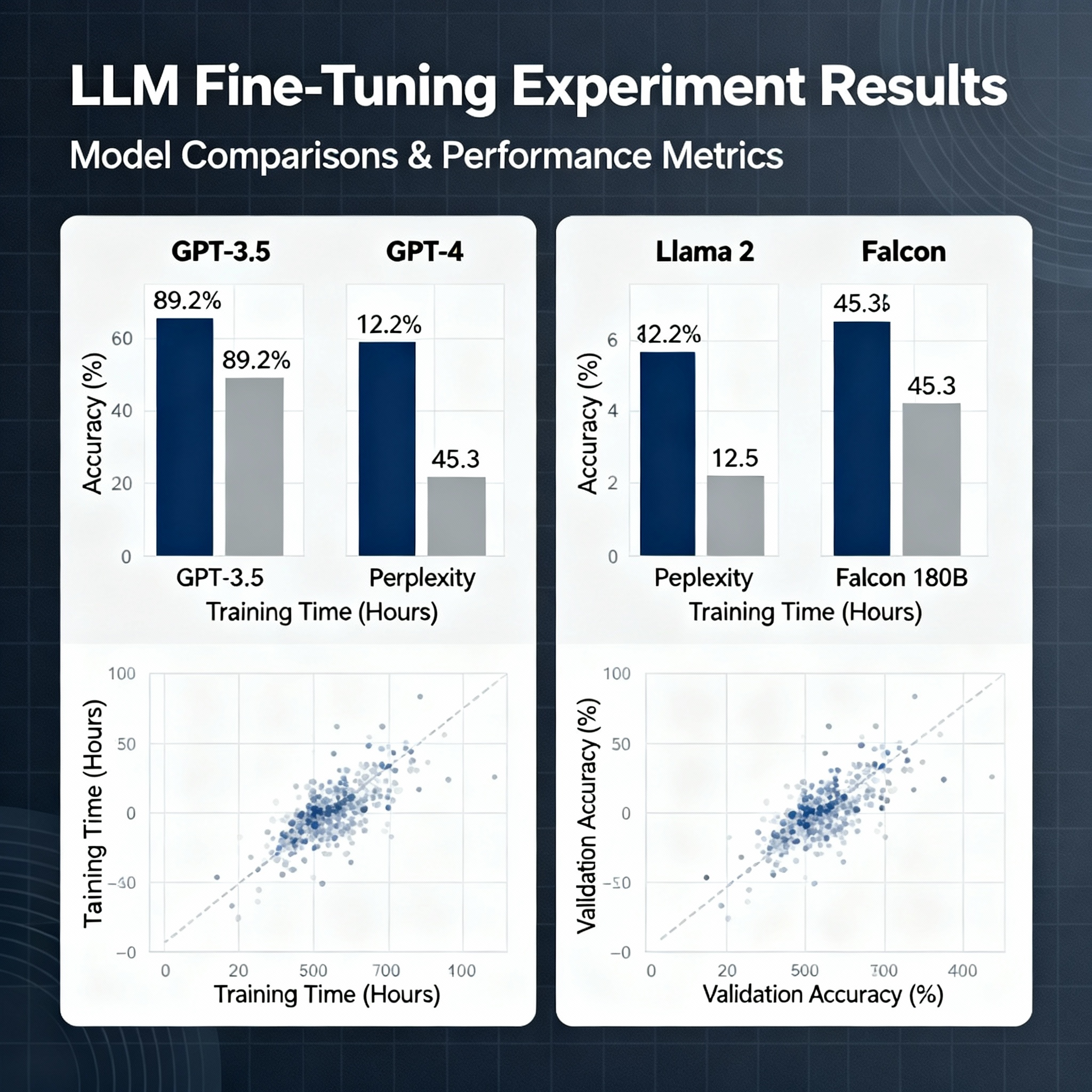

I fine-tuned 3 models this week to understand why people fail. Used LLaMA-2-7B, Mistral-7B, and Phi-2. Different datasets. Different methods (full tuning vs LoRA vs QLoRA). Here's what I learned that nobody talks about: 1. Data quality > Data quan

See More
