Narendra

Willing to contribut... • 3m

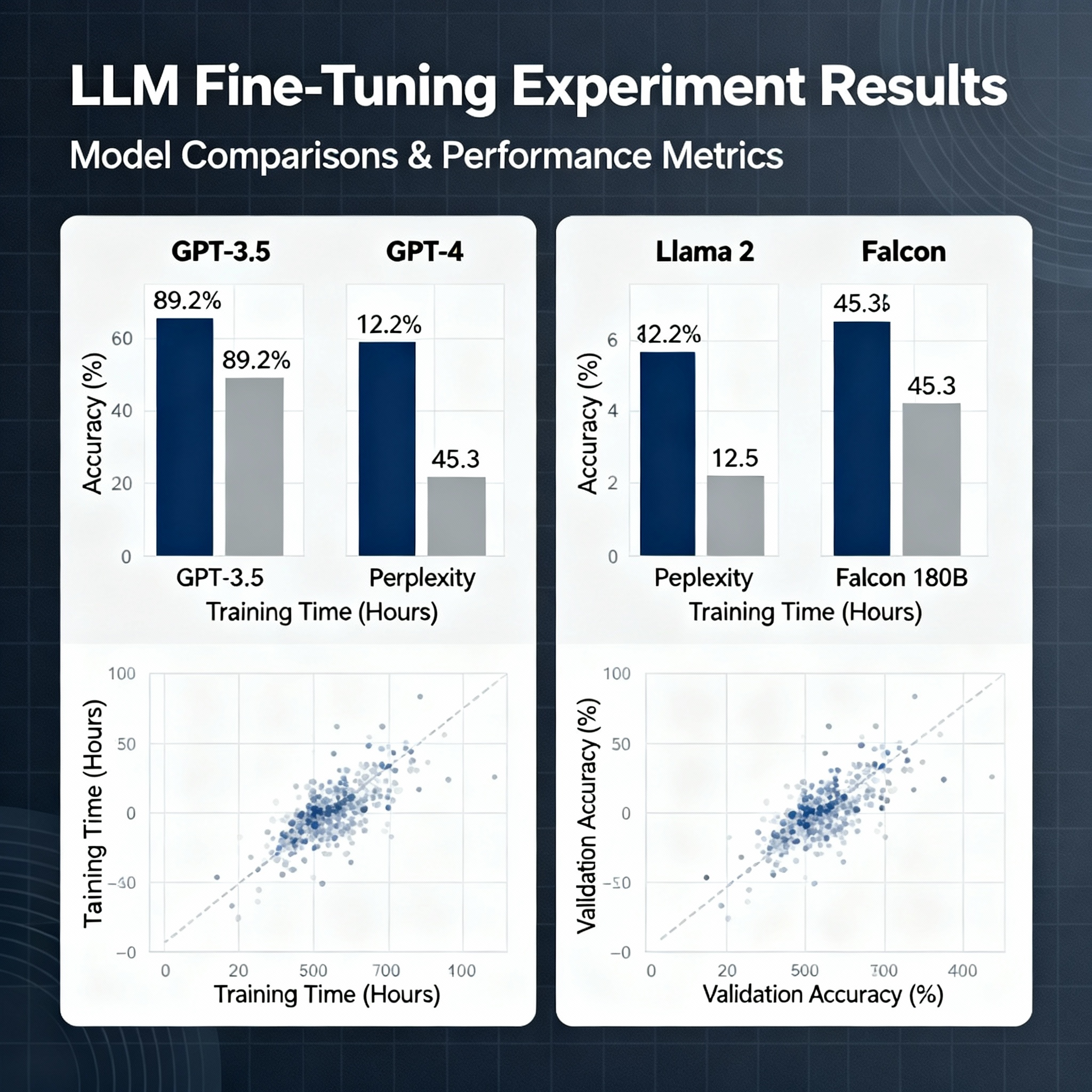

I fine-tuned 3 models this week to understand why people fail. I used LLaMA-2-7B, Mistral-7B, and Phi-2, with different datasets and different methods (full tuning vs LoRA vs QLoRA).

Here's what I learned that nobody talks about:

1. Data quality > data quantity (but no one checks quality)

I ran the same fine-tuning job twice:

- First: 10,000 examples, auto-generated
- Second: 1,000 examples, manually curated

The 1,000-example model performed 23% better on my test set. But here's the thing: I had to manually inspect samples to know this. Most tools just accept whatever CSV you upload.

2. QLoRA isn't always the answer

QLoRA used 75% less GPU memory than LoRA. But it was 66% slower and cost 40% more (because of the longer runtime). For my use case, LoRA was better. But the "conventional wisdom" says QLoRA for everything.

The real answer: it depends on your data size, timeline, and budget.

3. Hyperparameter tuning isn't random, but it looks random

I tested learning rates from 1e-5 to 1e-3:

- Below 5e-5: training barely moved (10+ hours, no convergence)
- Above 5e-4: loss exploded immediately
- Sweet spot: 1e-4 to 5e-4

But that's for this specific dataset and model combo. Every project needs its own tuning. Yet people copy parameters from tutorials and hope.

What I'm building: a system that does this analysis automatically:

→ Scans your data

→ Recommends LoRA vs QLoRA vs full tuning

→ Estimates training time + cost for each option

→ Runs mini-experiments (10% of data) to validate hyperparameters before full training

Think of it as "pair programming" for fine-tuning.

Piloting with 5 users next month. If you've struggled with this, let's talk.

#MachineLearning #LLM #AI #FineTuning #BuildInPubl #DataScience
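On point 1: the post says quality only showed up after manual inspection, and that most tools accept any CSV. Here is a minimal sketch of a pre-flight scan that could flag the obvious problems before a job starts. The post gives no schema, so the `prompt` and `response` column names, the `min_chars` threshold, and the `scan_examples` function are all assumptions for illustration. This is not the system described above, and it does not replace reading samples by hand.

```python
import csv
import io


def scan_examples(csv_text, min_chars=10):
    """Count obvious quality problems in a prompt/response CSV.

    The column names and the min_chars threshold are illustrative
    assumptions, not taken from the post.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    report = {
        "total": len(rows),
        "empty_field": 0,
        "short_response": 0,
        "exact_duplicate": 0,
    }
    seen = set()
    for row in rows:
        prompt = (row.get("prompt") or "").strip()
        response = (row.get("response") or "").strip()
        if not prompt or not response:
            report["empty_field"] += 1
        elif len(response) < min_chars:
            report["short_response"] += 1
        # Case-insensitive match on the full pair counts as a duplicate.
        key = (prompt.lower(), response.lower())
        if key in seen:
            report["exact_duplicate"] += 1
        seen.add(key)
    return report


sample = """prompt,response
What is LoRA?,A low-rank adapter method for fine-tuning large models.
What is LoRA?,A low-rank adapter method for fine-tuning large models.
Explain QLoRA,
Define epoch,ok
"""
report = scan_examples(sample)
print(report)
```

A scan like this only catches mechanical issues (blank fields, very short answers, repeated rows). Whether an auto-generated answer is actually correct still needs a human or a separate evaluation.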
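On point 3 and the "mini-experiments (10% of data)" idea: a rough sketch of how a small learning-rate sweep on a fixed subsample might be organised. `train_fn` is a hypothetical callback standing in for a short real training run, and `toy_train` is a made-up quadratic objective, chosen only so that small rates crawl and large rates diverge. Its numbers say nothing about LLaMA-2, Mistral, or Phi-2.

```python
import math
import random


def log_spaced_rates(low, high, count):
    """Return `count` learning rates evenly spaced on a log scale."""
    lo, hi = math.log10(low), math.log10(high)
    step = (hi - lo) / (count - 1)
    return [10 ** (lo + i * step) for i in range(count)]


def mini_sweep(examples, rates, train_fn, fraction=0.1, seed=0):
    """Run train_fn once per rate on the same random subsample.

    train_fn(subset, lr) -> final loss is a hypothetical callback
    supplied by the caller. Returns the best rate and all losses.
    """
    rng = random.Random(seed)
    size = max(1, int(len(examples) * fraction))
    subset = rng.sample(examples, size)
    losses = {lr: train_fn(subset, lr) for lr in rates}
    # Ignore runs whose loss overflowed to inf or became NaN.
    finite = {lr: v for lr, v in losses.items() if math.isfinite(v)}
    best = min(finite, key=finite.get)
    return best, losses


def toy_train(subset, lr, steps=50, curvature=4000.0):
    """Toy stand-in: gradient descent on 0.5 * curvature * w**2.

    It ignores the data; a real callback would train on `subset`.
    """
    w = 1.0
    for _ in range(steps):
        w -= lr * curvature * w
    return 0.5 * curvature * w * w


examples = list(range(1000))  # placeholder for 1,000 training examples
rates = log_spaced_rates(1e-5, 1e-3, 5)
best, losses = mini_sweep(examples, rates, toy_train)
print(best, losses)
```

Keeping the subsample fixed across rates (same `seed`) means differences in loss come from the learning rate rather than from which examples were drawn.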
