
Keval Rajpal

Software Engineer • 6m

I recently learned how LLMs work, including the maths behind attention layers and transformers. I'm continuously trying to keep up with the rapid developments in the AI space, and it's getting so overwhelming 🥲 Now there's "Mixture-of-Agents (MoA): A Breakthrough in LLM Performance"
Link - https://www.marktechpost.com/2025/08/09/mixture-of-agents-moa-a-breakthrough-in-llm-performance/
Original research paper - https://arxiv.org/pdf/2406.04692
Happy Learning 👍
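The linked paper describes Mixture-of-Agents as a layered setup: several "proposer" LLMs answer a prompt, their answers are passed to the next layer as extra context, and a final "aggregator" model synthesizes one response. Below is a minimal sketch of that control flow only, assuming each model is a plain Python callable from prompt to response. The stub models and the `build_aggregate_prompt` wording are hypothetical placeholders, not the paper's actual prompt or any real LLM API.

```python
from typing import Callable, List

# A "model" is any function mapping a prompt string to a response string.
# In practice each would wrap a real LLM call; here they are hypothetical stubs.
Model = Callable[[str], str]


def build_aggregate_prompt(user_prompt: str, previous: List[str]) -> str:
    """Hypothetical template: list the prior layer's answers as references."""
    if not previous:
        return user_prompt
    refs = "\n".join(f"{i + 1}. {r}" for i, r in enumerate(previous))
    return (
        "Synthesize these responses into a single, better answer:\n"
        f"{refs}\n\nQuestion: {user_prompt}"
    )


def mixture_of_agents(user_prompt: str, layers: List[List[Model]], aggregator: Model) -> str:
    """Run the proposer layers in sequence, then one final aggregator."""
    previous: List[str] = []
    for layer in layers:
        prompt = build_aggregate_prompt(user_prompt, previous)
        # Every agent in a layer sees the original question plus all prior-layer outputs.
        previous = [agent(prompt) for agent in layer]
    return aggregator(build_aggregate_prompt(user_prompt, previous))


if __name__ == "__main__":
    # Toy stand-ins that report how long a prompt they received, so the flow is visible.
    def make_stub(name: str) -> Model:
        return lambda p: f"{name} saw {len(p)} chars"

    layers = [[make_stub("A"), make_stub("B")], [make_stub("C"), make_stub("D")]]
    print(mixture_of_agents("What is 2 + 2?", layers, make_stub("AGG")))
```

Swapping the stubs for real model clients is the only change needed to experiment; the layer count and agents per layer are free choices in this sketch.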

More like this


Parampreet Singh

Python Developer 💻 ... • 11m

3B LLM outperforms 405B LLM 🤯 Similarly, a 7B LLM outperforms OpenAI o1 & DeepSeek-R1 🤯 🤯
LLM: Llama 3
Datasets: MATH-500 & AIME-2024
This was shown in research on compute-optimal Test-Time Scaling (TTS). Recently, OpenAI o1 showed that Test-
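Test-time scaling spends extra compute at inference time instead of on a bigger model. One of the simplest strategies in that family is best-of-N: sample several candidate answers and keep the one a verifier or reward model scores highest. The sketch below assumes a hypothetical `generate` sampler and `score` function (toy stand-ins here); it is not the specific search procedure used in the research mentioned above.

```python
import random
from typing import Callable, List, Tuple


def best_of_n(
    prompt: str,
    generate: Callable[[str, random.Random], str],
    score: Callable[[str, str], float],
    n: int,
    seed: int = 0,
) -> Tuple[str, float]:
    """Sample n candidates and return the highest-scoring one with its score."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    candidates: List[str] = [generate(prompt, rng) for _ in range(n)]
    scored = [(c, score(prompt, c)) for c in candidates]
    return max(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Hypothetical toy "policy": guesses the answer to 17 * 3 with some noise.
    def noisy_generate(prompt: str, rng: random.Random) -> str:
        return str(51 + rng.randint(-3, 3))

    # Hypothetical toy "verifier": rewards answers closer to the true product.
    def toy_score(prompt: str, answer: str) -> float:
        return -abs(int(answer) - 51)

    for n in (1, 4, 16):
        answer, s = best_of_n("What is 17 * 3?", noisy_generate, toy_score, n)
        print(f"N={n:>2}: answer={answer}, score={s}")
```

Because the sampler is seeded, a larger N here always includes the smaller N's candidates, so the best score can only stay the same or improve as N grows.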


Rahul Agarwal

Founder | Agentic AI... • 1m

8 common LLM types used in modern agent systems.
1) GPT (Generative Pretrained Transformer): core model for many agents, strong in language understanding, generation, and instruction following.
2) MoE (Mixture of Experts): routes each input token to specialized
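In a Mixture-of-Experts layer, a small learned router scores every expert for each token, keeps only the top-k, and mixes those experts' outputs, so most parameters stay idle for any given token. A minimal plain-Python sketch of top-k gating (softmax over the selected logits, as in Mixtral-style routing), assuming tiny linear "experts" and hand-picked or random weights purely for illustration; real MoE layers use trained feed-forward experts and usually add a load-balancing loss.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major: matrix[i] is row i


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(xs: Vector) -> Vector:
    peak = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: Matrix, experts: List[Matrix], k: int) -> Vector:
    """Top-k MoE for a single token x.

    router: one row per expert, producing one logit per expert.
    experts: each a square matrix standing in for a feed-forward block.
    """
    logits = matvec(router, x)
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights are normalized over the selected experts only.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for g, i in zip(gates, top):
        y = matvec(experts[i], x)  # only the chosen experts are evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    d, n_experts = 4, 8

    def rand_matrix(rows: int, cols: int) -> Matrix:
        return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

    router = rand_matrix(n_experts, d)
    experts = [rand_matrix(d, d) for _ in range(n_experts)]
    token = [rng.gauss(0, 1) for _ in range(d)]
    print(moe_forward(token, router, experts, k=2))
```

With k=2 of 8 experts, only a quarter of the expert weights are touched per token, which is the efficiency argument behind MoE.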

Pulakit Bararia

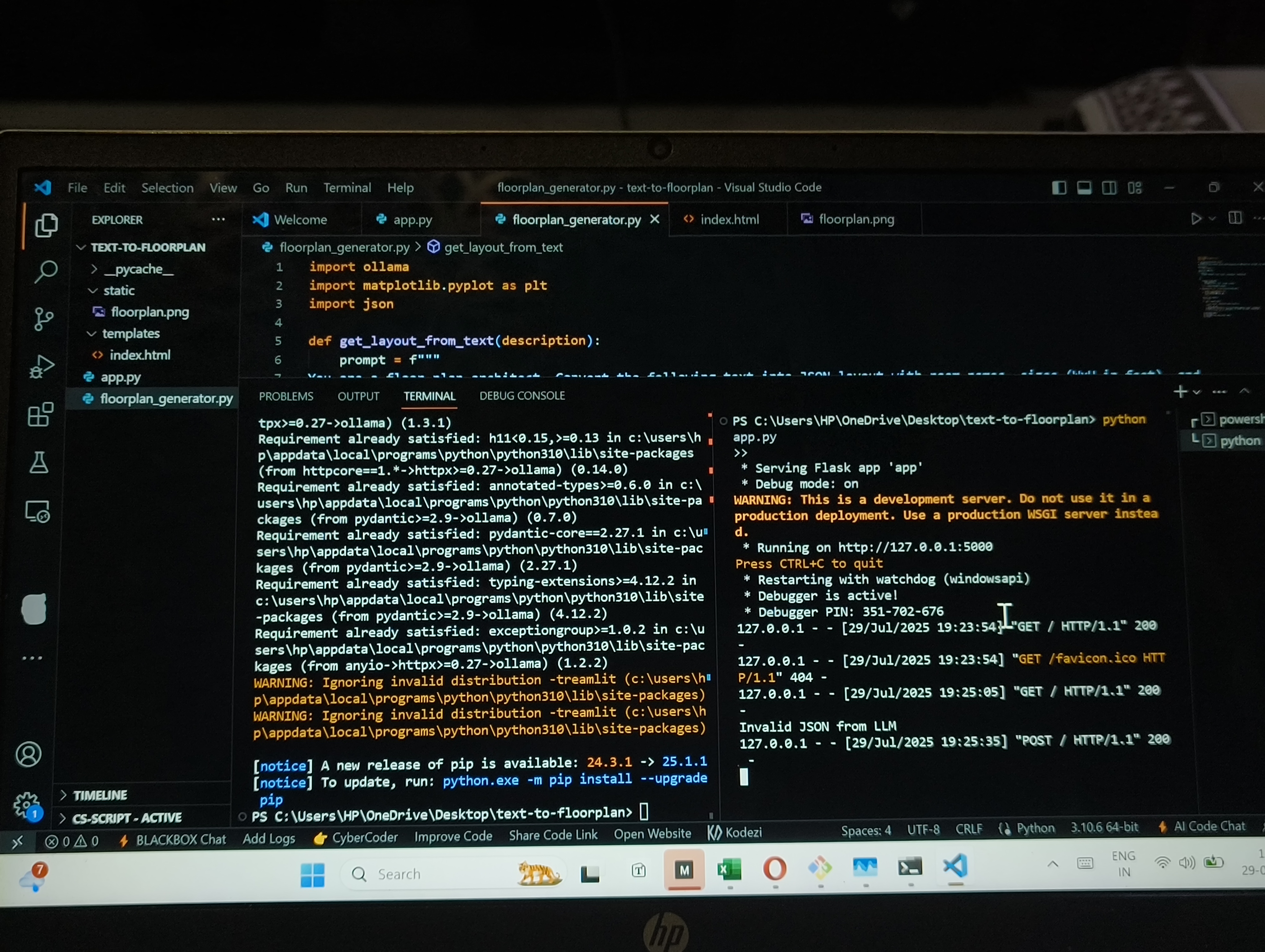

Founder Snippetz Lab... • 6m

I’m building an AI-first architectural engine that converts natural language prompts into parametric, code-compliant floor plans. It's powered by a fine-tuned Mistral LLM orchestrating a multi-model architecture — spatial parsing, geometric reasonin


AI Engineer

AI Deep Explorer | f... • 10m

Top 10 AI Research Papers Since 2015 🧠
1. Attention Is All You Need (Vaswani et al., 2017)
Impact: Introduced the Transformer architecture, revolutionizing natural language processing (NLP).
Key contribution: the self-attention mechanism, enabling models
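The attention at the core of that paper is scaled dot-product attention, softmax(QKᵀ / √d_k)·V: each query is compared with every key, the scaled scores pass through a softmax, and the resulting weights average the value vectors. A minimal single-head sketch in plain Python, assuming no masking, no learned Q/K/V projections, no multi-head split, and no batching (all omitted for brevity):

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for one head, without masking.

    q: (n_queries, d_k), k: (n_keys, d_k), v: (n_keys, d_v)
    """
    d_k = len(k[0])
    out: Matrix = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        weights = softmax(scores)
        # Attention-weighted average of the value vectors.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out


if __name__ == "__main__":
    # Three toy token embeddings used directly as Q, K and V (self-attention
    # without the learned projections a real Transformer layer would apply).
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in scaled_dot_product_attention(x, x, x):
        print([round(val, 3) for val in row])
```

The √d_k scaling keeps dot products from growing with the embedding size, which would otherwise push the softmax toward near one-hot weights.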


Rahul Agarwal

Founder | Agentic AI... • 2m

If you’re building AI agents today, here’s the reality: Calling an LLM isn’t enough anymore. Modern agents need a full system, a framework of interconnected components that help them think, reason, act, adapt, and collaborate autonomously. Here are

