Sarthak Gupta

17 | Building Doodle... • 10m

For context: I am launching an AI SaaS that aims to lower LLM subscription fees by giving users access to 15+ LLMs with performance comparable to ChatGPT models. I am now working on optimizing pricing. Which option is better? Please comment with your reasoning if possible, and follow me for more.

More like this

Swamy Gadila

Founder of Friday AI • 4m

Big News: Friday AI – Adaptive API is Coming! We’re launching Adaptive API, the world’s first real-time context scaling framework for LLMs. Today, AI wastes massive tokens on static context — chat, code, or docs all use the same window. The result?

Gopal Sharma

Python Developer | E... • 1y

India’s large language model (LLM) is expected to be ready within the next 10 months, said the Minister of Electronics and IT Ashwini Vaishnaw on Thursday. “We have created the framework, and it is being launched today. Our focus is on building AI m

Swamy Gadila

Founder of Friday AI • 2m

Adaptive Plugin: The next efficiency layer for Enterprise GenAI. LLM workloads are exploding across finance, healthcare, SaaS, telecom, and government. The hidden drain is token waste from oversized prompts, long documents, and heavy chat histories.

See More

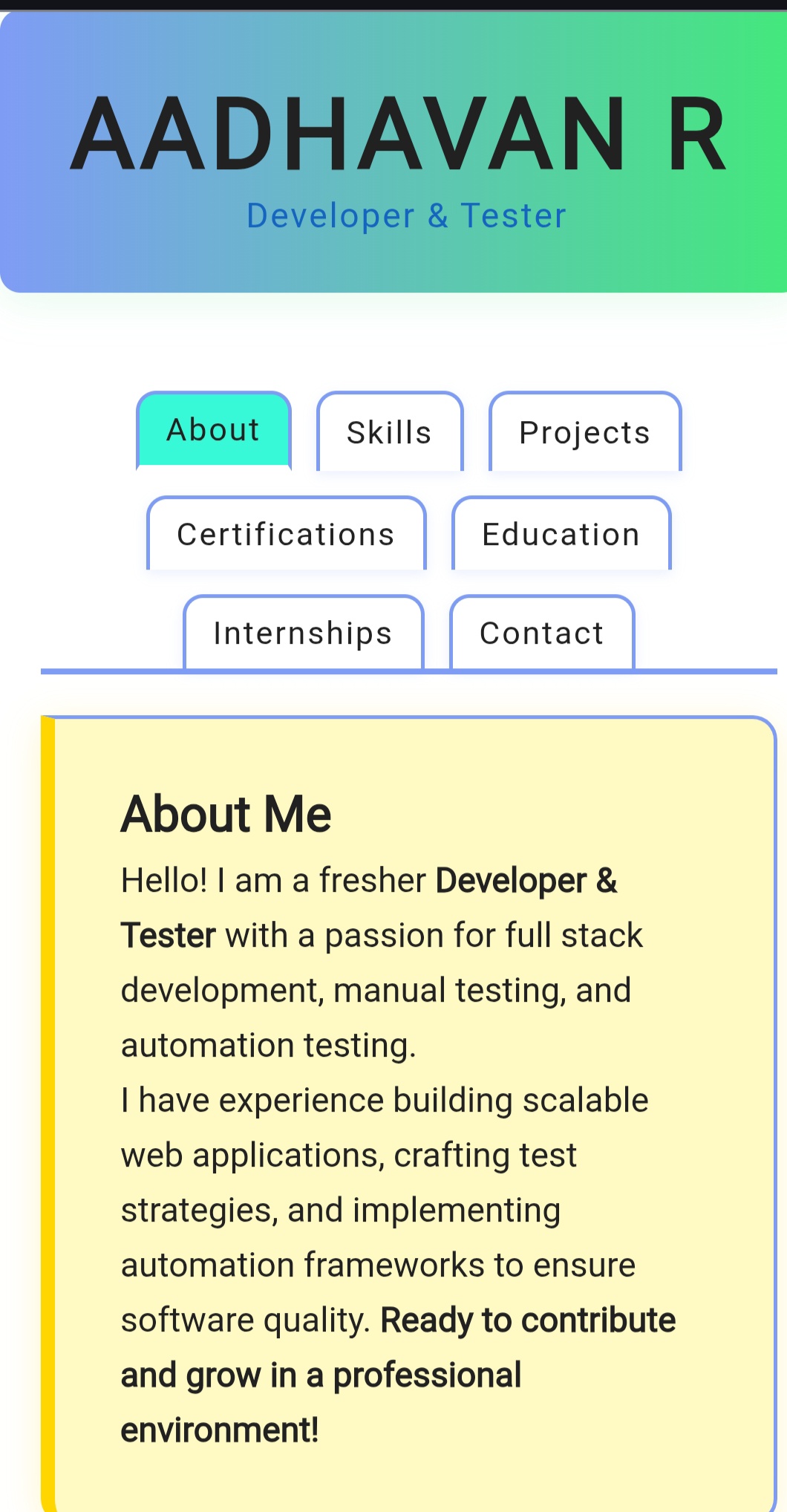

aadhavan

Dev| tester| automat... • 5m

🚀 Sharing my portfolio update! Past Goal: Built scalable applications that could handle growth with efficiency. Current Goal: Diving deep into Large Language Models (LLMs), exploring how they can shape intelligent solutions. Future Goal: Contribu

See More

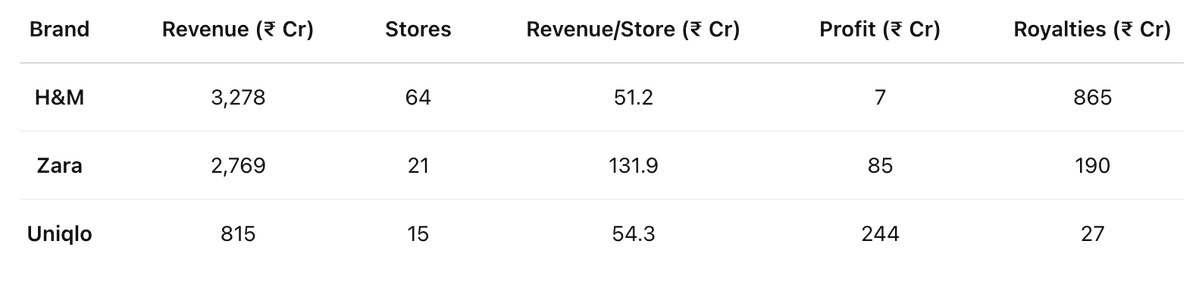

VCGuy

Believe me, it’s not... • 10m

I assumed H&M’s lower price point would translate into healthier profits. H&M may lead in total sales, but it’s shelling out 4.5× what Zara pays in royalties — ₹865 Cr vs Zara’s ₹190 Cr, eating into margins⤵️ (source - Entrackr) From what I’ve seen