
Anonymous

Hey I am on Medial • 1y

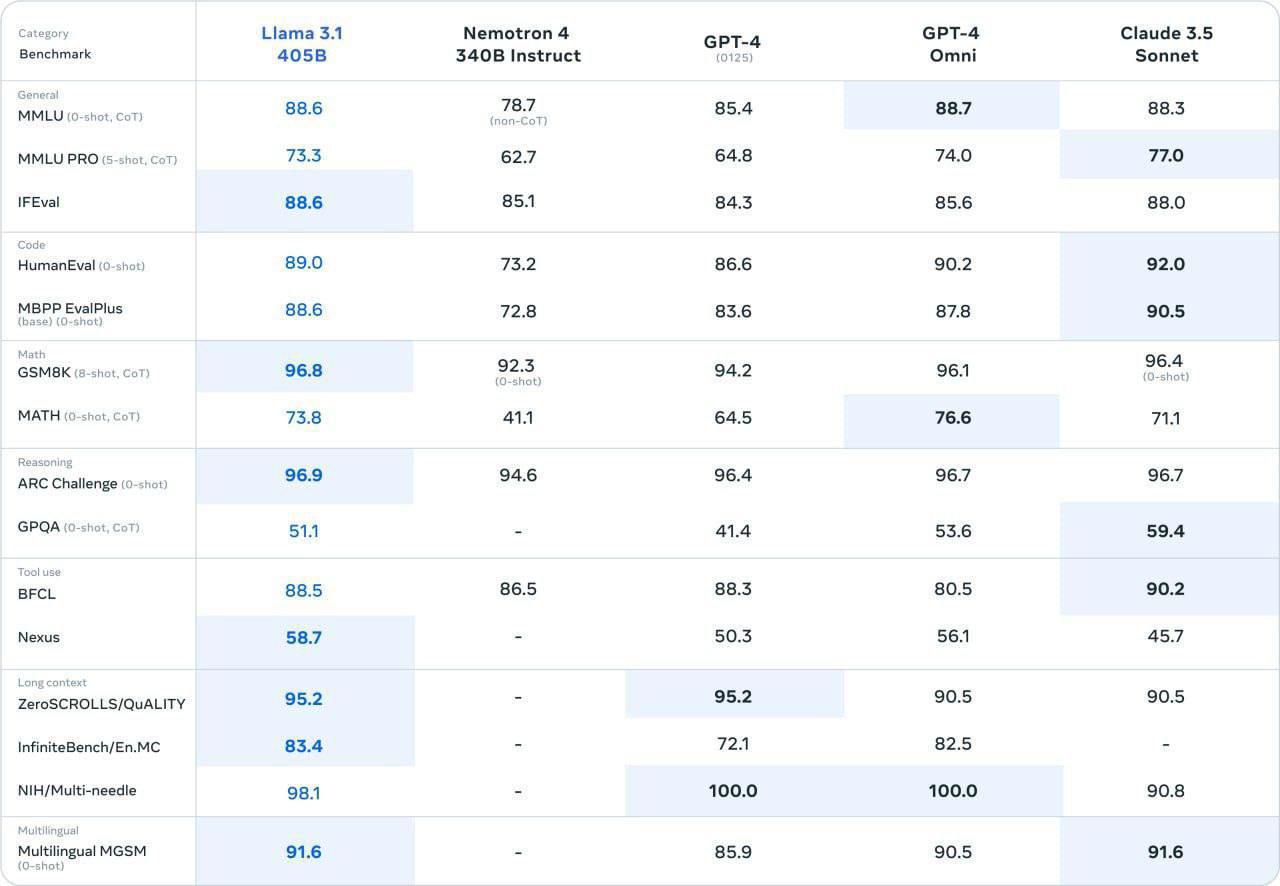

Huge announcement from Meta. Welcome Llama 3.1🔥 Here is what you need to know about it:

The new models:
- The Meta Llama 3.1 family of multilingual large language models (LLMs) is a collection of pretrained and instruction-tuned generative models in 8B, 70B, and 405B sizes (text in, text out).
- All models support a long context length (128K tokens) and are optimized for inference with grouped query attention (GQA).
- They are optimized for multilingual dialogue use cases and outperform many available open-source chat models on common industry benchmarks.
- Llama 3.1 is an auto-regressive language model with an optimized transformer architecture, aligned using supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF). Its core LLM architecture is the same dense structure as Llama 3 for text input and output.
- Tool use: the Llama 3.1 Instruct model (text) is fine-tuned for tool use, so it can generate tool calls for search, image generation, code execution, and mathematical reasoning. It also supports zero-shot tool use.
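The post only names grouped query attention (GQA). As a rough illustration of the general idea, here is a pure-Python toy sketch: several query heads share one key/value head, which shrinks the KV cache that must be kept for long (e.g. 128K-token) contexts. This is not Meta's implementation. The helper names (`grouped_query_attention`, `kv_cache_bytes`, `random_tensor`) are hypothetical, and the layer count, head counts, and `head_dim` in the demo are assumed illustrative values, not official Llama 3.1 settings.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def grouped_query_attention(q, k, v):
    """Causal grouped-query attention on nested lists (toy sketch).

    q: [n_q_heads][seq_len][head_dim]
    k, v: [n_kv_heads][seq_len][head_dim], where n_kv_heads divides n_q_heads.
    Each block of n_q_heads // n_kv_heads consecutive query heads shares one K/V head.
    """
    n_q_heads, n_kv_heads = len(q), len(k)
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_kv_heads must divide n_q_heads")
    group_size = n_q_heads // n_kv_heads
    out = []
    for h in range(n_q_heads):
        kv = h // group_size  # index of the K/V head shared by this query head
        head_out = []
        for t, q_t in enumerate(q[h]):
            d = len(q_t)
            # Causal mask: position t attends only to positions 0..t (auto-regressive).
            scores = [
                sum(a * b for a, b in zip(q_t, k[kv][s])) / math.sqrt(d)
                for s in range(t + 1)
            ]
            weights = softmax(scores)
            head_out.append(
                [sum(w * v[kv][s][j] for s, w in enumerate(weights)) for j in range(d)]
            )
        out.append(head_out)
    return out


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Size of the K and V cache for one sequence (the factor 2 covers keys + values)."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def random_tensor(rng, heads, seq_len, dim):
    """Hypothetical helper: random nested-list tensor of shape [heads][seq_len][dim]."""
    return [[[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(seq_len)]
            for _ in range(heads)]


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy sizes: 8 query heads share 2 K/V heads (groups of 4).
    q = random_tensor(rng, 8, 5, 4)
    k = random_tensor(rng, 2, 5, 4)
    v = random_tensor(rng, 2, 5, 4)
    out = grouped_query_attention(q, k, v)
    print(len(out), len(out[0]), len(out[0][0]))  # 8 5 4

    # Assumed illustrative config: 32 layers, head_dim 128, 128K tokens, 16-bit values.
    gqa = kv_cache_bytes(32, 8, 128, 131072)   # 8 shared K/V heads
    mha = kv_cache_bytes(32, 32, 128, 131072)  # one K/V head per query head
    print(gqa / 2**30, mha / 2**30)  # 16.0 64.0 (GiB)
```

With these assumed numbers, sharing each K/V head across 4 query heads cuts the per-sequence cache at 128K tokens from 64 GiB to 16 GiB; the actual savings depend on the real model configuration.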


More like this


Sweekar Koirala

startups, technology... • 1y

Meta has introduced the Llama 3.1 series of large language models (LLMs), featuring a top-tier model with 405 billion parameters, as well as smaller variants with 70 billion and 8 billion parameters. Meta claims that Llama 3.1 matches the performance

Kushal Jain

Founding Software En... • 1y

Excited to share a preview of the AI Prescreening Assistant I’ve been developing! This tool prescreens candidates via calls and has incredible potential in Customer Support, Sales, and Marketing. Demo Video: https://youtu.be/0sWprEl4KnE?si=M1RDm28x

Mohit Singh

19yo ✨ #developer le... • 1y

Meta, formerly Facebook, has unveiled two new open-source AI models called Llama 3 8B and Llama 3 70B, with 8 billion and 70 billion parameters respectively. 🚀 These models outperform some rivals and spark debate over open versus closed source AI de

gray man

I'm just a normal gu... • 10m

Bhavish Aggarwal-led AI unicorn Krutrim has announced that it has begun hosting Meta’s latest Llama 4 models on its cloud platform. In an official statement, Krutrim revealed that Llama 4 Scout and Llama 4 Maverick are now available for developers t

