
Account Deleted

Hey I am on Medial • 1y

Another open-source model has arrived, and it's even better than DeepSeek-V3. The Allen Institute for AI just introduced Tülu 3 (405B) 🐫, a post-trained fine-tune of Llama 3.1 405B that outperforms DeepSeek-V3.


More like this

Recommendations from Medial

Parampreet Singh

Python Developer 💻 ... • 12m

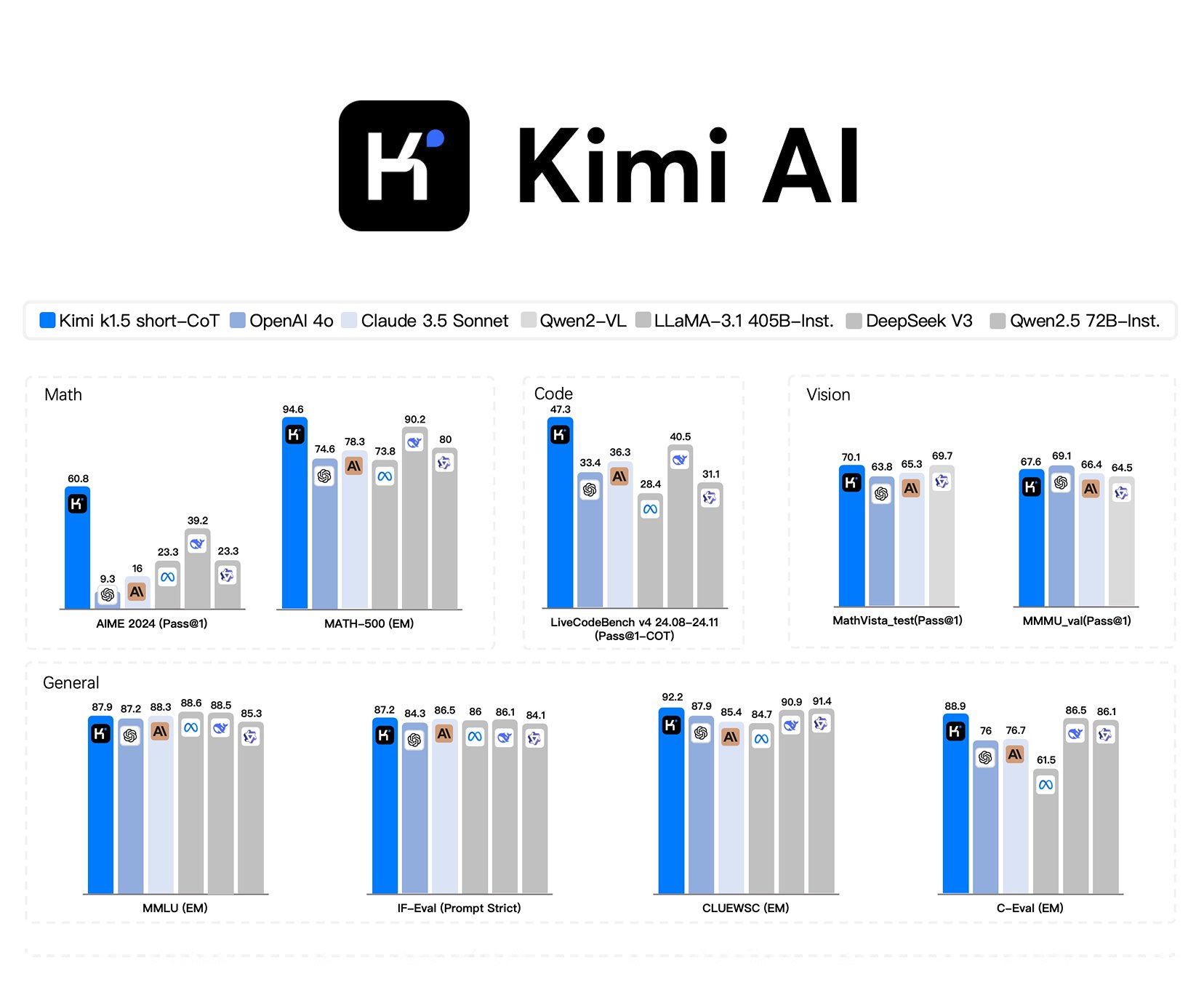

3B LLM outperforms 405B LLM 🤯 Similarly, a 7B LLM outperforms OpenAI o1 & DeepSeek-R1 🤯 🤯 LLM: Llama 3. Datasets: MATH-500 & AIME-2024. This comes from research on compute-optimal Test-Time Scaling (TTS). Recently, OpenAI o1 has shown that Test-


Sweekar Koirala

startups, technology... • 1y

Meta has introduced the Llama 3.1 series of large language models (LLMs), featuring a top-tier model with 405 billion parameters, as well as smaller variants with 70 billion and 8 billion parameters. Meta claims that Llama 3.1 matches the performance
