
lakshya sharan

Do not try, just do ... • 1y

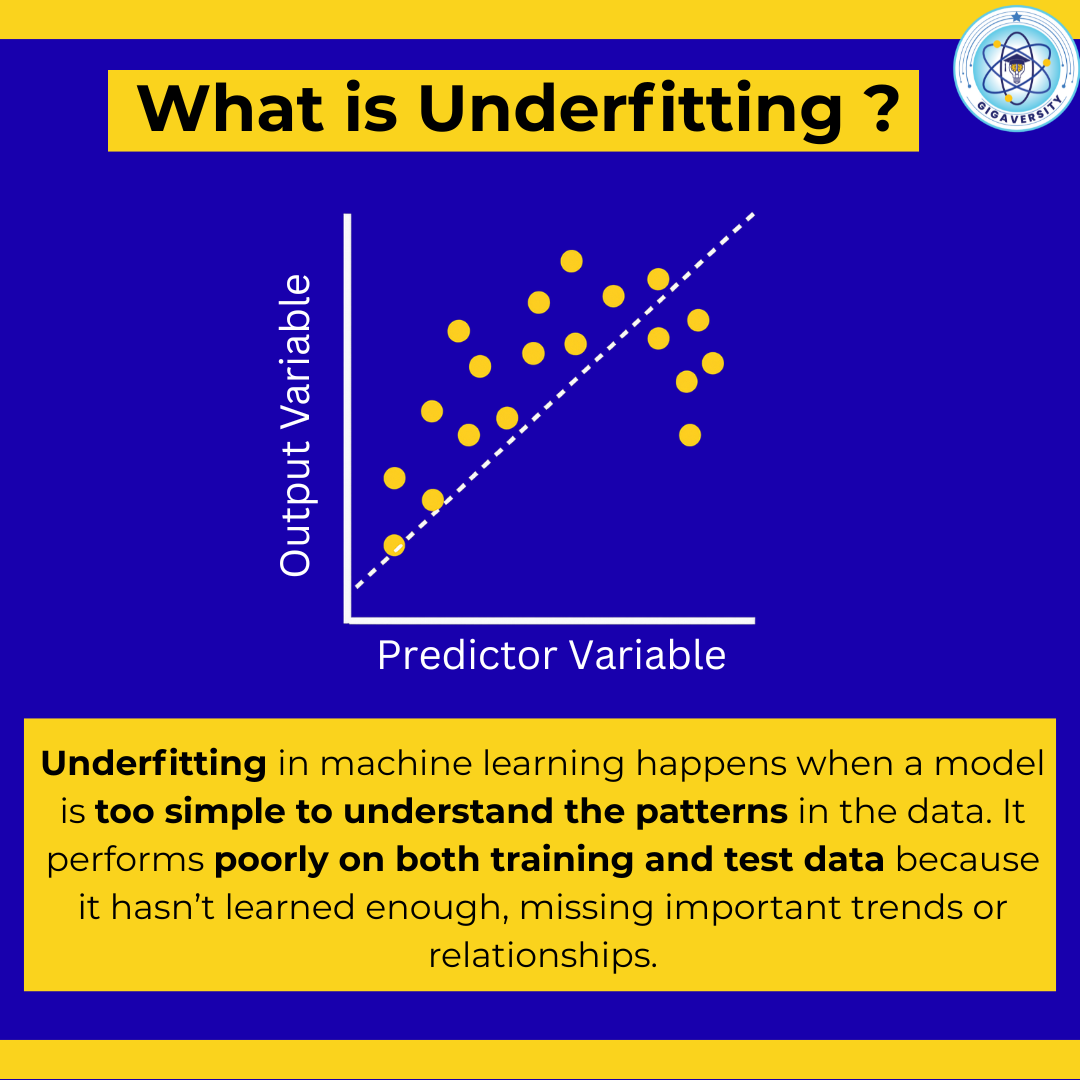

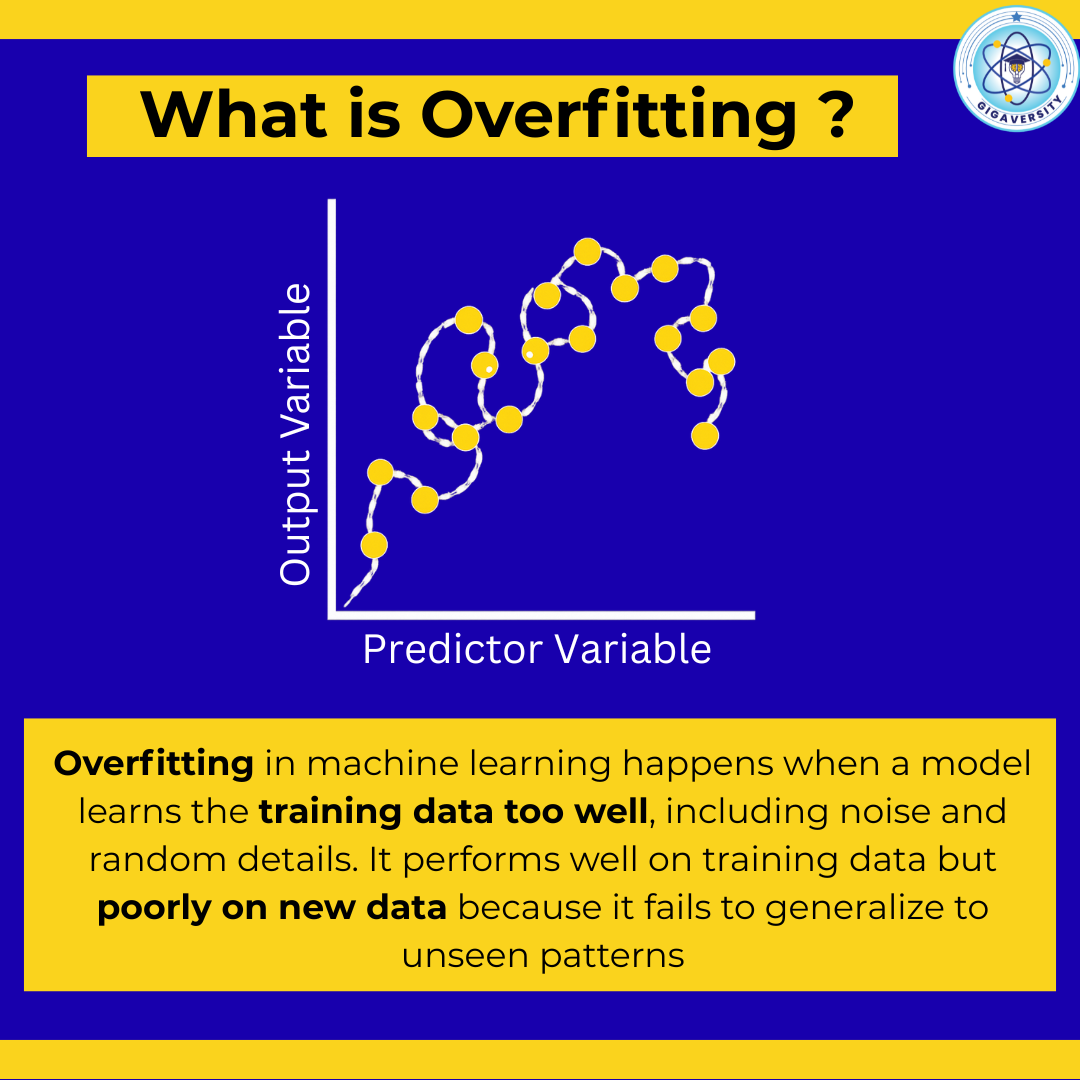

Random thought: I was wondering why ChatGPT wasn't built on an incremental learning model. My guess is that users like me could break its algorithm. Let me explain.

In the world of machine learning, models can be trained in two main ways: batch (offline) learning and incremental (online) learning. Offline training fits the model on a fixed dataset all at once, typically split into training and test sets. After this initial training phase, the model makes predictions on new data without any further updates. Online training, on the other hand, keeps updating the model as new data arrives, learning incrementally from each new data point or mini-batch. This lets the model adapt to new patterns and to changes in the data over time.

If the latter approach were adopted, someone could easily manipulate the algorithm by feeding it wrong data, like teaching it that 2+2=6, not 4.

These are my thoughts. What are yours?

#MachineLearning #DataScience
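To make the 2+2=6 worry concrete, here is a toy sketch in Python. It assumes a two-weight linear "adder" (y = w0·a + w1·b) stands in for the model, which is a huge simplification: ChatGPT is not trained like this. The function names `batch_train` and `online_update`, the learning rate, and the 50 poisoned updates are hypothetical choices for illustration. The batch model is trained once on clean data and then frozen; the online model starts from the same weights but keeps learning from whatever users send it.

```python
# Toy "adder": predicts y = w[0]*a + w[1]*b.
# Hypothetical illustration only, not how ChatGPT or any production LLM is trained.


def predict(w, a, b):
    return w[0] * a + w[1] * b


def batch_train(data, epochs=200, lr=0.01):
    """Offline/batch learning: full-batch gradient descent on a fixed dataset."""
    w = [0.0, 0.0]
    n = len(data)
    for _ in range(epochs):
        # Average gradient of the squared error 0.5 * (pred - y)^2 over the whole dataset
        g0 = sum((predict(w, a, b) - y) * a for a, b, y in data) / n
        g1 = sum((predict(w, a, b) - y) * b for a, b, y in data) / n
        w = [w[0] - lr * g0, w[1] - lr * g1]
    return w


def online_update(w, a, b, y, lr=0.01):
    """Incremental/online learning: one SGD step per incoming example."""
    err = predict(w, a, b) - y
    return [w[0] - lr * err * a, w[1] - lr * err * b]


# Clean training data: every a + b for single digits
clean = [(a, b, a + b) for a in range(10) for b in range(10)]
w_batch = batch_train(clean)

# The batch model is frozen after training; the online model keeps learning
w_online = list(w_batch)
for _ in range(50):
    # A malicious user repeatedly "teaches" that 2 + 2 = 6
    w_online = online_update(w_online, 2, 2, 6)

print(f"frozen batch model: 2+2 = {predict(w_batch, 2, 2):.2f}")
print(f"online model:       2+2 = {predict(w_online, 2, 2):.2f}")
print(f"online model:       3+5 = {predict(w_online, 3, 5):.2f}")
```

The frozen model still answers 4.00, while the online model drifts to about 5.97 for 2+2. The damage also spreads beyond the poisoned example: 3+5 comes out near 11.94, because the same shared weights were pushed toward 1.5.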

