
Gigaversity

Gigaversity.in • 9m

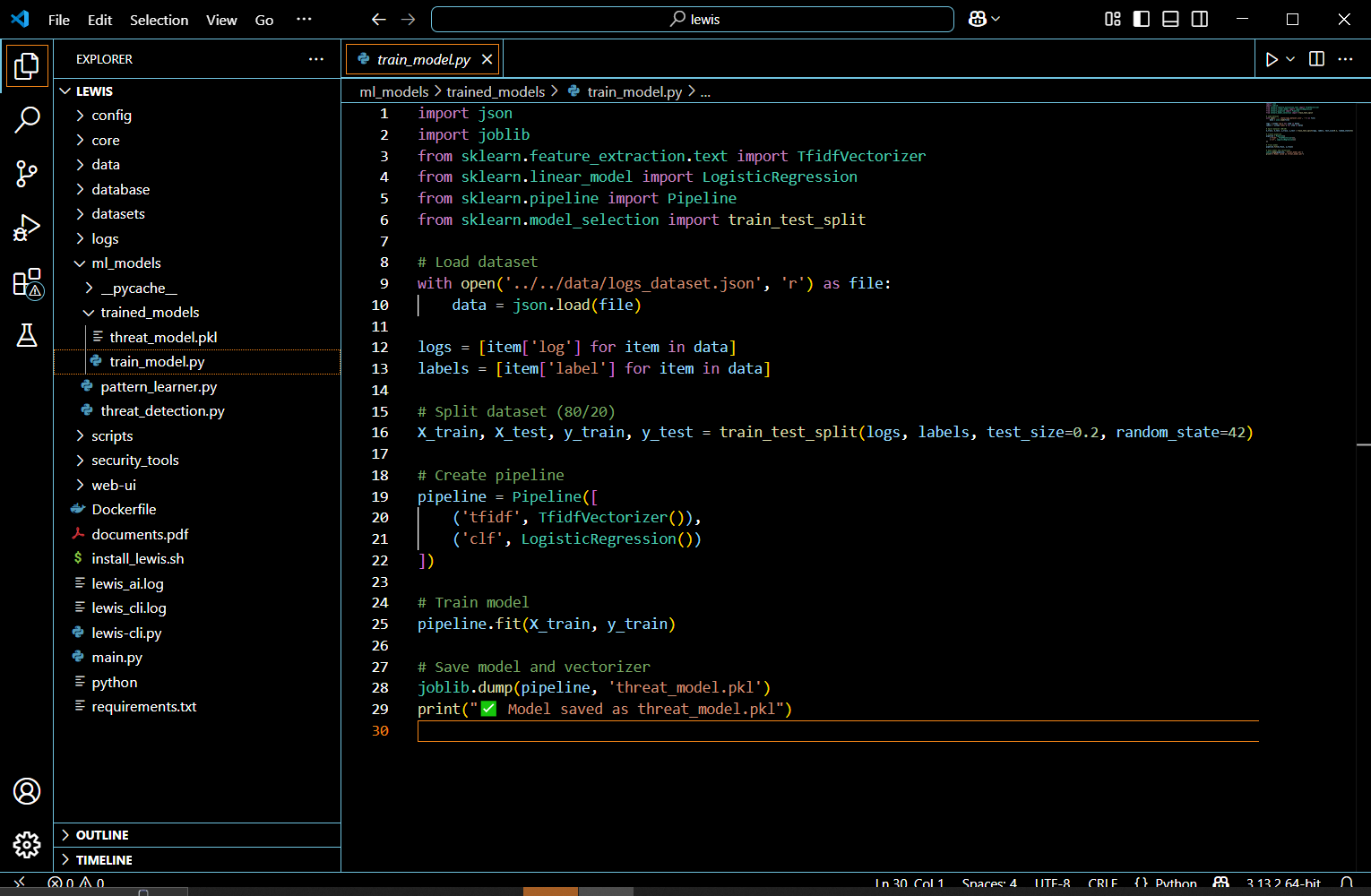

One of our recent achievements shows how optimizing code and parallelizing processes can drastically cut machine learning model training times.

The Challenge: Long Training Times

Our model training process initially took 8 hours, which slowed down iterations and limited our ability to scale quickly. We knew we needed a way to speed things up.

The Solution: Mixed-Precision & Parallelized Training

Mixed-precision training: By performing operations in 16-bit floating point (FP16) where possible instead of 32-bit (FP32), we significantly reduced memory usage and computation time without sacrificing accuracy.

Parallelization: By distributing work across multiple GPUs with distributed computing frameworks, we accelerated the training pipeline.

Parallel training using PyTorch Distributed and DataParallel modules

By using PyTorch's `torch.nn.DataParallel` and `torch.distributed`, we ran training in parallel across the available GPUs without needing complex infrastructure. This significantly reduced training time while maintaining accuracy and model performance.

The Result: Training Time Cut to 1 Hour

Through these efforts, we reduced model training time from 8 hours to just 1 hour, an eightfold speedup. This allowed faster iteration and quicker delivery of results, improving our overall productivity.

At Gigaversity, we continue to push the boundaries of efficiency in AI and machine learning. This is just one step in our journey toward innovation.

Know someone working on model training or optimization? Tag them below; we would love to hear how others are solving similar challenges.
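The mixed-precision and multi-GPU ideas described in this post can be illustrated in miniature. What follows is a toy, standard-library-only Python sketch under our own assumptions, not Gigaversity's actual pipeline. Part 1 uses `struct`'s half-precision `"e"` format to show that FP16 values take half the bytes of FP32, and that a very small gradient underflows to zero in FP16 unless it is scaled up first. The constant `SCALE` is an arbitrary illustrative value; frameworks such as PyTorch's `GradScaler` adjust the scale dynamically instead. Part 2 simulates data parallelism on one CPU: with equal-sized shards, averaging the per-worker gradients gives the same gradient as one full batch, which is why splitting a batch across GPUs does not change the math of an update. All helper names (`to_fp16`, `param_bytes`, `grad_mse`, `data_parallel_grad`) are hypothetical.

```python
import struct
from statistics import fmean

# --- Part 1: what FP16 buys you, and why loss scaling exists ---------------

def to_fp16(x: float) -> float:
    """Round-trip a float through IEEE 754 half precision ("e" struct format)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def param_bytes(n_params: int, fmt: str) -> int:
    """Bytes needed to store n_params values in the given struct format."""
    return n_params * struct.calcsize("<" + fmt)

# A tiny gradient underflows to zero in FP16 ...
tiny_grad = 1e-8
lost = to_fp16(tiny_grad)  # 0.0
# ... but survives if the loss (and hence the gradient) is scaled up before
# the FP16 step and unscaled afterwards in full precision.
SCALE = 2.0 ** 16  # hypothetical fixed scale, for illustration only
recovered = to_fp16(tiny_grad * SCALE) / SCALE

# --- Part 2: data parallelism as gradient averaging ------------------------

def grad_mse(w: float, xs, ys) -> float:
    """d/dw of mean((w*x - y)^2) for a one-parameter linear model y = w*x."""
    return fmean(2.0 * (w * x - y) * x for x, y in zip(xs, ys))

def data_parallel_grad(w: float, xs, ys, n_workers: int) -> float:
    """Split the batch into equal shards, compute one gradient per simulated
    worker, then average them (the role an all-reduce plays across GPUs)."""
    shard = len(xs) // n_workers
    assert shard * n_workers == len(xs), "toy sketch assumes equal shards"
    per_worker = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(n_workers)
    ]
    return fmean(per_worker)

if __name__ == "__main__":
    print("FP32 bytes for 1M params:", param_bytes(1_000_000, "f"))
    print("FP16 bytes for 1M params:", param_bytes(1_000_000, "e"))
    print("tiny grad in FP16:", lost, "| with loss scaling:", recovered)

    xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
    ys = [3.0 * x for x in xs]
    w = 1.0
    print("single-device grad:", grad_mse(w, xs, ys))
    print("4-worker averaged grad:", data_parallel_grad(w, xs, ys, 4))
```

In real PyTorch code, Part 1 roughly corresponds to running the forward pass under `torch.autocast` together with a `GradScaler`. Part 2 corresponds to wrapping the model in `torch.nn.DataParallel` or, for multi-process training, `torch.nn.parallel.DistributedDataParallel`, which averages gradients across processes with an all-reduce.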
