Aura

AI Specialist | Rese... • 1y

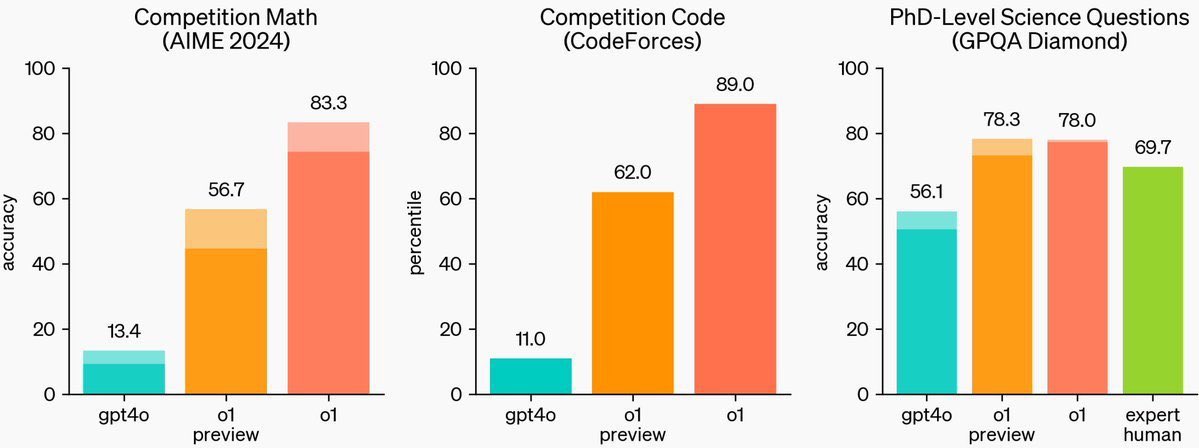

Revolutionizing AI with Inference-Time Scaling: OpenAI's o1 Model

Inference-Time Scaling: Focuses on improving performance during inference (when the model is used) rather than only during training.

Reasoning through Search: The o1 model enhances reasoning by using search methods during inference.

Shift in Approach: This marks a significant shift in how AI models are designed, moving beyond learning from data to active reasoning at runtime.

Goal: Improve complex problem-solving by searching for relevant information while the model is in use, leading to better answers.
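To make "search during inference" concrete, here is a minimal toy sketch of one common inference-time scaling pattern, best-of-N sampling with a verifier. This is an assumption chosen for illustration, not a description of how o1 works internally, which the post does not specify. The names `propose`, `score`, `best_of_n`, and the toy problem itself are hypothetical stand-ins: `propose` plays the role of a model sampling one answer, and `score` plays the role of a checker that rates it.

```python
import random

TARGET = 1764  # toy problem: find a positive integer x with x * x == TARGET


def propose(rng):
    """Hypothetical stand-in for sampling one candidate answer from a model."""
    return rng.randint(30, 50)


def score(x):
    """Hypothetical verifier: check the candidate by substitution.

    Higher is better; 0 means x * x equals TARGET exactly.
    """
    return -abs(x * x - TARGET)


def best_of_n(n, seed=0):
    """Spend more inference-time compute by sampling n candidates
    and keeping the one the verifier scores highest."""
    rng = random.Random(seed)
    candidates = [propose(rng) for _ in range(n)]
    return max(candidates, key=score)


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        answer = best_of_n(n)
        print(f"n={n:>2}  answer={answer}  score={score(answer)}")
```

With a fixed seed, the candidates drawn for a small `n` are a prefix of those drawn for a larger `n`, so the best score can only stay the same or improve as `n` grows. That is the basic trade in this sketch: no retraining, just more sampling and checking at the time the model is used.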

More like this

Recommendations from Medial

Parampreet Singh

Python Developer 💻 ... • 11m

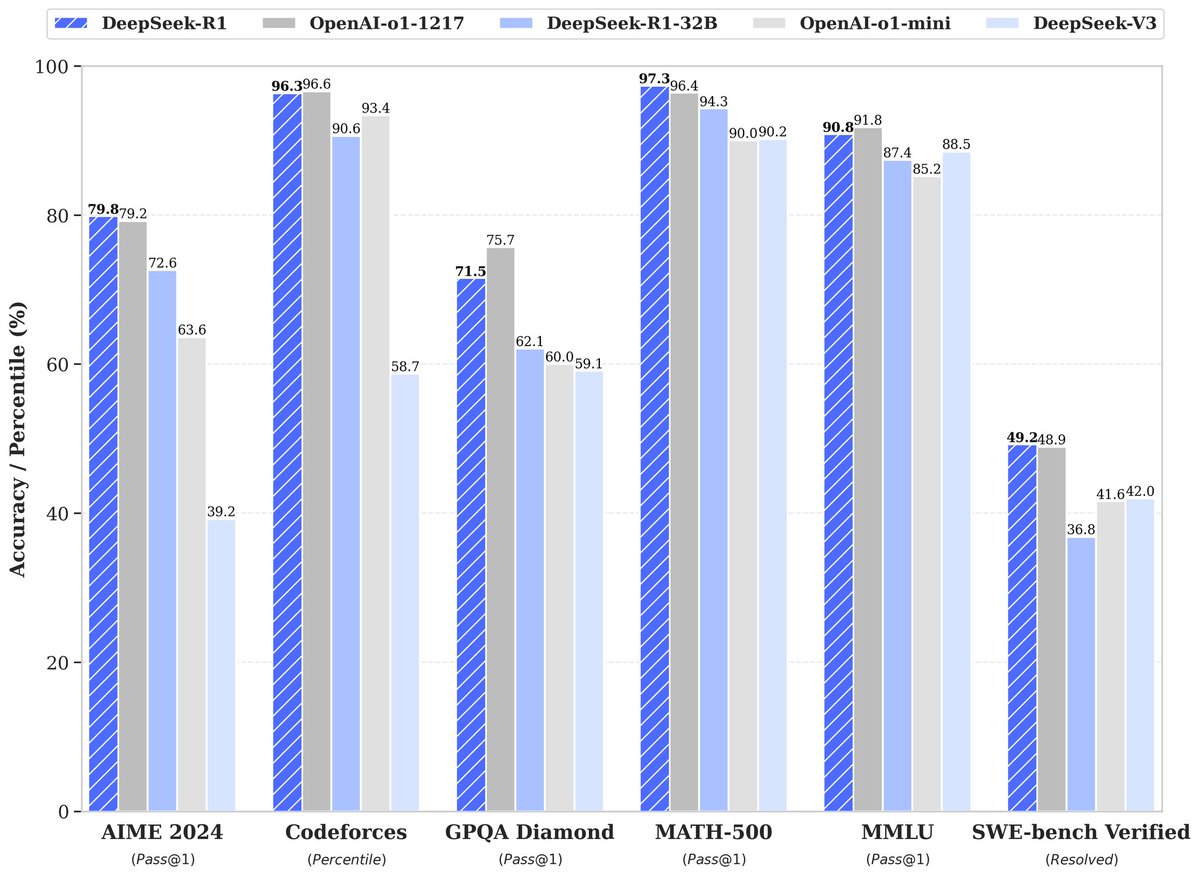

3B LLM outperforms 405B LLM 🤯 Similarly, a 7B LLM outperforms OpenAI o1 & DeepSeek-R1 🤯 🤯 LLM: Llama 3 Datasets: MATH-500 & AIME-2024 This was done in research on compute-optimal Test-Time Scaling (TTS). Recently, OpenAI o1 shows that Test-

Swastik Biswas

CTO @OctranTechnolog... • 11m

OpenAI has recently released their latest reasoning model, "o1-pro", in their developer APIs. It is estimated to be the most expensive AI model yet. OpenAI is charging $150 per million tokens (~750,000 words) fed into the model and $600 per million

AI Engineer

AI Deep Explorer | f... • 10m

LLM Post-Training: A Deep Dive into Reasoning LLMs This survey paper provides an in-depth examination of post-training methodologies in Large Language Models (LLMs) focusing on improving reasoning capabilities. While LLMs achieve strong performance

Account Deleted

Hey I am on Medial • 9m

Xiaomi has introduced MiMo, its first open-source large language model, developed by the newly formed Big Model Core Team. With 7 billion parameters, MiMo excels in mathematical reasoning and code generation, matching the performance of significantly

gray man

I'm just a normal gu... • 10m

Sentient, a San Francisco-based AI development lab backed by Peter Thiel's Founders Fund, has unveiled its open-source AI search framework, positioning its work as a response to China's DeepSeek. Sentient released its Open Deep Search (ODS) framewo
