Back

Shuvodip Ray

•

YouTube • 1y

Researchers at Meta recently presented ‘An Introduction to Vision-Language Modeling’, to help people better understand the mechanics behind mapping vision to language. The paper includes everything from how VLMs work, how to train them, and approaches to evaluate VLMs. This approach is more effective than traditional methods such as CNN-based image captioning, RNN and LSTM networks, encoder-decoder models, and object detection techniques. Traditional methods often lack the advanced capabilities of newer VLMs, such as handling complex spatial relationships, integrating diverse data types and scaling to more sophisticated tasks involving detailed contextual interpretations.

Replies (4)

More like this

Recommendations from Medial

Rahul Agarwal

Founder | Agentic AI... • 23d

Google is offering free AI learning programs and you do not need any prior experience or payment to get started. If you want to break into AI, these 10 courses are a great place to begin: 1 - Introduction to Generative AI A fast, beginner friendly

See MoreAstrologer Bhraradwaj

The Road to sucess i... • 1y

What is Shadbala? 🤔 Shadbala, meaning 'sixfold strength,' is a method to measure the power of each planet in your astrology chart. Unlike traditional methods that rely solely on planetary positions in houses and signs, Shadbala provides a comprehen

See MoreAkshay Prudhviraj

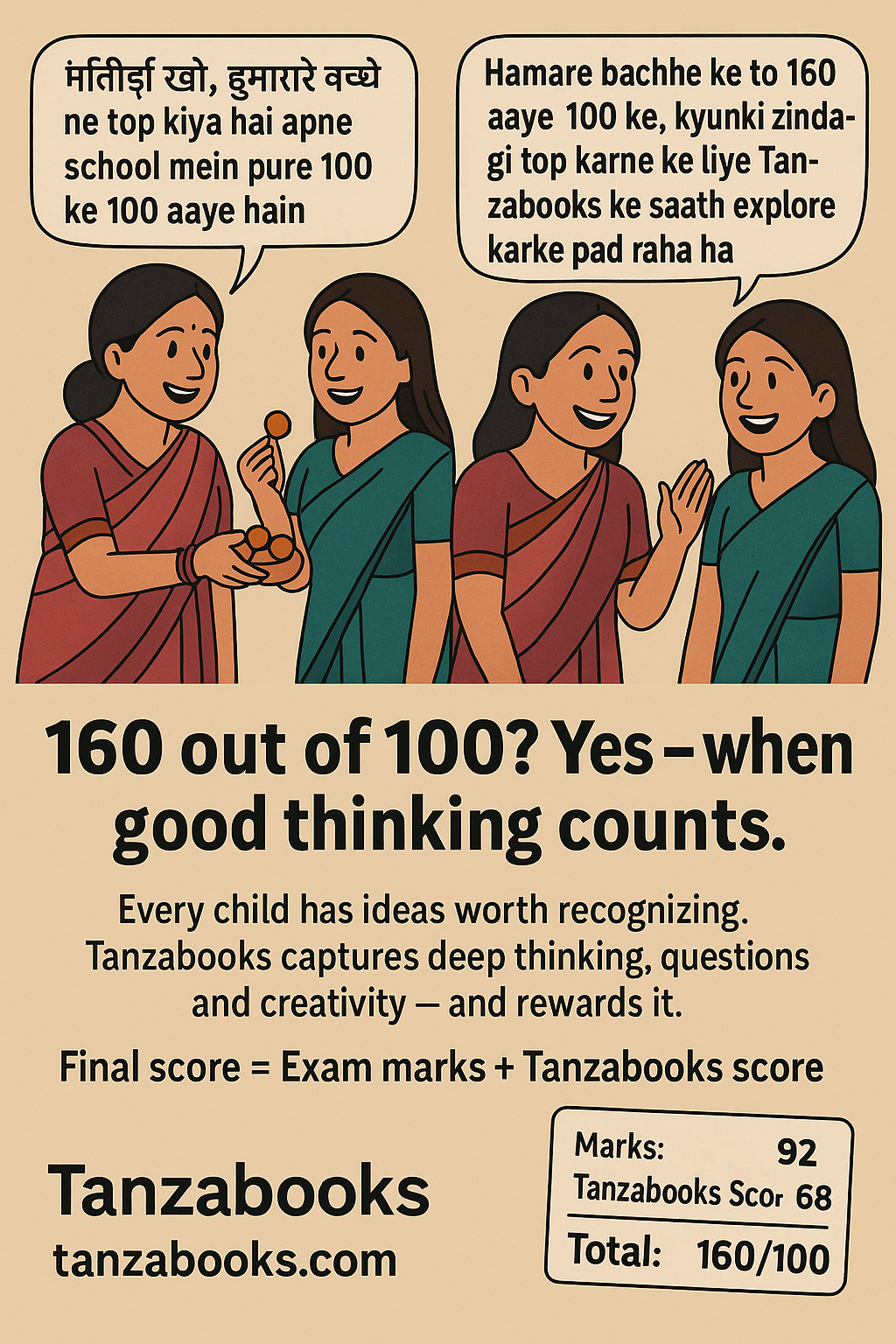

EdTech Entrepreneur ... • 7m

Rating centaurs should evolve beyond our traditional methods. I would like to know your opinions on this. Tanzabooks - Explorative learning initiative. What do you think? a). Funny, maybe a head turner b). Too much, gets ignored c). There's some meri

See More

Rajan Paswan

Building for idea gu... • 1y

Solutions to Traditional Hiring Issues Last time, we discussed issues in traditional hiring methods. Today, let's explore a popular solution: hiring challenges. Hiring Challenges! Companies now conduct competitions in coding, design, and product ma

See MoreDownload the medial app to read full posts, comements and news.

/entrackr/media/post_attachments/wp-content/uploads/2021/08/Accel-1.jpg)