DK

Ride • 1y

https://arxiv.org/pdf/2404.07143.pdf Google has dropped possibly THE most important and future-defining AI paper, in under 12 pages. Models can now have infinite context.

More like this

Recommendations from Medial

AI Engineer

AI Deep Explorer | f... • 10m

Want to learn AI the right way in 2025? Don’t just take courses. Don’t just build toy projects. Look at what’s actually being used in the real world. The most practical way to really learn AI today is to follow the models that are shaping the indus

Pulakit Bararia

Founder Snippetz Lab... • 6m

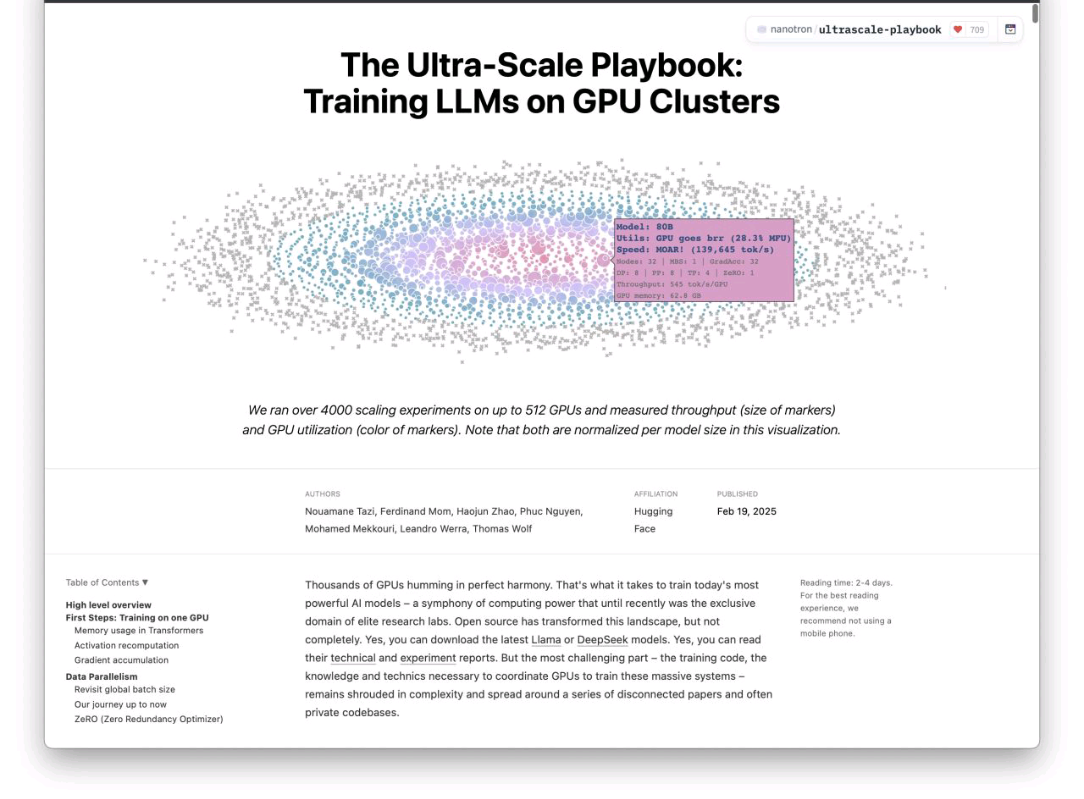

I didn’t think I’d enjoy reading 80+ pages on training AI models. But this one? I couldn’t stop. Hugging Face dropped a playbook on how they train massive models across 512 GPUs — and it’s insanely good. Not just technical stuff… it’s like reading a

Siddharth K Nair

Thatmoonemojiguy 🌝 • 8m

Apple 🍎 is planning to integrate AI-powered heart rate monitoring into AirPods 🎧 Apple's newest research suggests that AirPods could soon double as AI-powered heart monitors. In a study published on May 29, 2025, Apple's team tested six advanced A

Mohd Rihan

Student| Passionate ... • 6m

Dhruv Rathee just dropped a banger AI startup: AI Fiesta. As we know, different AI models excel at different tasks, so switching between them efficiently can be a hassle. With AI Fiesta, you can use multiple AI tools like ChatGPT, Gemini, Claude, Gr

Siddharth K Nair

Thatmoonemojiguy 🌝 • 8m

🧠🍏 Apple Just Dropped a Bomb on AI Reasoning, “The Illusion of Thinking” Apple’s latest research paper is making waves and not the usual “faster chip, better cam” kind. In The Illusion of Thinking, Apple’s AI team basically said: “Yeah… large la
