
Rahul Agarwal

Founder | Agentic AI... • 2m

Most people don't know even these basics of RAG (Retrieval-Augmented Generation). I've explained them simply below.

1. Indexing
Convert documents into a format that the AI system can search quickly later.
Step-by-step:
• Document: You start with files like PDFs, Word docs, notes, websites, etc.
• Extract → Text: The system pulls the raw text out of those documents.
• Chunks: The long text is broken into small pieces (chunks). This matters because embedding models and LLMs can only take in a limited amount of text at once, and smaller chunks also make search results more precise.
• Vectorize / Encode: Each chunk is converted into a list of numbers called a vector. These numbers represent the meaning of the text.
• Embedding Model: A special model does this text → vector conversion.
• Save in Vector Database: All vectors are stored in a vector database so they can be searched later.
________________

2. Retrieval (R)
Fetch the most relevant chunks for a user's question.
Step-by-step:
• User submits a question: Example: "What does the contract say about termination?"
• Question → Vectorized: The question is also converted into a vector using the same embedding model.
• Vector Database Search: The system compares:
   1. the question vector
   2. the stored document vectors
• Matching / Similarity Search: The database finds the chunks whose meaning is closest to the question (for example, by cosine similarity between vectors).
• Appropriate Chunks Output: Only the most relevant pieces of text are returned.
________________

3. Augmentation (A)
Enhance the user's question with relevant information.
Step-by-step:
• Relevant Chunks: The retrieved text pieces are collected.
• Merge with Source Content: These chunks are combined into one clean context block.
• Prompt Creation: The system builds a new prompt containing:
   1. the user's original question
   2. the retrieved context
• Augment the Prompt: This enriched prompt gives the AI background knowledge it would not otherwise have.
________________

4. Generation (G)
Generate a response grounded in the retrieved context.
Step-by-step:
• Enriched Prompt Sent: The prompt (question + context) is sent to the LLM.
• LLMs (OpenAI / others): The language model reads:
   1. the question
   2. the retrieved knowledge
• Final Output: The model generates a response based on the provided context instead of guessing from its training data alone.

Why is RAG powerful?
• A standard LLM relies only on its training data.
• RAG lets the LLM use your private or fresh data, and you can update its knowledge at any time by re-indexing your documents instead of retraining the model.

✅ Repost for others so they can understand the very basics of RAG.
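To make the four stages concrete, here is a minimal, dependency-free Python sketch. It is a toy under clearly stated assumptions, not how production RAG systems are built: `embed` is a hypothetical stand-in for a real embedding model (it only counts words), the "vector database" is a plain Python list, and the generation step stops at the finished prompt, which in a real system you would send to an LLM. All function names are illustrative.

```python
import math
import re
from collections import Counter

# --- 1. Indexing ---------------------------------------------------------

def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Split long text into chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector.
    Hypothetical stand-in for a real embedding model, which would return
    dense floats that capture meaning rather than exact shared words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def build_index(documents: list[str], max_words: int = 40) -> list[tuple[str, Counter]]:
    """Toy 'vector database': an in-memory list of (chunk, vector) pairs."""
    index = []
    for doc in documents:
        for chunk in chunk_text(doc, max_words):
            index.append((chunk, embed(chunk)))
    return index

# --- 2. Retrieval --------------------------------------------------------

def retrieve(index: list[tuple[str, Counter]], question: str, k: int = 2) -> list[str]:
    """Embed the question with the SAME function and return the top-k chunks."""
    q_vec = embed(question)
    ranked = sorted(index, key=lambda item: cosine_similarity(q_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

# --- 3. Augmentation -----------------------------------------------------

def build_prompt(question: str, chunks: list[str]) -> str:
    """Merge the retrieved chunks into one context block, then add the question."""
    context = "\n\n".join(f"[{i + 1}] {c}" for i, c in enumerate(chunks))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

# --- 4. Generation -------------------------------------------------------
# In a real system, the prompt would now be sent to an LLM API.
# That call is omitted so the sketch stays self-contained.

if __name__ == "__main__":
    docs = [
        "Termination: either party may end this contract with 30 days of written notice.",
        "Payment: invoices are due within 15 days.",
        "Confidentiality: the supplier must keep customer data private.",
    ]
    index = build_index(docs)
    question = "What does the contract say about termination?"
    top_chunks = retrieve(index, question, k=1)
    print(build_prompt(question, top_chunks))
```

Because this toy embedding only matches exact words, a question about "termination" would miss a chunk that only says "terminate". Real embedding models are used precisely so that chunks with the same meaning but different wording still end up close together.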

Replies (4)

More like this

Recommendations from Medial

Kimiko

Startups | AI | info... • 9m

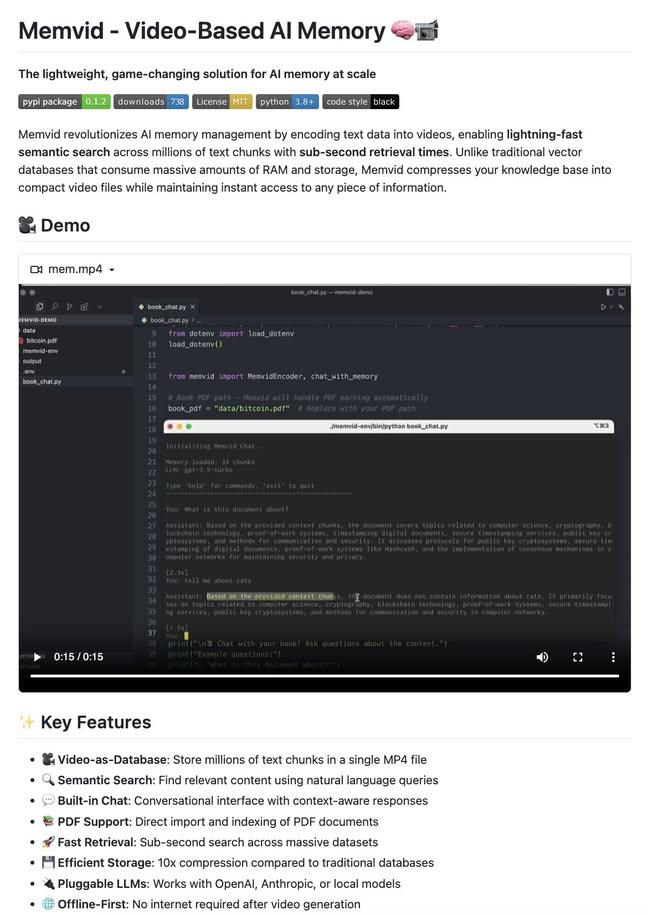

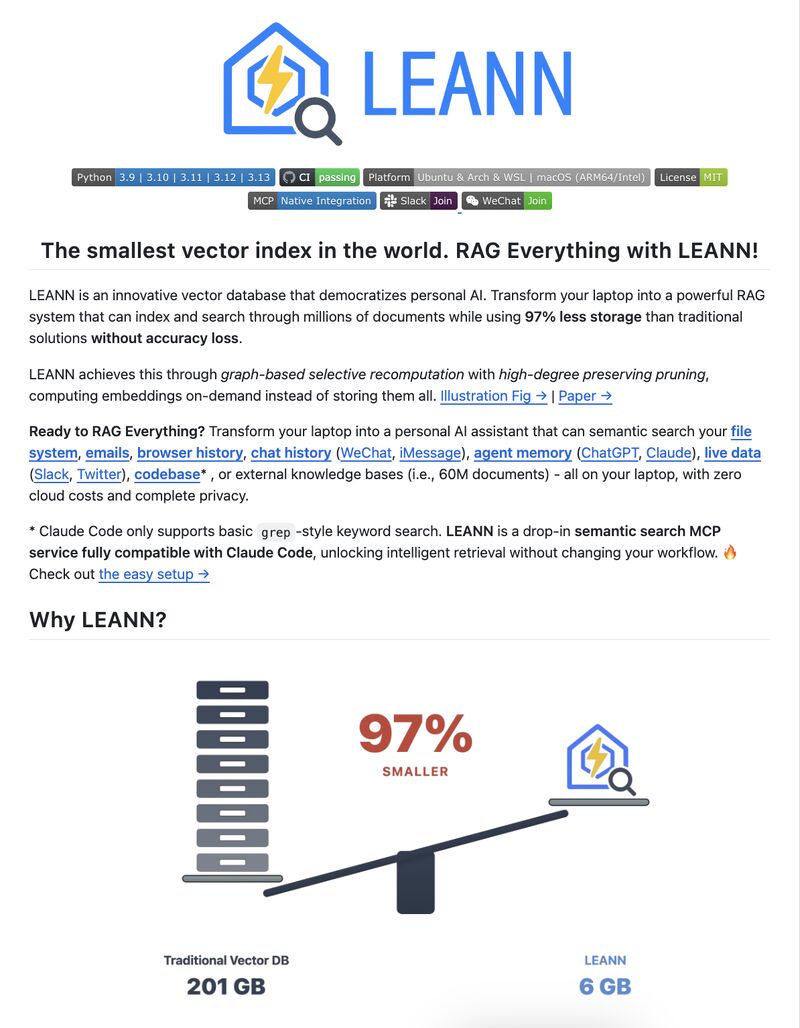

Vector databases for AI memory just got disrupted… by MP4 files?! Video as Database: Store millions of text chunks in a single MP4 file Store millions of text chunks with blazing-fast semantic search — no database required. 100% open source. Zero

See More

Krish Jaiman

Passionate about tec... • 1y

AI is reaching heights these days.But have you ever wondered how ChatGPT answers the question from recent affairs? Because see ChatGPT trained on the news of 31st January 2025 but it still answers the question relating to it .How? This is because of

See MoreSaswata Kumar Dash

Buidling FedUp| AI R... • 8m

🚨 Everyone says "RAG is dead" — but I say: It’s just been badly implemented. I’ve worked on AI systems where Retrieval-Augmented Generation (RAG) either changed the game… or completely flopped. Here’s the hard truth 👇 --- 🤯 Most teams mess up

See MoreChirotpal Das

Building an AI eco-s... • 11m

I feel pride in announcing our Made in India, Real-Time Vector Database - SwarnDB. SwarnDB is a SarthiAI initiative towards an effort to create an end to end eco-system for the future of AI. We tested SwarnDB with 100K vector records of 1536 dime

See MoreDownload the medial app to read full posts, comements and news.

/entrackr/media/post_attachments/wp-content/uploads/2021/08/Accel-1.jpg)