
Narendra

Willing to contribut... • 3m

I spent 4 weeks interviewing 30 people who tried to fine-tune LLMs. Here's what I found:

70% of them spent 2-4 weeks just preparing data.
60% copied hyperparameters from blog posts without understanding why.
53% abandoned projects because costs spiraled to $2-3K unexpectedly.

The pattern was clear: fine-tuning isn't a technical problem anymore. It's a workflow problem.

Real quotes from my interviews:

A YC founder: "We spent 3 weeks getting data in the right format. Had to redo it twice. Then wondered if it was even better than prompt engineering. Dropped it."

An ML engineer at a unicorn startup: "I tried 50 different learning rates. Never knew if mine was good or just good enough. Shipped the least-bad version because we ran out of runway."

A technical co-founder: "Labeling cost us $8K. Still had consistency issues. Had to re-label 40% of examples."

The real insight: everyone's solving the same problems from scratch. Data formatting. Hyperparameter tuning. Cost estimation. But no one's built the workflow that just... works.

Cursor did this for coding: it took VS Code's power and made it approachable for everyone. I'm building the same thing for LLM fine-tuning.

What I'm building:
→ Upload your data. Get instant quality scoring + format fixes.
→ Describe what you want in plain English. Get auto-configured hyperparameters.
→ See cost estimates upfront. No $2K surprises.
→ Train in hours, not weeks.

Reality check: this could completely fail. My validation shows 73% would pay $400-500/month, but that's 30 people saying "maybe." I need to prove people will actually pay.

Looking for 5 people to pilot this (free access).

Requirements:
→ Attempted fine-tuning in the last 1-2 months.
→ Hit roadblocks with data prep, hyperparameters, or costs.
→ 2-3 hours available to test the MVP.

Reply or DM if this sounds like your experience. Building this in public, and I will share the results, good or bad.

#MachineLearning #LLM #BuildInPublic #AI #Startups
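For anyone wondering what "cost estimates upfront" could mean in practice, here is a minimal back-of-the-envelope sketch. It is not the product's actual logic. The `RunPlan` fields, the throughput figure, and the hourly GPU price are all hypothetical placeholders you would replace with numbers measured on your own setup.

```python
from dataclasses import dataclass


@dataclass
class RunPlan:
    """Hypothetical inputs for a rough fine-tuning cost estimate."""
    num_examples: int              # training examples in the dataset
    avg_tokens_per_example: int    # average tokenized length per example
    epochs: int                    # passes over the dataset
    tokens_per_second: float       # ASSUMED aggregate training throughput
    gpu_hourly_usd: float          # ASSUMED rental price per GPU-hour
    num_gpus: int = 1              # GPUs billed for the whole run


def estimate_hours(plan: RunPlan) -> float:
    """Wall-clock hours = total tokens processed / throughput."""
    total_tokens = plan.num_examples * plan.avg_tokens_per_example * plan.epochs
    return total_tokens / plan.tokens_per_second / 3600


def estimate_cost(plan: RunPlan) -> float:
    """Cost in USD = wall-clock hours * GPUs billed * hourly price."""
    return estimate_hours(plan) * plan.num_gpus * plan.gpu_hourly_usd


def within_budget(plan: RunPlan, budget_usd: float, safety_factor: float = 1.5) -> bool:
    """Pad the estimate (retries, evals, failed runs) before comparing to the budget."""
    return estimate_cost(plan) * safety_factor <= budget_usd


if __name__ == "__main__":
    # Illustrative numbers only; not taken from the interviews.
    plan = RunPlan(
        num_examples=10_000,
        avg_tokens_per_example=500,
        epochs=3,
        tokens_per_second=2_500.0,
        gpu_hourly_usd=2.0,
    )
    print(f"Estimated hours: {estimate_hours(plan):.2f}")
    print(f"Estimated cost:  ${estimate_cost(plan):.2f}")
    print(f"Fits a $50 budget: {within_budget(plan, 50.0)}")
```

The estimate covers a single training run. In practice, repeated runs (for example, trying many learning rates) multiply the total, which is why the sketch pads the figure with a safety factor before checking it against a budget.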

More like this

Recommendations from Medial

Narendra

Willing to contribut... • 3m

I fine-tuned 3 models this week to understand why people fail. Used LLaMA-2-7B, Mistral-7B, and Phi-2. Different datasets. Different methods (full tuning vs LoRA vs QLoRA). Here's what I learned that nobody talks about: 1. Data quality > Data quan

See More

Aditya Karnam

Hey I am on Medial • 11m

"Just fine-tuned LLaMA 3.2 using Apple's MLX framework and it was a breeze! The speed and simplicity were unmatched. Here's the LoRA command I used to kick off training: ``` python lora.py \ --train \ --model 'mistralai/Mistral-7B-Instruct-v0.2' \ -

See Morenivedit jain

Hey I am on Medial • 8m

I have started a series to make it easy for people to, simply understand the latest AI trends here: https://open.substack.com/pub/jainnivedit/p/fine-tuning?utm_source=share&utm_medium=android&r=5l6i8y Think of it Finshots for AI, looking for feedbac

See MoreAI Engineer

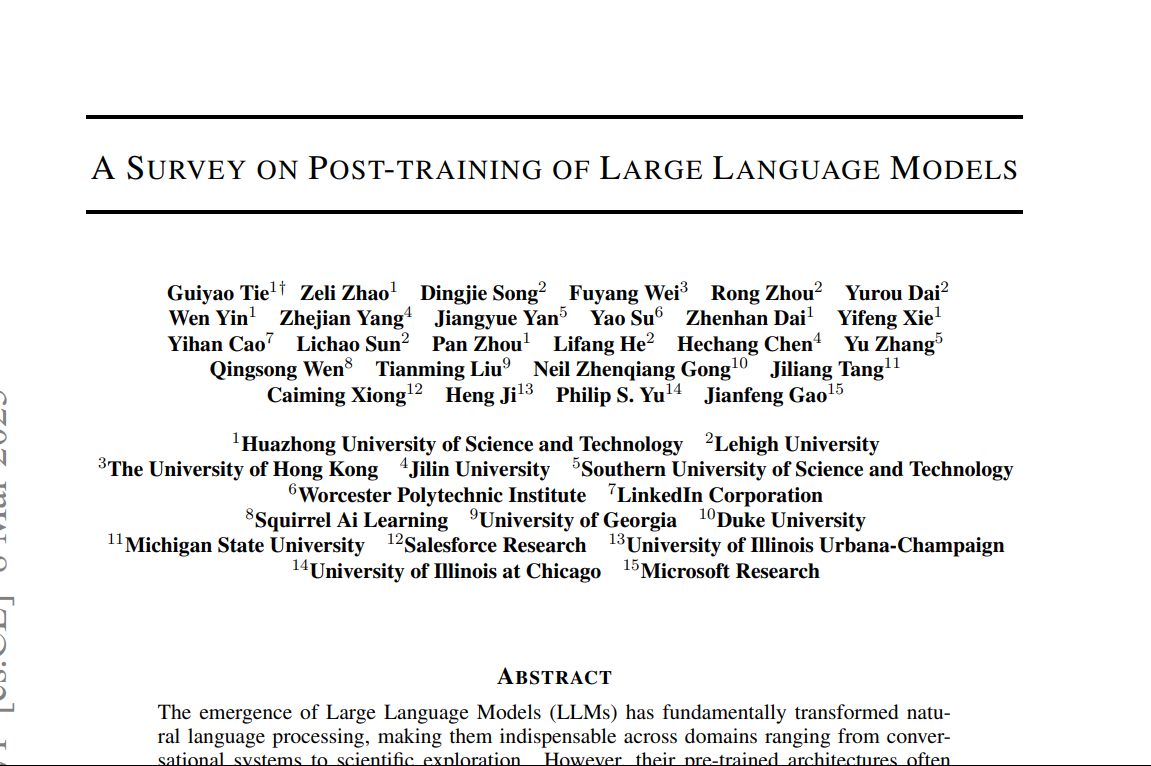

AI Deep Explorer | f... • 10m

"A Survey on Post-Training of Large Language Models" This paper systematically categorizes post-training into five major paradigms: 1. Fine-Tuning 2. Alignment 3. Reasoning Enhancement 4. Efficiency Optimization 5. Integration & Adaptation 1️⃣ Fin

See More
