
Gigaversity

Gigaversity.in • 9m

We recently integrated an AI chatbot into our website to improve user support and engagement. Initial tests showed everything was working fine: responses were fast, well-structured, and accurate. But once it went live, we started noticing something strange. The chatbot was giving answers that looked correct in format, but the information was outdated or incomplete.

What Went Wrong:
After a detailed investigation, we discovered that the issue wasn't with the chatbot model, but with the backend integration. The root cause was a subtle glitch in how the chatbot connected to our knowledge base API. This problem didn't surface during standard testing but became clear under real-world user load.
🔹 The API connection intermittently failed, especially under traffic spikes.
🔹 When it failed, the chatbot defaulted to using fallback or cached data instead of live content.
🔹 These fallback responses lacked the context and freshness needed for complex user queries.

How We Fixed It:
Once the problem was identified, we made targeted improvements to both our integration process and system behavior to ensure consistent accuracy moving forward.
🔹 We stabilized the API connection so that it handles real-time traffic without failing.
🔹 We added stricter error-handling logic so the chatbot avoids using fallback data when the API fails.

Our experience was a reminder that even minor integration glitches can lead to major communication gaps. Have you faced similar challenges while deploying AI tools on your platform? Share your thoughts in the comments below!
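
For anyone curious what "stricter error handling" can look like in practice, here is a minimal Python sketch of the idea, not our production code. It assumes a hypothetical `fetch` callable that queries the knowledge base API and raises `ConnectionError` on failure. The names `fetch_with_retry`, `answer_query`, and `KnowledgeBaseUnavailable` and the retry settings are illustrative assumptions. The call is retried with exponential backoff, and if live content still can't be fetched, the bot says so instead of silently serving cached data.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


class KnowledgeBaseUnavailable(Exception):
    """Raised when live content cannot be fetched after all retries."""


@dataclass
class Answer:
    text: str
    is_live: bool  # True only when the text came from the live API


def fetch_with_retry(
    fetch: Callable[[str], str],  # hypothetical knowledge base client
    query: str,
    attempts: int = 3,            # illustrative default, not a tuned value
    base_delay: float = 0.2,      # seconds; doubles after each failure
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call the fetcher, retrying with exponential backoff on ConnectionError."""
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return fetch(query)
        except ConnectionError as exc:
            last_error = exc
            if attempt < attempts - 1:
                sleep(base_delay * (2 ** attempt))
    raise KnowledgeBaseUnavailable(f"no live content for {query!r}") from last_error


def answer_query(fetch: Callable[[str], str], query: str, **retry_options) -> Answer:
    """Return live content, or an explicit 'unavailable' message (never stale data)."""
    try:
        text = fetch_with_retry(fetch, query, **retry_options)
        return Answer(text=text, is_live=True)
    except KnowledgeBaseUnavailable:
        # Deliberately no cached fallback here: tell the user instead.
        return Answer(
            text="Sorry, I can't reach our knowledge base right now. Please try again shortly.",
            is_live=False,
        )
```

The `is_live` flag lets the calling code log or alert on degraded answers, which is what makes this kind of failure visible under real traffic rather than only in user complaints.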

More like this

Recommendations from Medial

Account Deleted

•

Urmila Info Solution • 6m

SaaS is no longer a feature. It’s a business. 2025 founders aren’t launching just apps. They’re launching AI-powered SaaS businesses. Here’s how Opslify makes it happen: 🔹 Custom SaaS Architecture 🔹 AI-driven workflows 🔹 User-friendly dashboard

See MoreMayank Srivastava

Workflow Automation ... • 1y

Hello guys, I’m excited to announce that my company, AGenAI, is offering free custom AI chatbot integration services. Benefits include: 24/7 customer support: Your chatbot can handle queries at any time, ensuring seamless customer engagement. Imp

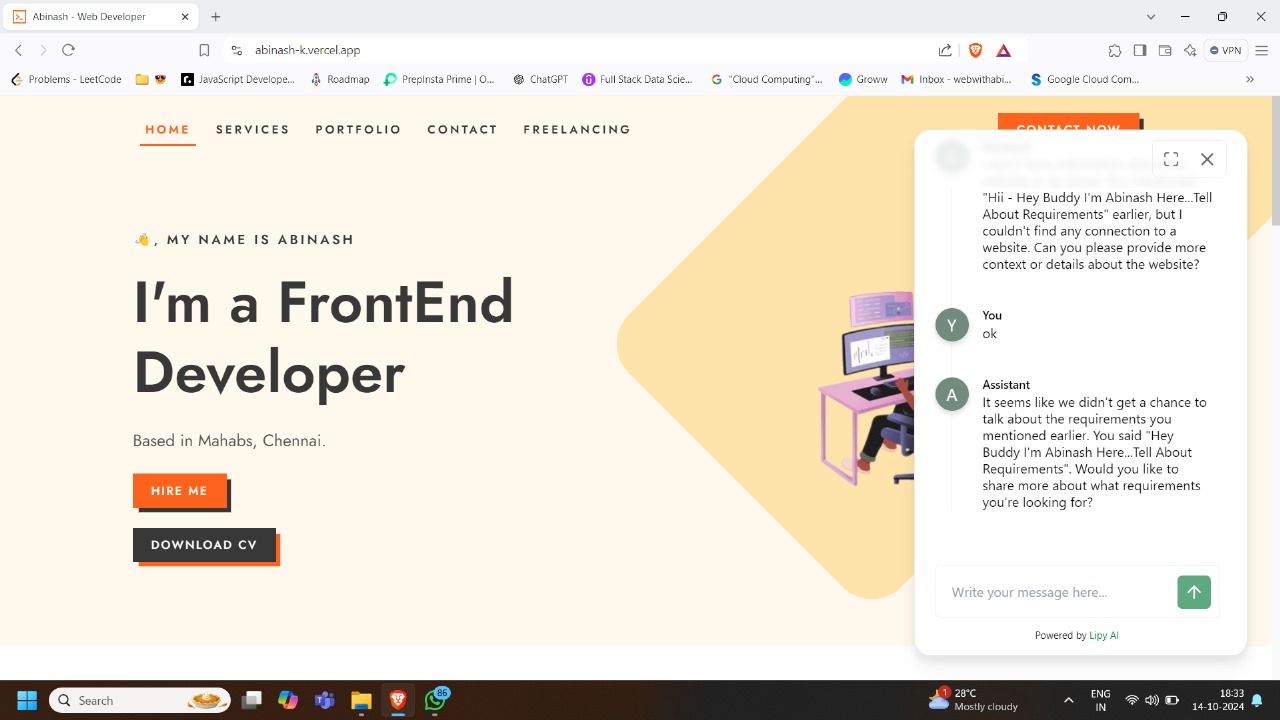

See MoreAbinash Karunanidhi

Web Developer • 1y

Hey Folks I Just Integrated Lippy.ai in my portfolio...It is really awesome with the chatbot technology...I'm really liked The Chatbot...The chatbot is not giving answer randomly from ai. We Can Train The Chatbot To Reply To The customers.And from ou

See More

Jatin Ahirwar

Full Stack Developer • 1y

📊 Day 29 of the 30-day Question Mania challenge with Hitesh Choudhary Sir! 📊 Today, I worked on creating a Social Media Dashboard, bringing together several key elements to create an interactive and user-friendly platform. Here’s what I accomplish

See MoreDownload the medial app to read full posts, comements and news.

/entrackr/media/post_attachments/wp-content/uploads/2021/08/Accel-1.jpg)