Tirush V

Infrastructure/AI en... • 1y

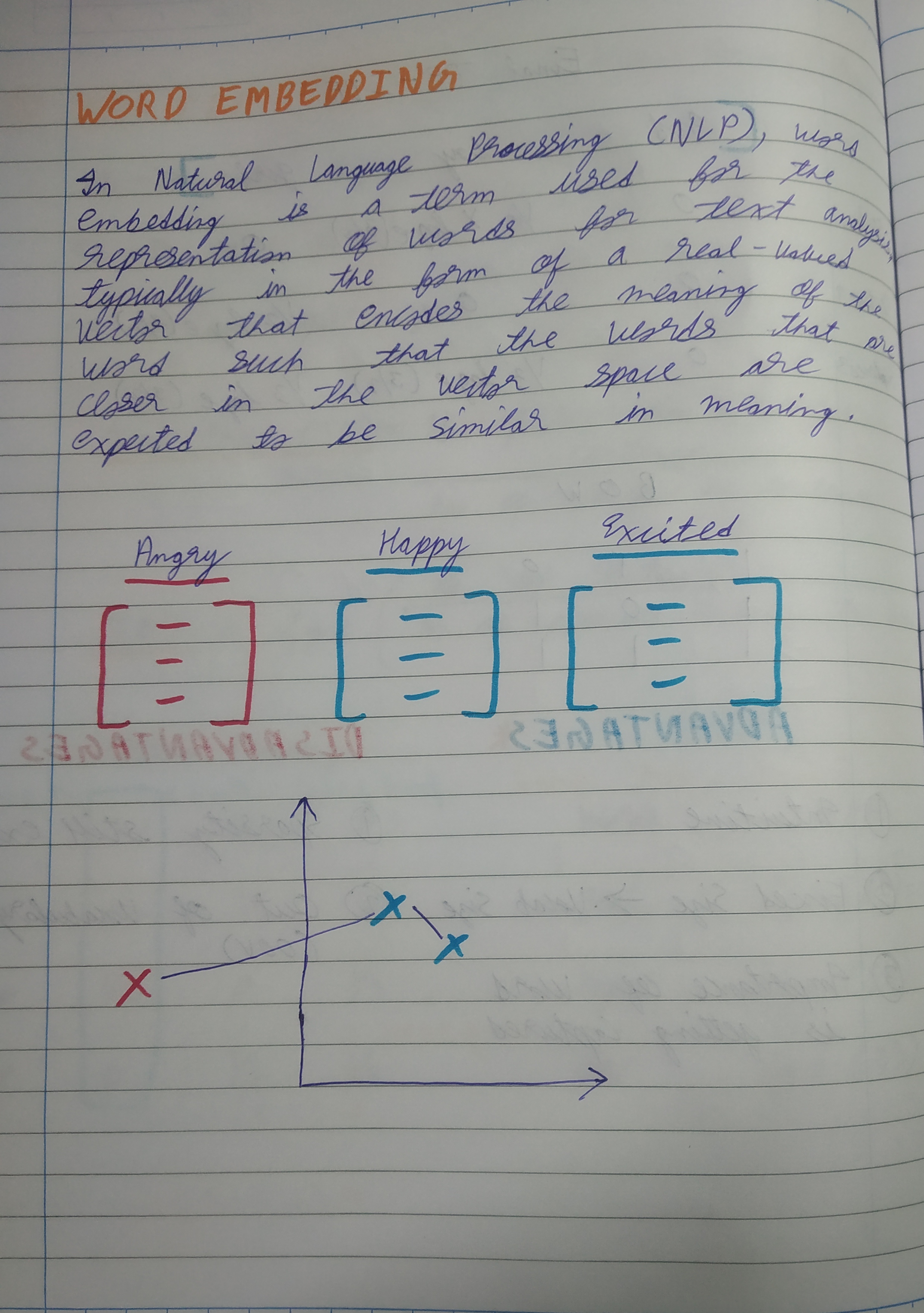

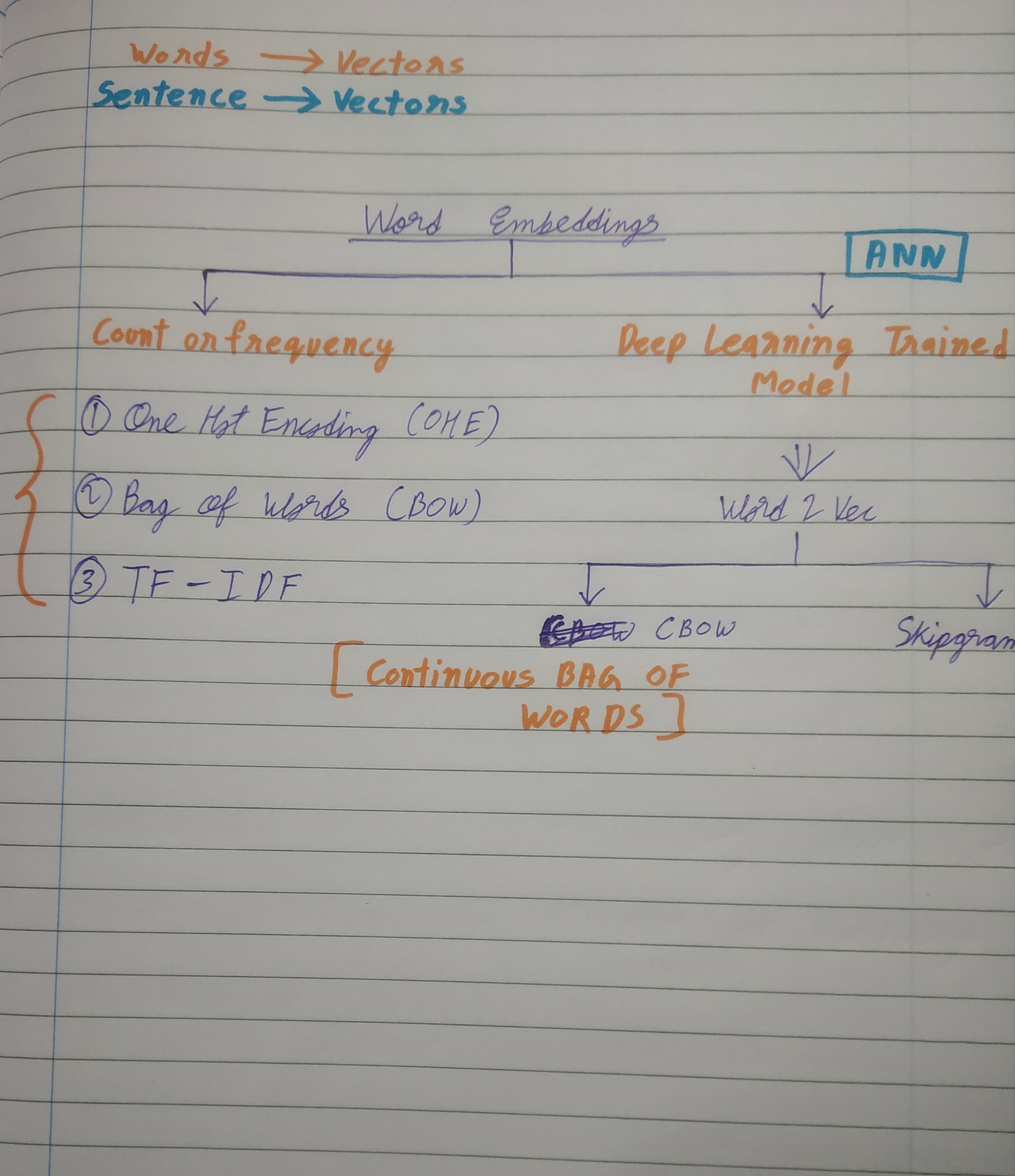

This is not fine-tuning or pre-training. It's a change to the preprocessing step. What we do now is chunk the data, create embeddings, store them in a vector DB, query it, and feed the results to an LLM for the response. That pipeline is error-prone on complex documents with tables, graph elements, etc. Chunking doesn't make sense for them, because breaking up each document's text loses content. Replacing the chunking step with another approach fixes the retrieval accuracy.
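To make the failure concrete, here is a minimal sketch of the chunk, embed, store, query flow on a tiny document that contains a table. Everything in it is an assumption for illustration: `embed` is a toy word-count stand-in for a real embedding model, the "vector DB" is a plain Python list, and the sample document and the 40-character chunk size are made up. It is not how any particular library does it.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int) -> list[str]:
    """Naive fixed-size chunking: cut every `size` characters."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks: list[str]) -> list[tuple[str, Counter]]:
    """In-memory stand-in for a vector DB: (chunk, vector) pairs."""
    return [(c, embed(c)) for c in chunks]


def retrieve(index: list[tuple[str, Counter]], query: str, k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(item[1], q), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


# Hypothetical sample document with a small table.
document = (
    "Quarterly revenue by region\n"
    "Region | Q1 | Q2\n"
    "North | 120 | 135\n"
    "South | 98 | 110\n"
)

chunks = chunk_text(document, size=40)
index = build_index(chunks)
top = retrieve(index, "South Q2 revenue", k=1)

for i, c in enumerate(chunks):
    print(f"chunk {i}: {c!r}")
print("retrieved:", repr(top[0]))
```

With these inputs the cut lands in the middle of the table header, so the retrieved chunk carries the South row but only part of the header. The LLM then sees the numbers without knowing which column is which, which is the kind of content loss described above.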
