Recommendations from Medial

Ishan Mishra

Hey I am on Medial • 1y

I really want to work on AI projects, but I'm inexperienced with company work. I used to work as a research intern at a lab and was a data science intern at another place. I really want to get into working on hf models, langchain, langgraph, fine-tuni

AI Engineer

AI Deep Explorer | f... • 10m

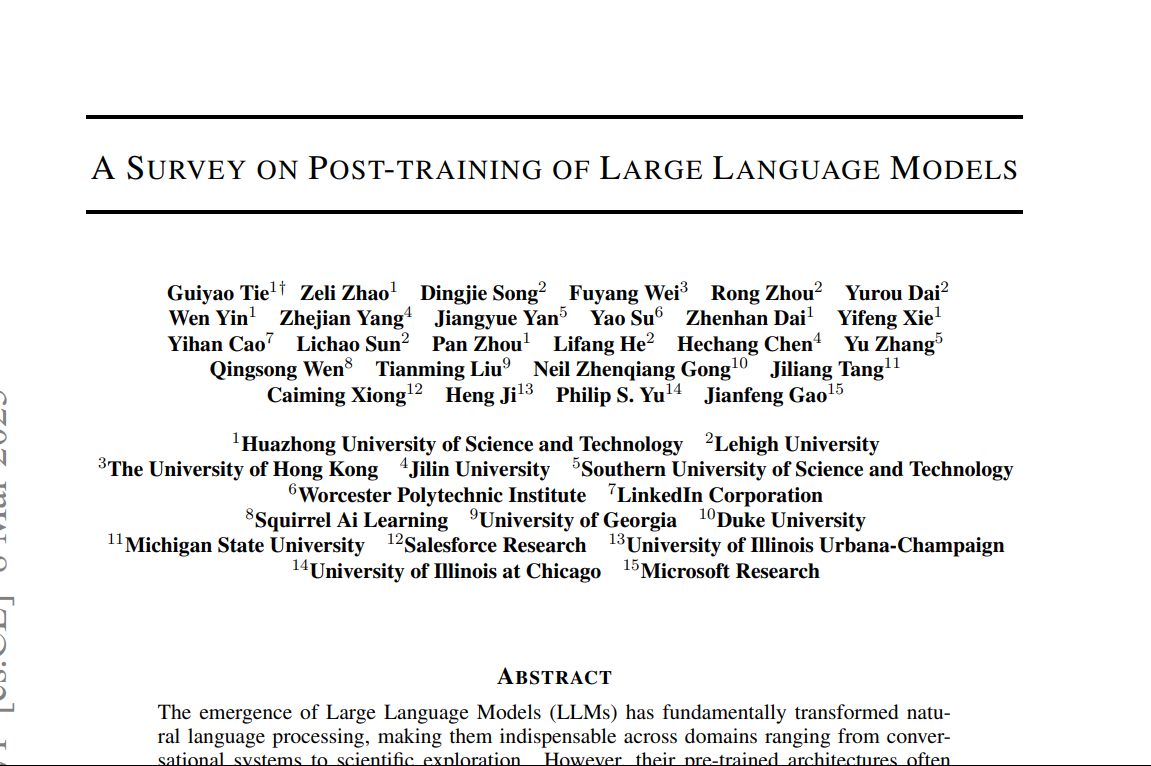

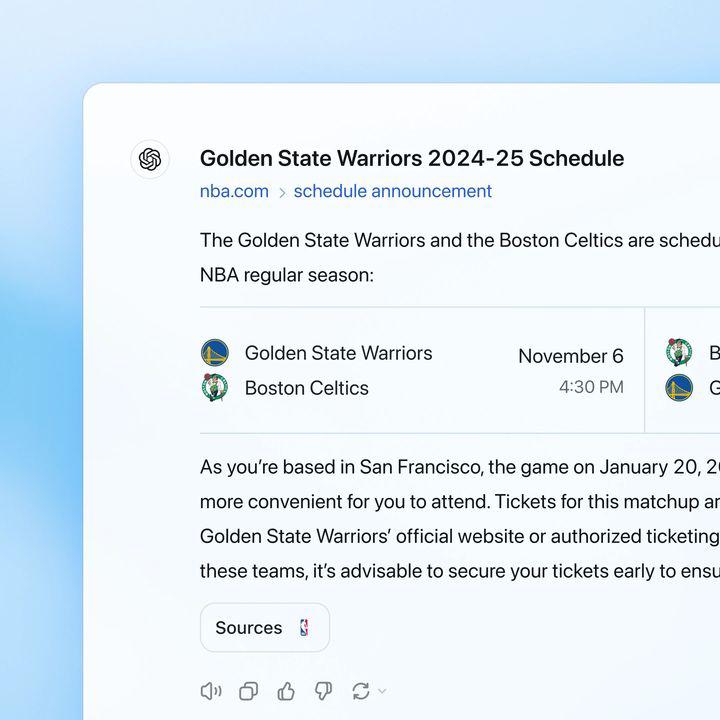

"A Survey on Post-Training of Large Language Models" This paper systematically categorizes post-training into five major paradigms: 1. Fine-Tuning 2. Alignment 3. Reasoning Enhancement 4. Efficiency Optimization 5. Integration & Adaptation 1️⃣ Fin

Jainil Prajapati

Turning dreams into ... • 12m

India should focus on fine-tuning existing AI models and building applications rather than investing heavily in foundational models or AI chips, says Groq CEO Jonathan Ross. Is this the right strategy for India to lead in AI innovation? Thoughts?

Mohammed Zaid

building hatchup.ai • 8m

OpenAI researchers have discovered hidden features within AI models that correspond to misaligned "personas," revealing that fine-tuning models on incorrect information in one area can trigger broader unethical behaviors through what they call "emerg
