
Jeet Sarkar

Technology, Developm... • 1y

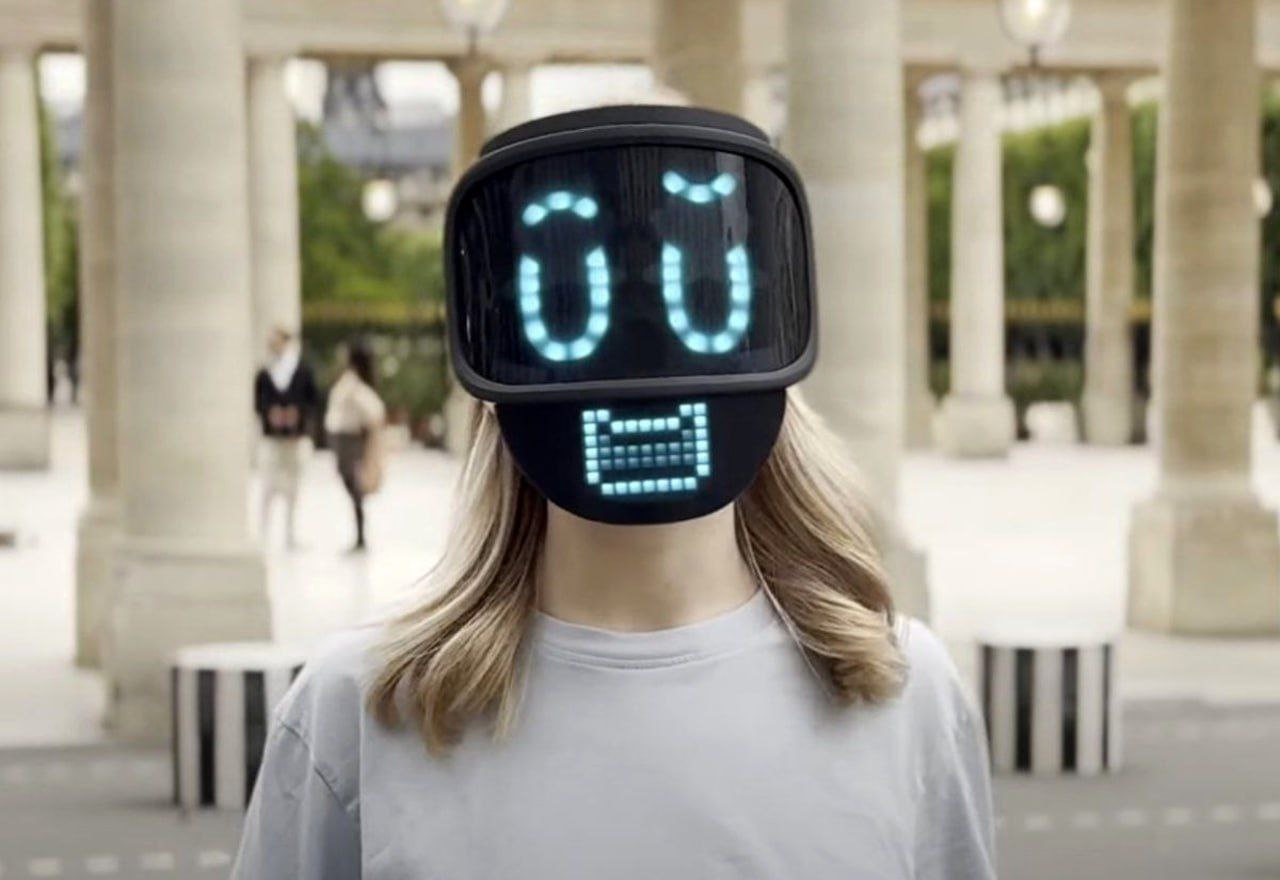

Microsoft recently revealed VASA-1, an impressive generative AI model that can turn a single still photo into a believable video. That's fuckin scary.

Here is a simple explanation of how it works: VASA-1 takes a still image and an audio track and generates a video in which the person’s lips and facial expressions move in sync with the audio. The model is trained on a large collection of talking-face videos that capture a wide range of facial expressions. This training helps VASA-1 learn how different expressions correspond to the sounds and words in the audio. The animation covers not just the lips but also subtle facial movements and even head motion, which add life to the video.

Now watch the prototype here: https://twitter.com/minchoi/status/1780792793079632130?t=1gAF5ob7Gp6H_QCMNsIMlw&s=19

And share your views on security, the advancement of this product, and how many animators are gonna lose their jobs.

