Devarsh Ukani

Co-Founder @INDI • 1y

We haven't yet mastered fine-tuning small models, and quality data for fine-tuning is also in short supply. We are working on improving the model.

More like this

Recommendations from Medial

Jainil Prajapati

Turning dreams into ... • 11m

India should focus on fine-tuning existing AI models and building applications rather than investing heavily in foundational models or AI chips, says Groq CEO Jonathan Ross. Is this the right strategy for India to lead in AI innovation? Thoughts?

Aditya Karnam

Hey I am on Medial • 10m

"Just fine-tuned LLaMA 3.2 using Apple's MLX framework and it was a breeze! The speed and simplicity were unmatched. Here's the LoRA command I used to kick off training: ``` python lora.py \ --train \ --model 'mistralai/Mistral-7B-Instruct-v0.2' \ -

Mohammed Zaid

building hatchup.ai • 7m

OpenAI researchers have discovered hidden features within AI models that correspond to misaligned "personas," revealing that fine-tuning models on incorrect information in one area can trigger broader unethical behaviors through what they call "emerg

AI Engineer

AI Deep Explorer | f... • 10m

"A Survey on Post-Training of Large Language Models" This paper systematically categorizes post-training into five major paradigms: 1. Fine-Tuning 2. Alignment 3. Reasoning Enhancement 4. Efficiency Optimization 5. Integration & Adaptation 1️⃣ Fin

Prabhjot Singh

@Techentia MERN & Ne... • 3m

Day 12 of 60 Explored retargeting strategies in Meta Ads, learned about link-building techniques in SEO, and worked on improving website content flow. Every concept mastered today builds a stronger skill set for tomorrow. #Day12 #60DaysChallenge #D

Narendra

Willing to contribut... • 3m

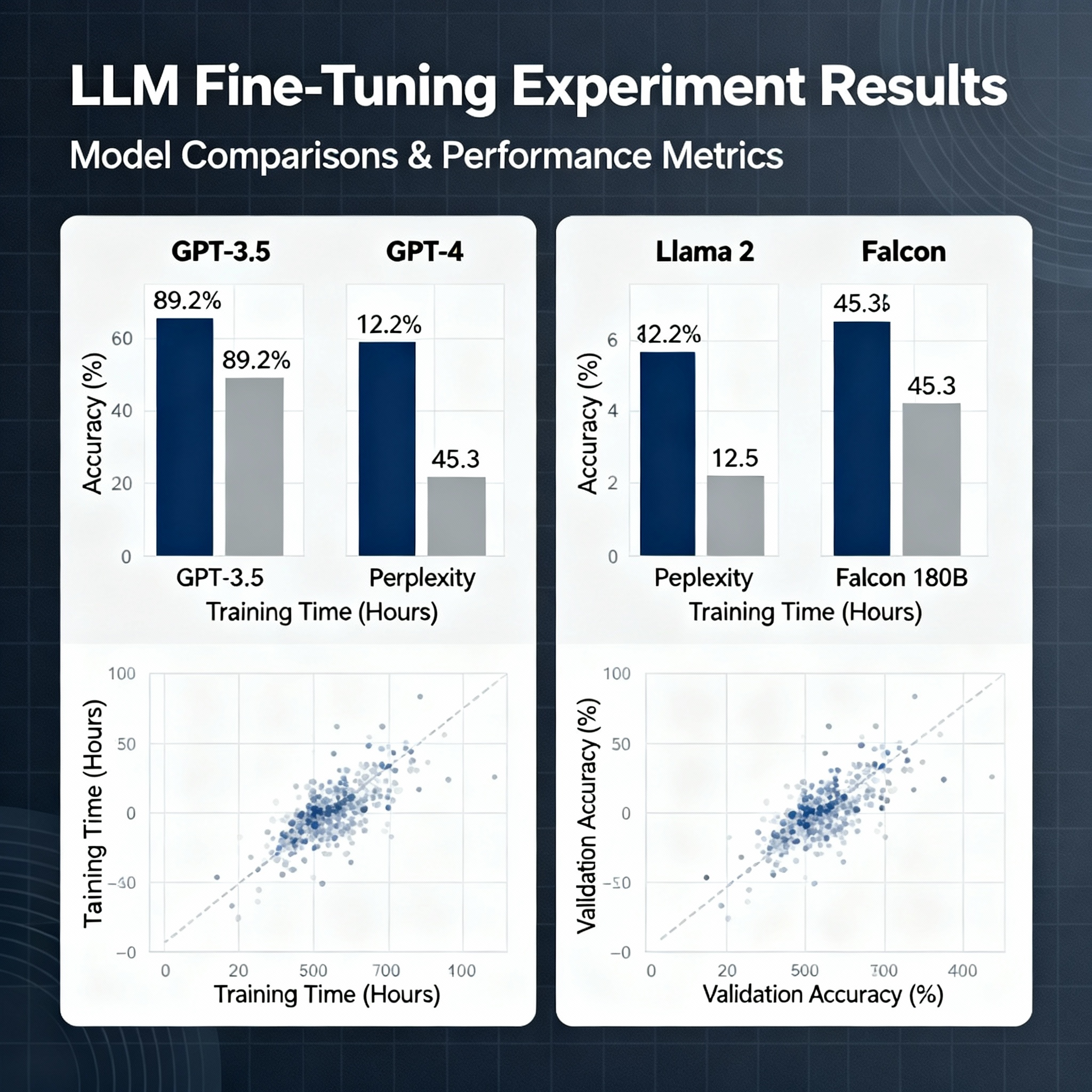

I fine-tuned 3 models this week to understand why people fail. Used LLaMA-2-7B, Mistral-7B, and Phi-2. Different datasets. Different methods (full tuning vs LoRA vs QLoRA). Here's what I learned that nobody talks about: 1. Data quality > Data quan
