
Naman Rathi

Student of Computer ... • 5m

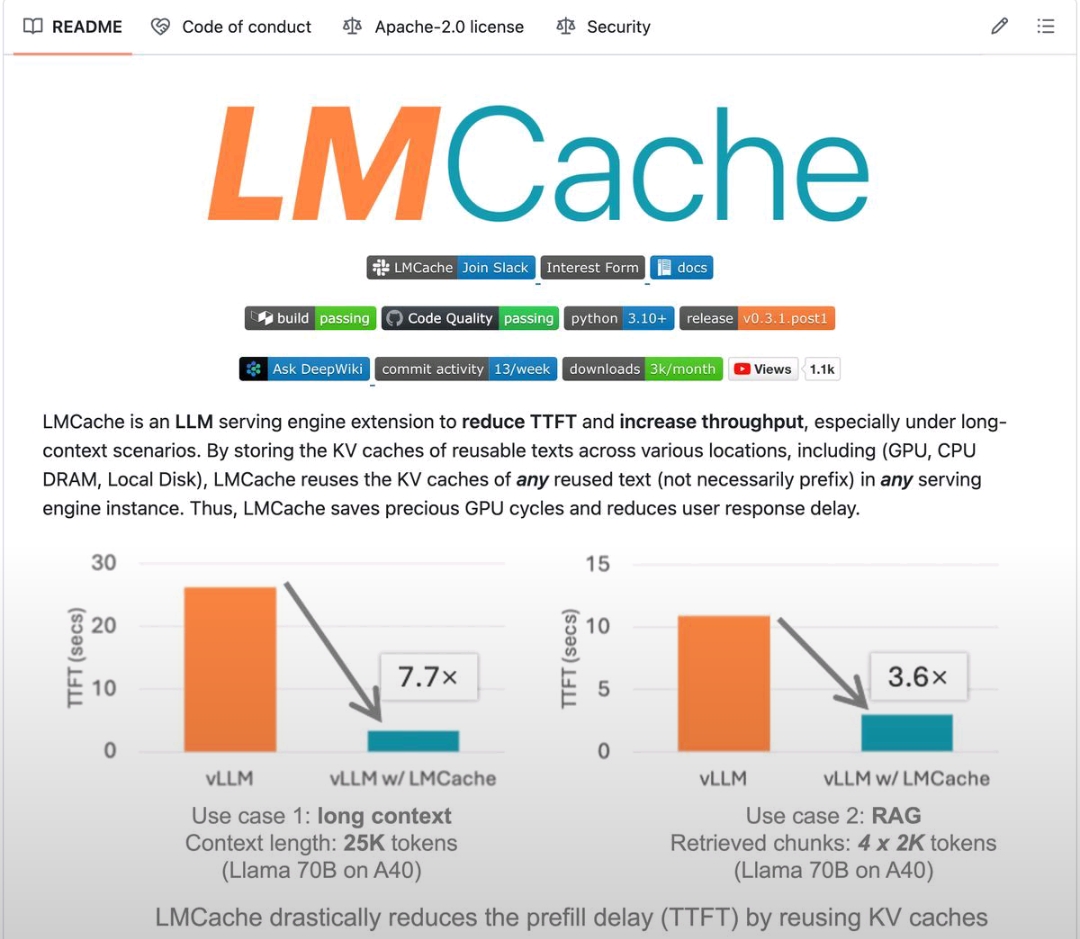

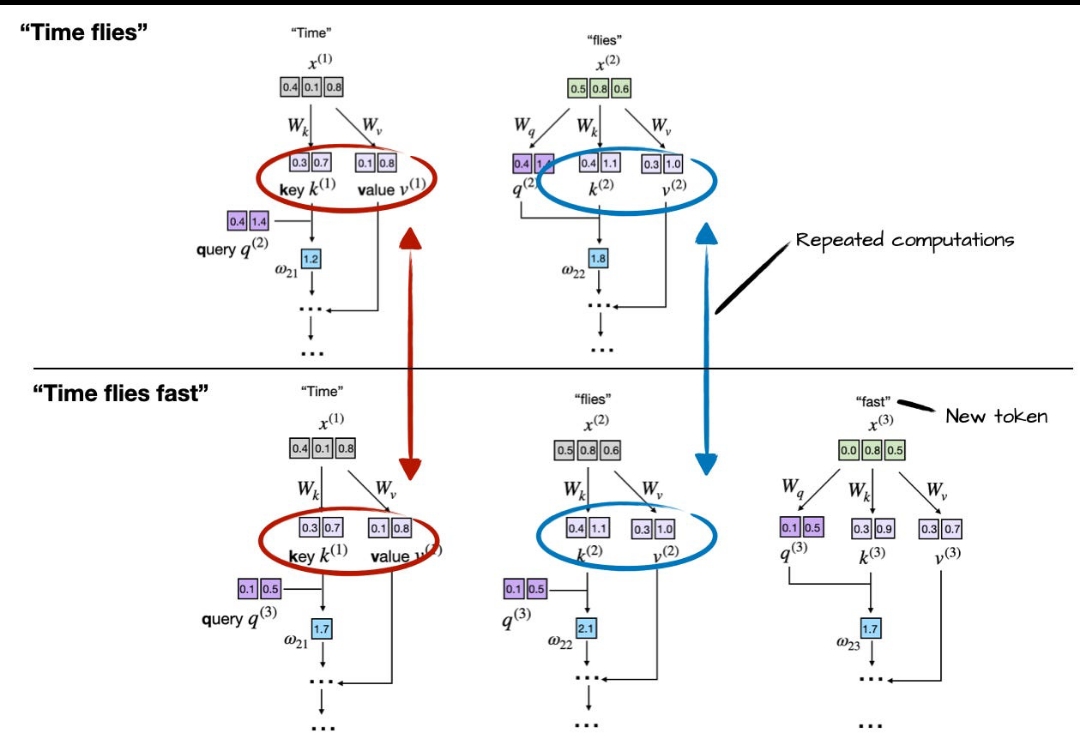

“Imagine if your brain could instantly recall everything you learned without ever forgetting. That’s what LM Cache does for AI.”

Normally, an AI model has to reprocess the whole input from the beginning each time you ask a question, even if most of it repeats something you already asked. With caching, it can store the work it has already done and reuse it later. This means faster answers, lower costs, and less wasted energy.

But the real impact is long term. As caching improves, AI will be able to:

1. Keep long conversations going without slowing down
2. Work on complex projects for days instead of minutes
3. Run advanced models on everyday devices
4. Cut down the massive energy demands of large models

LM Cache is more than a performance trick. It is one of the key steps that will make AI sustainable and powerful enough to grow with us into the future.

What do you think: "If AI had a memory like us, what’s the very first thing it should remember?"
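For anyone curious what "store past work and reuse it" looks like in practice, here is a rough toy sketch in Python. It is not LM Cache's actual API: the class `ToyPrefixCache`, its methods, and the checksum used as "processed state" are all made up for illustration. Real systems cache the model's internal attention state rather than a number, but the reuse pattern is the same idea: find the longest prefix already processed and only do the work for what is new.

```python
from typing import Dict, Tuple


class ToyPrefixCache:
    """Hypothetical toy cache: maps a token prefix to its 'processed state'."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, ...], int] = {}
        self.tokens_processed = 0  # counts simulated per-token work

    def _process_token(self, state: int, token: str) -> int:
        # Stand-in for the expensive model computation on one token.
        self.tokens_processed += 1
        return (state * 31 + sum(map(ord, token))) % 1_000_003

    def run(self, prompt: str) -> int:
        tokens = tuple(prompt.split())
        start, state = 0, 0
        # Look for the longest prefix whose state is already cached.
        for end in range(len(tokens), 0, -1):
            cached = self._store.get(tokens[:end])
            if cached is not None:
                start, state = end, cached
                break
        # Only process the tokens that come after the cached prefix.
        for i in range(start, len(tokens)):
            state = self._process_token(state, tokens[i])
            self._store[tokens[: i + 1]] = state
        return state


if __name__ == "__main__":
    cache = ToyPrefixCache()
    cache.run("what is a cache")
    print(cache.tokens_processed)  # 4: nothing was cached yet
    cache.run("what is a cache hit")
    print(cache.tokens_processed)  # 5: only the new token "hit" was processed
```

The second question shares its first four words with the first one, so only one new token costs any work. That saved work is where the speed and cost gains come from.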

