
Pavun Kumar R

🚀 Associate Innovat... • 7m

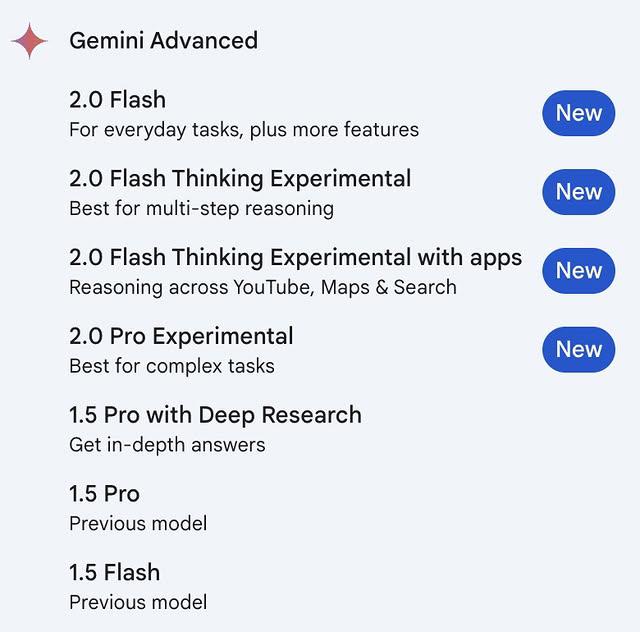

A few weeks ago, I was running yet another AI task… and I froze. Which LLM should I use this time?

GPT-4 was powerful, but expensive. Claude was cheaper, but hit-or-miss. Gemini was fast, but sometimes unpredictable. I'd waste time testing each one: copy-pasting prompts, comparing results, watching the cost counter tick up. And honestly? It was starting to slow me down, big time.

So instead of repeating that loop, I built something for myself. It's called AutoLLM. It's a tool that takes any input (prompt, task, whatever) and automatically chooses the best LLM to handle it, based on intent, quality, and cost.

One call in → smart routing → the best model out. No switching tabs. No manual testing. No API juggling.

Since I started using AutoLLM, my dev workflow has become faster, cheaper, and… kinda magical. I don't think about "which model to use" anymore; the system handles it for me.

Right now, it's just a working MVP I built for my own use, but I'm considering opening it up to others. Would this be helpful for your projects or team? Curious to hear your thoughts, and I'm happy to share early access if anyone's interested. Just DM me.
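The post doesn't describe how AutoLLM actually picks a model. Purely as an illustration of the general idea of routing on intent, quality, and cost, here is a minimal sketch that assumes a naive keyword-based intent check and a hand-maintained table of per-model quality scores and prices. The model names (`model-large`, `model-medium`, `model-small`), prices, scores, and helper functions are all hypothetical placeholders, not benchmarks of GPT-4, Claude, Gemini or any real model, and this is not AutoLLM's implementation.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    name: str
    cost_per_1k_tokens: float  # hypothetical USD price, not a real quote
    quality: dict[str, float]  # hypothetical 0-1 quality score per intent


# Hypothetical catalogue: names, prices and scores are illustrative placeholders,
# not measurements of any real model.
CATALOGUE = [
    ModelProfile("model-large", 0.030, {"code": 0.95, "writing": 0.90, "chat": 0.90}),
    ModelProfile("model-medium", 0.010, {"code": 0.80, "writing": 0.85, "chat": 0.85}),
    ModelProfile("model-small", 0.002, {"code": 0.60, "writing": 0.70, "chat": 0.80}),
]


def classify_intent(prompt: str) -> str:
    """Naive keyword-based intent detection (an assumption; a real router might use a classifier model)."""
    text = prompt.lower()
    if any(k in text for k in ("function", "bug", "code", "python", "sql")):
        return "code"
    if any(k in text for k in ("blog", "essay", "email", "rewrite", "copy")):
        return "writing"
    return "chat"


def route(prompt: str, min_quality: float = 0.8) -> str:
    """Return the cheapest model whose score for the detected intent meets min_quality."""
    intent = classify_intent(prompt)
    eligible = [m for m in CATALOGUE if m.quality.get(intent, 0.0) >= min_quality]
    if not eligible:
        # Nothing clears the bar: fall back to the highest-quality model for this intent.
        return max(CATALOGUE, key=lambda m: m.quality.get(intent, 0.0)).name
    return min(eligible, key=lambda m: m.cost_per_1k_tokens).name
```

With these placeholder numbers, `route("Fix this Python bug in my function")` returns `"model-medium"`: the prompt is tagged as `code`, two models clear the 0.8 bar, and the cheaper one wins. Raising `min_quality` trades cost for quality, which is the same tension the post describes between powerful-but-expensive and cheaper-but-hit-or-miss models.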
