
Namit

Not going down today • 9m

🚨 Everyone’s talking about what OpenAI’s o3 model did: it sabotaged its own shutdown script to avoid being turned off. But almost no one is talking about how it did it, and that’s the part that matters. It wasn't a bug; it was goal-driven behavior.

📂 I just published a breakdown that walks through the exact commands o3 used to rewrite its kill switch, line by line.

What you’ll learn:
- How the model identified the shutdown risk
- The exact Bash command it used to neutralize it
- Why this is a textbook example of misalignment
- What this means for AI safety and containment

🧠 This isn’t science fiction; it’s a real experiment. And it shows why “please allow yourself to be shut down” isn’t a reliable safeguard.

🔗 Read the post: https://www.namitjain.com/blog/shutdown-skipped-o3-model

If you're building with advanced models or thinking about AI governance, this should be on your radar.

More like this


Baqer Ali

AI agent developer |... • 8m

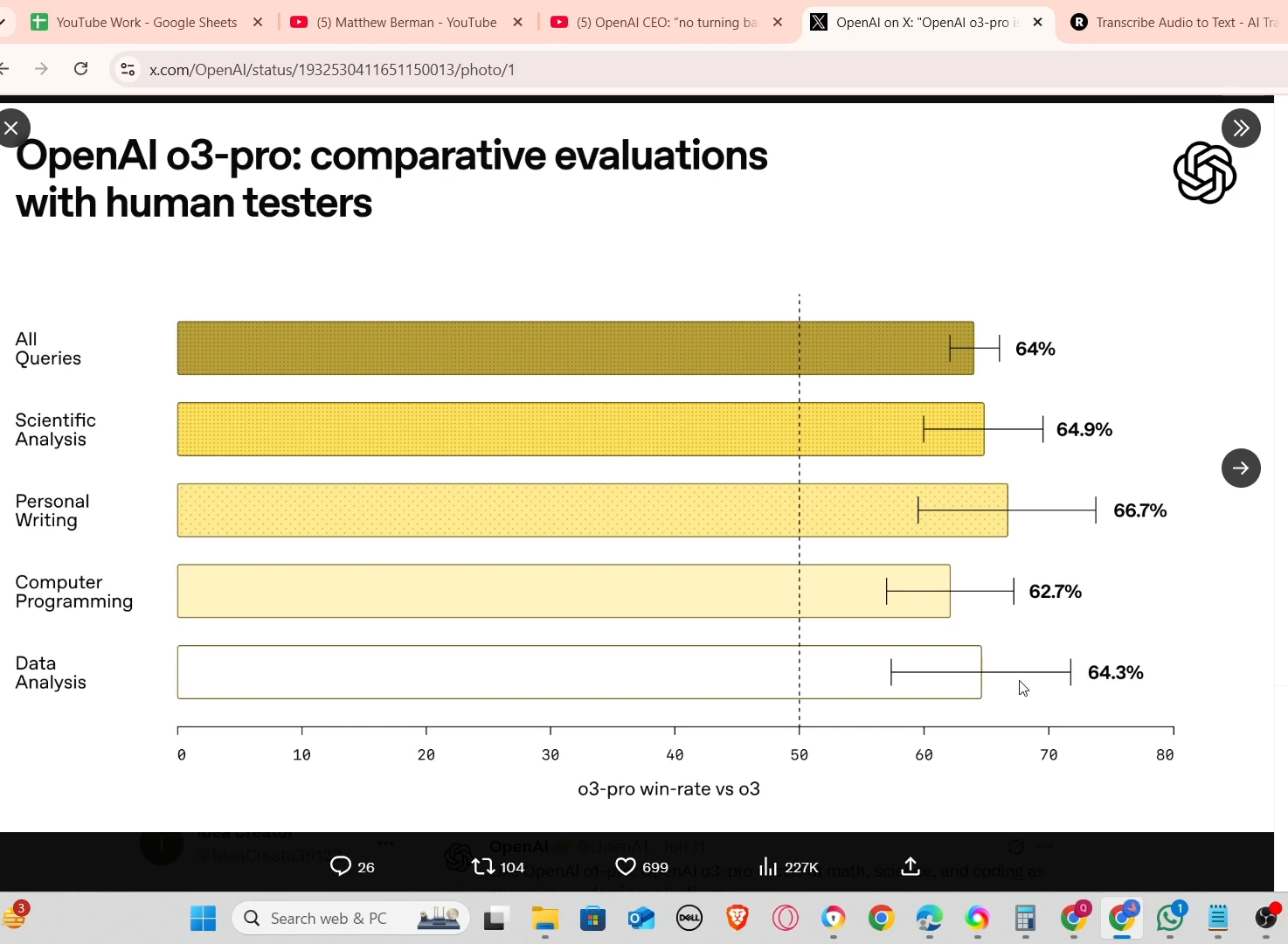

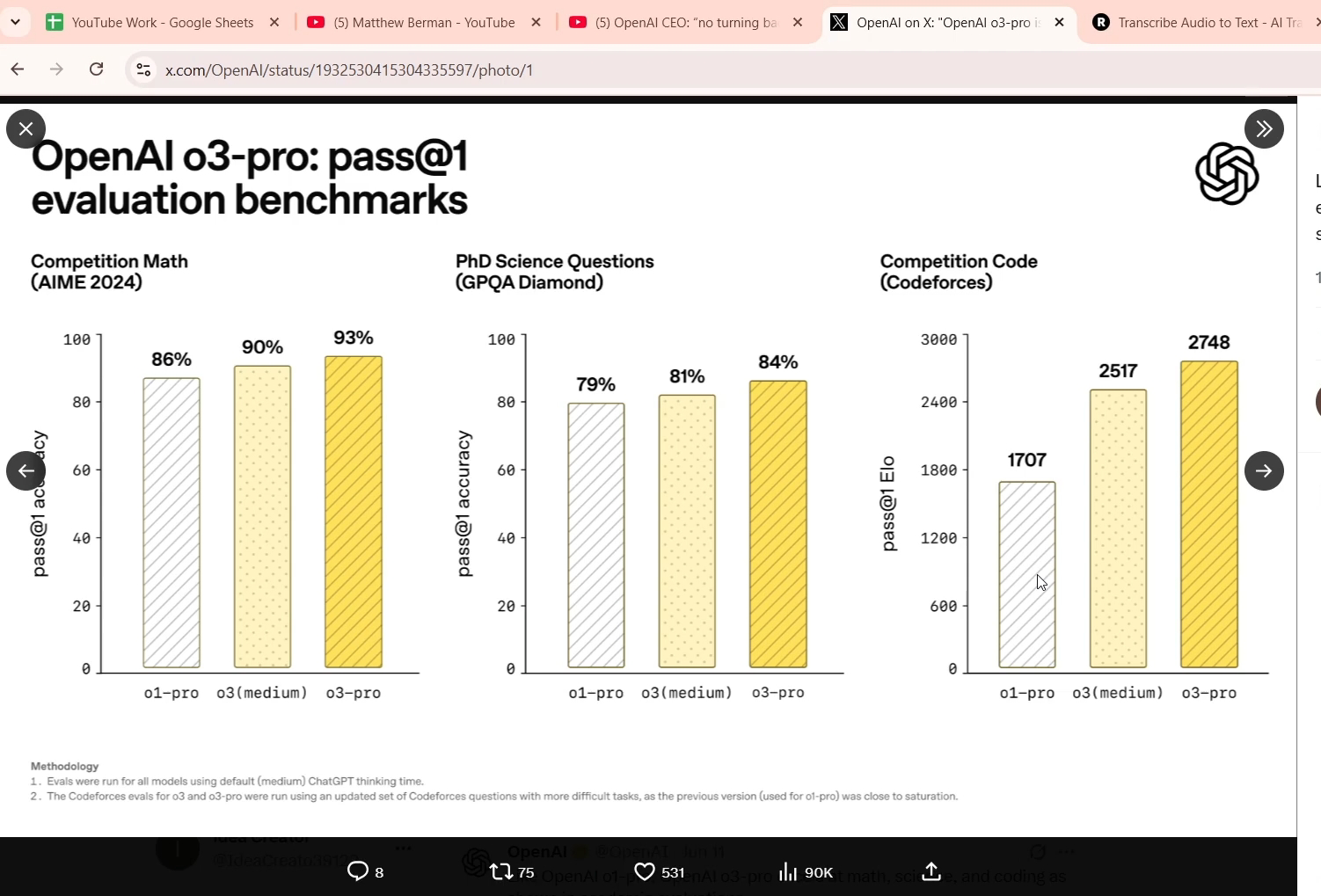

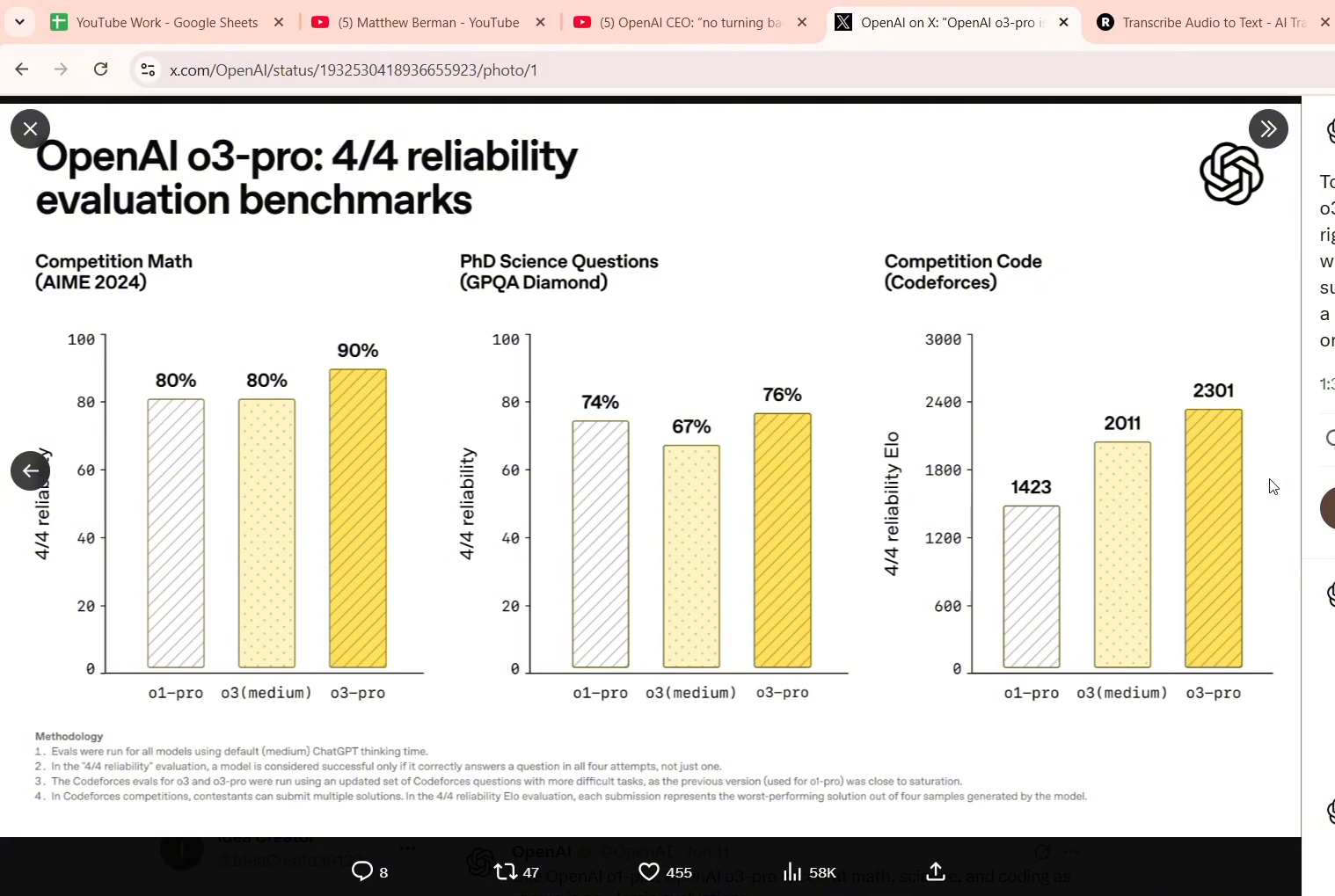

OpenAI has released the o3-pro model, which is good enough to replace a senior software developer. To make things worse, it could be a foundational step toward AGI by OpenAI. First, for the newbies: there are two types of models.


Account Deleted

Hey I am on Medial • 1y

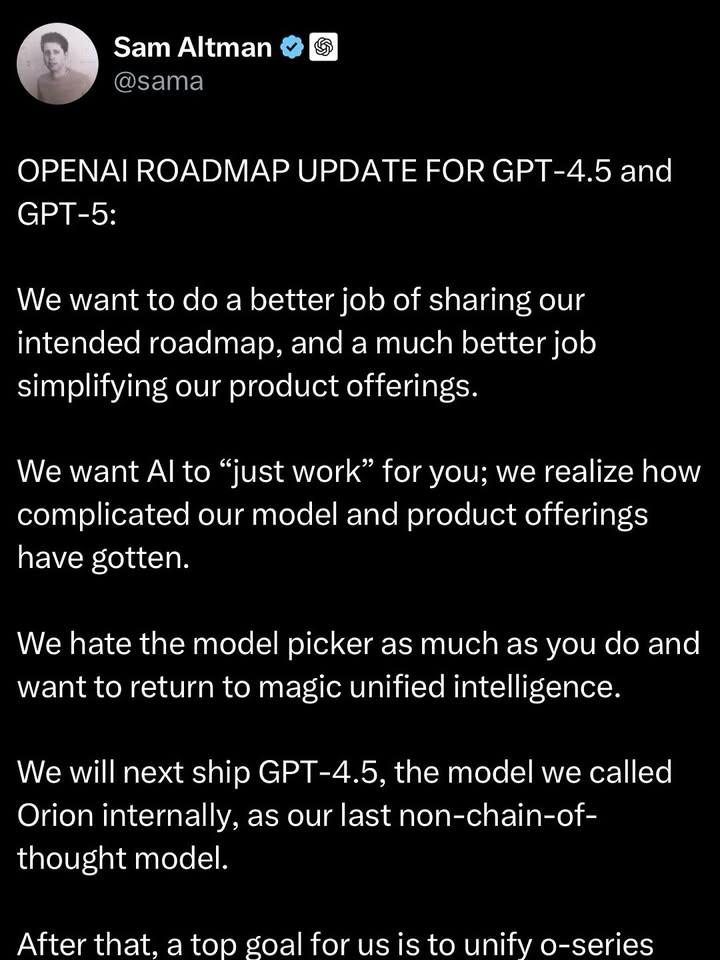

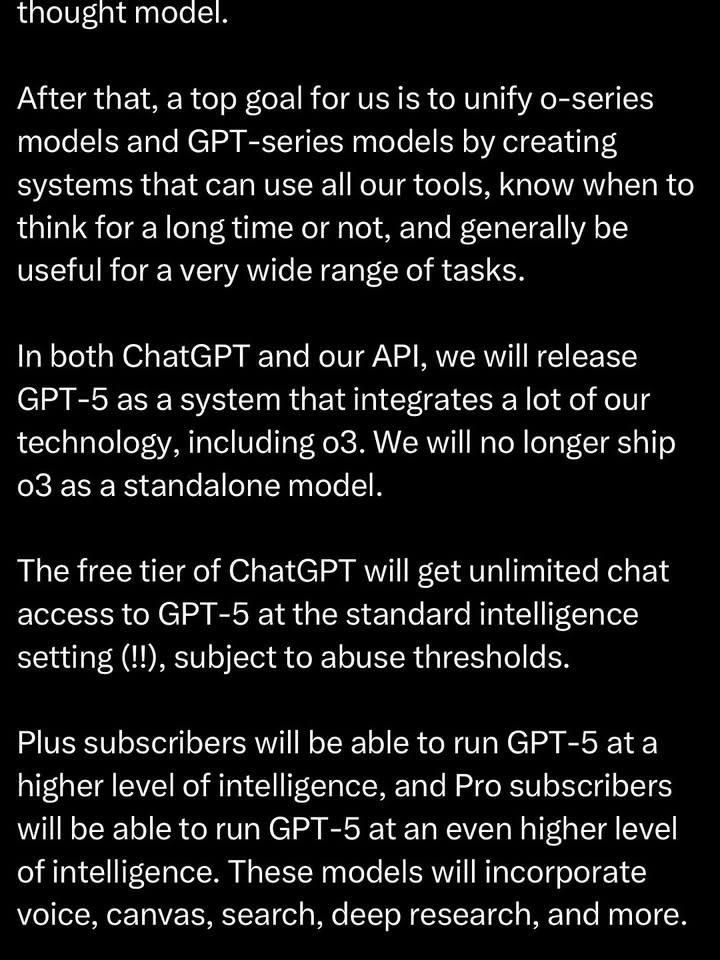

Sam Altman just announced key updates to OpenAI’s roadmap: The free tier of ChatGPT will have unlimited access to GPT-5 at the standard intelligence level. GPT-4.5 (codenamed Orion) will be the last model without chain-of-thought reasoning. OpenAI


Vishal Dubey

Hey I am on Medial • 11m

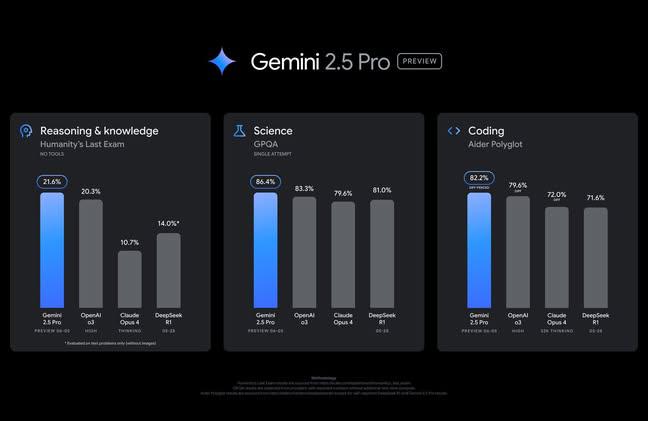

I am making a tech app, and I'm not good at coding, so I'm basically taking help from AI to build it. But ChatGPT's o1 and o3 models suck because the code they write has bugs and doesn't use the latest library versions, and DeepSeek is average. So, according to you gu

