Sarthak Gupta

17 | Building Doodle... • 10m

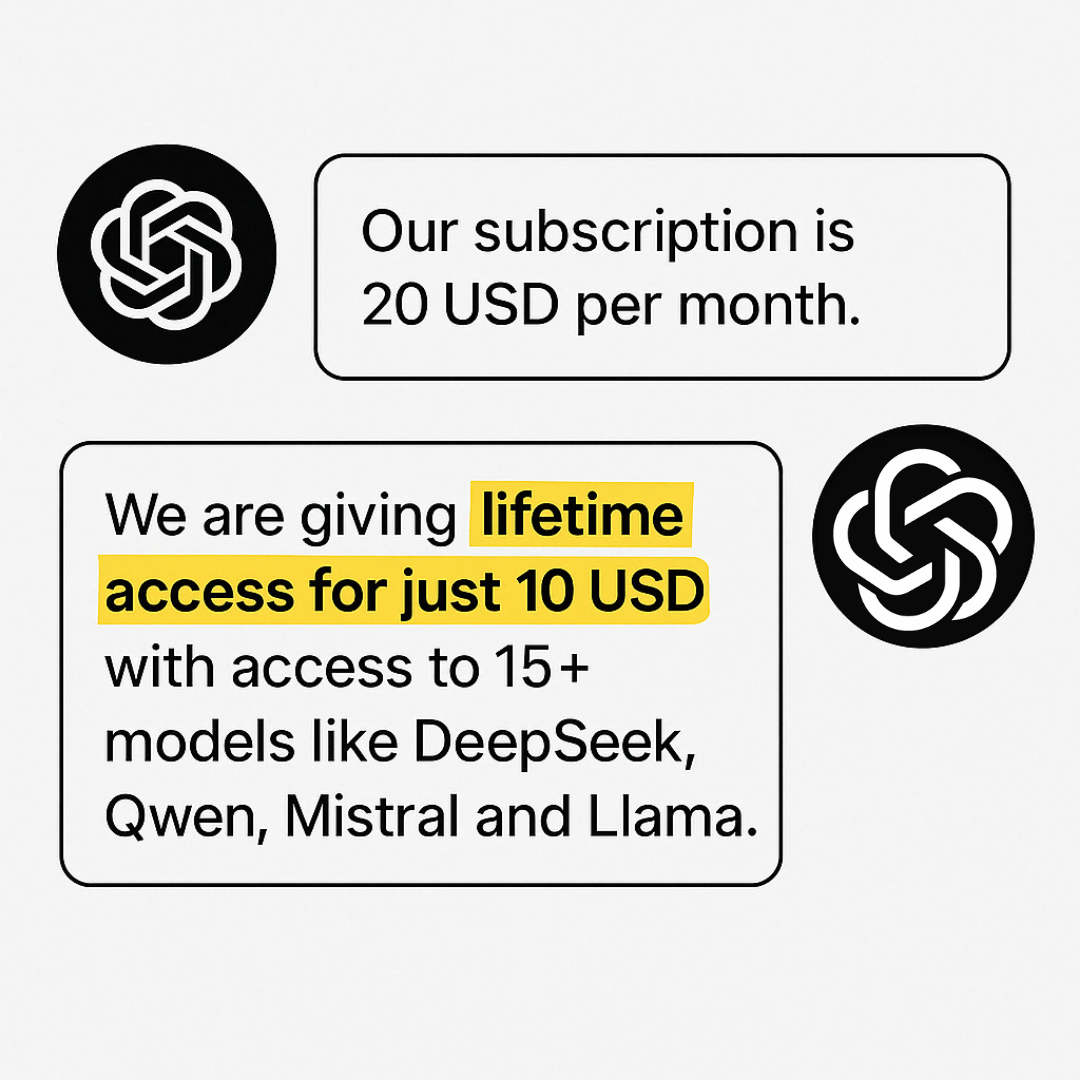

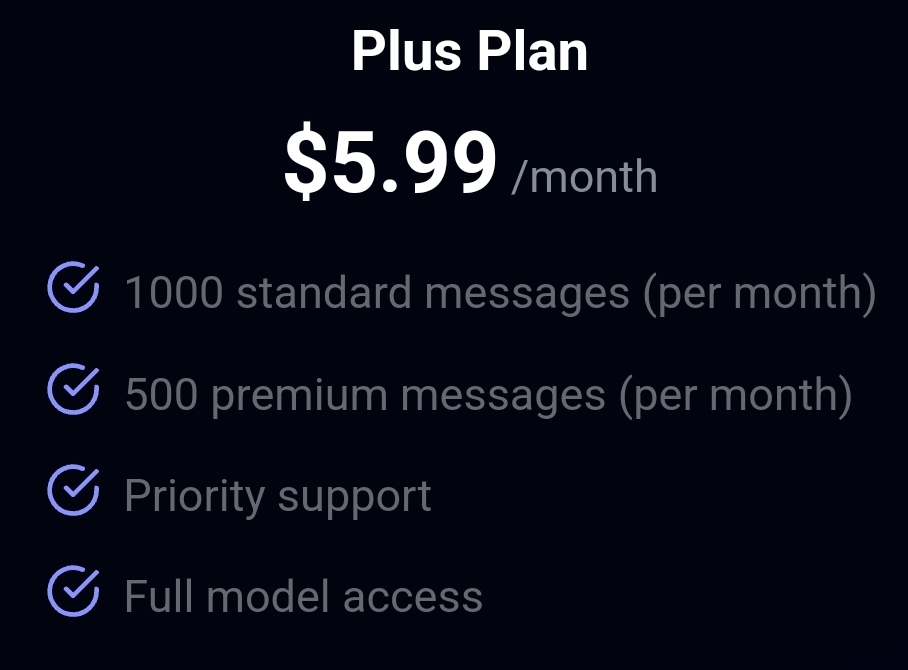

Introducing AnyLLM 🚀

AnyLLM is your destination for 15+ LLMs, including Llama from Meta, DeepSeek, Qwen from Alibaba and Gemma from Google.

How is AnyLLM different? Good question 🤔

● Use 15+ LLMs in one place.
● Use your own API key and get the benefit of the free tier, which gives you roughly 10x the daily usage.
● Believe me when I say AnyLLM is 10x faster than ChatGPT.

AnyLLM uses the Groq API under the hood. You generate a free API key from Groq and get lakhs (hundreds of thousands) of free tokens daily. Really, it's a lot.

As a special offer, we are giving a lifetime subscription to AnyLLM for just 999. Valid for today only.
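The post says AnyLLM runs on the Groq API with a key you generate yourself. As a rough sketch of what a bring-your-own-key call can look like, the Python below builds a request to Groq's OpenAI-compatible chat completions endpoint using only the standard library. The endpoint URL, the `GROQ_API_KEY` environment variable, the helper names and the model id are assumptions for illustration, not AnyLLM's actual code; check Groq's documentation for current model names.

```python
import json
import os
import urllib.request

# Assumed endpoint: Groq's OpenAI-compatible chat completions route.
GROQ_CHAT_URL = "https://api.groq.com/openai/v1/chat/completions"


def build_groq_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request authenticated with the user's own key."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GROQ_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_groq(api_key: str, model: str, prompt: str) -> str:
    """Send the request and return the text of the first choice."""
    req = build_groq_request(api_key, model, prompt)
    with urllib.request.urlopen(req, timeout=30) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("GROQ_API_KEY")  # hypothetical variable name holding the user's key
    if key:
        # Example model id only; Groq's model list changes over time.
        print(ask_groq(key, "llama-3.1-8b-instant", "Say hello in one sentence."))
```

Keeping request construction separate from sending makes it easy to swap the model id per request, which is the kind of switching between many models that the post describes.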

More like this

Sarthak Gupta

17 | Building Doodle... • 10m

…for the last 24 hrs. AnyLLM lifetime subscription for just 999. Link in the comments below. Introducing AnyLLM 🚀 AnyLLM is your destination for 15+ LLMs, including Llama from Meta, DeepSeek, Qwen from Alibaba and Google. How is AnyLLM different? …

Sarthak Gupta

17 | Building Doodle... • 10m

Introducing AnyLLM 🚀 AnyLLM is your go-to destination for 15+ LLMs, including Llama from Meta, DeepSeek, Qwen from Alibaba and Gemma from Google. How is AnyLLM different? Good question 🤔 I built AnyLLM after struggling with the high subscripti…

Sarthak Gupta

17 | Building Doodle... • 10m

🚀 For the first time in LLM history! Introducing Agentic Compound LLMs in AnyLLM. What are agentic LLMs? Agentic LLMs have access to all 10+ LLMs in AnyLLM and know when to use each of them to perform a specific task. They also have acces…
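A minimal sketch of the "know when to use each model" idea, assuming a simple keyword router: the post does not describe how AnyLLM's agentic LLMs actually decide, and a real agentic system would more likely ask an LLM (or use tool calling) to make the choice. Every model id and keyword below is a hypothetical placeholder.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Route:
    model: str                  # hypothetical model id
    keywords: Tuple[str, ...]   # words suggesting this model suits the task


# Placeholder routing table; not AnyLLM's real model list or rules.
ROUTES = (
    Route("hypothetical-code-model", ("code", "function", "bug", "python")),
    Route("hypothetical-math-model", ("solve", "equation", "integral", "proof")),
    Route("hypothetical-summary-model", ("summarize", "summary", "tl;dr")),
)
DEFAULT_MODEL = "hypothetical-general-model"


def pick_model(task: str) -> str:
    """Return the model whose keywords appear most often in the task.

    Uses naive substring matching; ties keep the earlier route and
    a task with no matches falls back to DEFAULT_MODEL.
    """
    text = task.lower()
    best_model, best_hits = DEFAULT_MODEL, 0
    for route in ROUTES:
        hits = sum(1 for kw in route.keywords if kw in text)
        if hits > best_hits:
            best_model, best_hits = route.model, hits
    return best_model


if __name__ == "__main__":
    for task in ("Fix this bug in my Python function", "Summarize this article", "Tell me a joke"):
        print(f"{task!r} -> {pick_model(task)}")
```

Replacing the body of `pick_model` with a call that asks a general model to choose from the same table would be closer to what "agentic" usually means; the keyword version only keeps the sketch self-contained.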

Anonymous

Hey I am on Medial • 1y

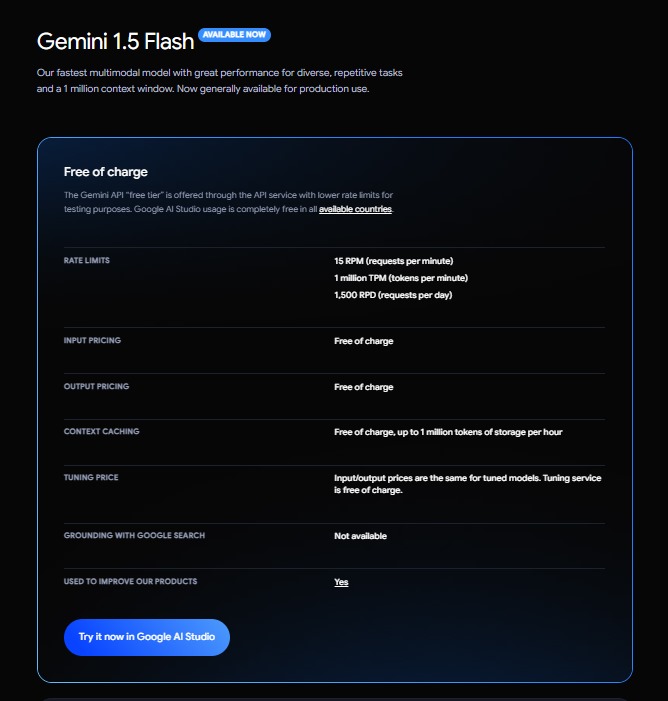

Hi, I have created an AI Android app that uses the Gemini API (Gemini 1.5 Flash) to fetch AI responses. It provides a rate limit of 15 RPM (requests per minute) and 1M TPM (tokens per minute), which is obviously too low for production. So, I have an idea that I will generate 20…
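Staying inside the quoted 15 RPM and 1M TPM limits can be handled with a sliding-window limiter. The hypothetical `MinuteRateLimiter` below is a sketch that assumes it runs in one backend process proxying every Gemini call (assuming the limits are shared by all requests made under one API key, per-device limiters on separate phones would not coordinate) and that the caller supplies a token estimate for each request.

```python
import time
from collections import deque
from typing import Deque, Optional, Tuple


class MinuteRateLimiter:
    """Hypothetical limiter: at most `max_requests` requests and `max_tokens`
    tokens within any sliding `window`-second period."""

    def __init__(self, max_requests: int = 15, max_tokens: int = 1_000_000, window: float = 60.0):
        self.max_requests = max_requests
        self.max_tokens = max_tokens
        self.window = window
        # (timestamp, tokens) for each accepted request still inside the window
        self._events: Deque[Tuple[float, int]] = deque()

    def _prune(self, now: float) -> None:
        # Forget requests that are at least `window` seconds old.
        while self._events and now - self._events[0][0] >= self.window:
            self._events.popleft()

    def try_acquire(self, tokens: int, now: Optional[float] = None) -> bool:
        """Record the request and return True if both caps allow it, else return False."""
        now = time.monotonic() if now is None else now
        self._prune(now)
        used_tokens = sum(t for _, t in self._events)
        if len(self._events) >= self.max_requests or used_tokens + tokens > self.max_tokens:
            return False
        self._events.append((now, tokens))
        return True

    def acquire(self, tokens: int, poll: float = 0.5) -> None:
        """Block until the request fits under both caps."""
        if tokens > self.max_tokens:
            raise ValueError("request exceeds the per-window token cap")
        while not self.try_acquire(tokens):
            time.sleep(poll)
```

For example, calling `limiter.acquire(estimated_tokens)` before each Gemini request blocks until the request fits, while a single request larger than `max_tokens` raises `ValueError` instead of waiting forever.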
