
Dr Bappa Dittya Saha

We're gonna extinct ... • 11m

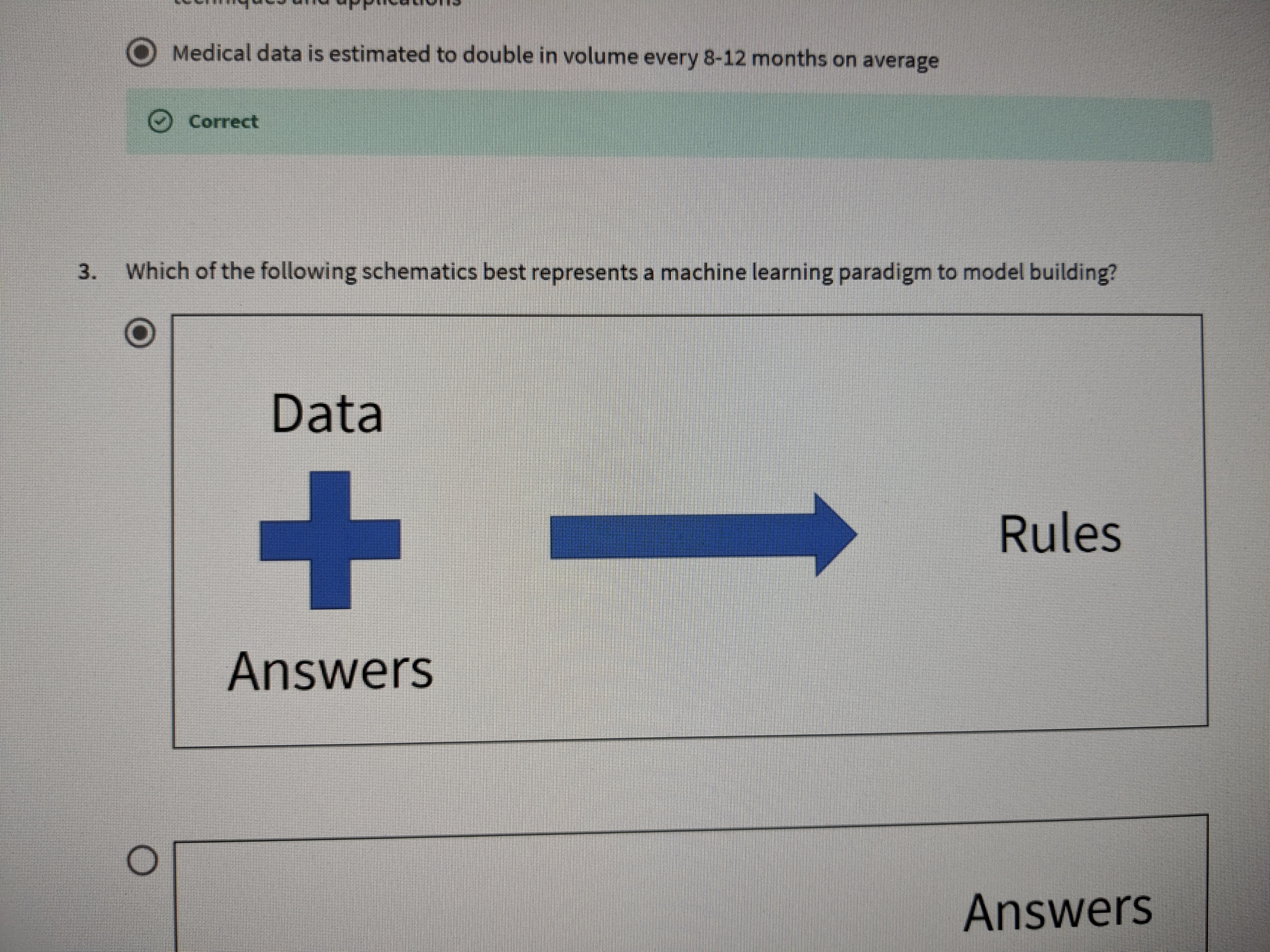

The Hidden Bias in Medical AI: Are We Training Fair Systems?

AI in healthcare is only as good as the data it learns from. If that data is flawed, the AI inherits those flaws, amplifying healthcare disparities rather than reducing them.

⚠️ The problem? Bias creeps in through:

🔹 Data imbalance: if a model is trained mostly on one demographic, it performs poorly on others.

🔹 Systemic bias: historical healthcare inequalities get baked into algorithms, reinforcing the same injustices.

🔹 Environmental bias: differences in hospital settings, imaging techniques, and even device settings can skew results.

🚨 AI isn't magic; it reflects the world we feed it. The real question: are we building AI that fixes biases or worsens them?

What's your perspective? How can we build better models, and how can we capture and store data so that it is meaningful and easy to train on?

#MedicalAI #BiasInAI #HealthcareEquity
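One way to make the data-imbalance and environmental-bias points concrete is to audit a dataset before and after training. The sketch below is a minimal illustration under stated assumptions, not the author's method: it assumes each example is stored with a demographic `group` plus acquisition metadata (`site`, `device`), and the `Record` type and helper names are hypothetical. Inverse-frequency reweighting is shown as one common mitigation among several.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One labelled example plus the metadata needed for a bias audit.

    Hypothetical schema: 'group' stands for a demographic attribute,
    'site' and 'device' describe where and how the data was acquired.
    """
    group: str
    site: str
    device: str
    label: int
    pred: int  # model prediction, filled in after inference


def representation(records, key):
    """Fraction of records for each value of `key` (e.g. 'group', 'site')."""
    counts = Counter(getattr(r, key) for r in records)
    total = sum(counts.values())
    return {k: c / total for k, c in counts.items()}


def per_group_accuracy(records, key):
    """Accuracy computed separately for each value of `key`."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for r in records:
        k = getattr(r, key)
        totals[k] += 1
        hits[k] += int(r.label == r.pred)
    return {k: hits[k] / totals[k] for k in totals}


def inverse_frequency_weights(records, key):
    """Per-record weights so every value of `key` contributes equally.

    Each group's weights sum to len(records) / number_of_groups.
    """
    counts = Counter(getattr(r, key) for r in records)
    n, g = len(records), len(counts)
    return [n / (g * counts[getattr(r, key)]) for r in records]


if __name__ == "__main__":
    # Toy data: group A dominates, and the model fails on group B.
    data = [
        Record("A", "site1", "scannerX", 1, 1),
        Record("A", "site1", "scannerX", 0, 0),
        Record("A", "site1", "scannerX", 1, 1),
        Record("B", "site2", "scannerY", 1, 0),
    ]
    print(representation(data, "group"))      # {'A': 0.75, 'B': 0.25}
    print(per_group_accuracy(data, "group"))  # {'A': 1.0, 'B': 0.0}
    print(inverse_frequency_weights(data, "group"))
```

Storing group and acquisition metadata next to each label, as the hypothetical `Record` does, is one possible answer to the question of how to capture data: the same helpers can then be pointed at `"site"` or `"device"` to check for environmental bias. Per-group accuracy is only a starting point; a real audit would likely look at sensitivity, specificity, and calibration per group as well.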


More like this

Recommendations from Medial

Vatan Pandey

Founder & CEO @Zyber... • 11m

AI: Convenience or a Threat? 🤖⚡ We are rapidly adopting AI—for content creation, design, data analysis, and more. 🚀 But have we considered its impact on our creativity and skills? 🤔 🔹 AI has made work easier, but is it diminishing our original

See More

Aryan Mankotia

FEAR IS JUST AN ILLU... • 11m

Transforming Healthcare with Brain-Computer Interface (BCI) & Virtual Reality! At PixelPeak, we're developing a BCI-powered VR system that enables patients with paralysis, stroke, and motor impairments to communicate, move, and rehabilitate—using o

See More

Thakur Ambuj Singh

Entrepreneur & Creat... • 11m

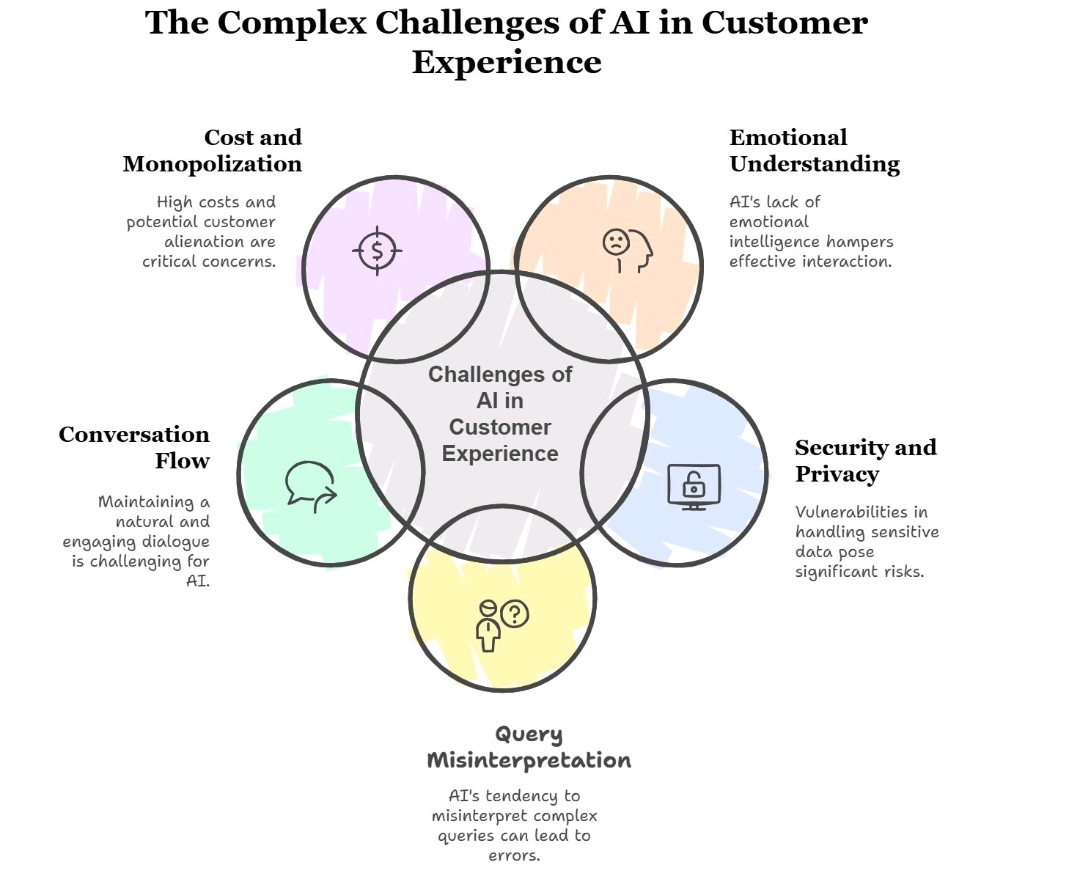

🚀 The AI Revolution in Customer Experience: A Game Changer or a Risky Bet? Artificial Intelligence is transforming customer interactions but is it really ready to handle the complexities of human emotions, security and conversation flow? 🔹 Cost

See More

Chandan Kumar Singh

Win The Chance • 11m

India’s Pharmacy Revolution – Looking for Visionary Investors! 🚀 Healthcare industry ₹2,50,000+ crore ka hai, par traditional medical stores ab bhi outdated models par chal rahe hain. Hamara Pharmacy ek AI-powered, hybrid (online + offline) pharmac

See MorePRADEEP BIJARNIYA

Founder & COO @Oogle... • 8m

🚀 Building a Tech or AI Product? Let Ooglesoft handle it for you! 🔹 Web & App Development 🔹 Custom AI Tools & Automation 🔹 Viral Branding & Launch Strategy Got an idea? Just DM us — we turn visions into working products. 📩 Message us now to get

See MoreDownload the medial app to read full posts, comements and news.
