SHIV DIXIT

CHAIRMAN - BITEX IND... • 1y

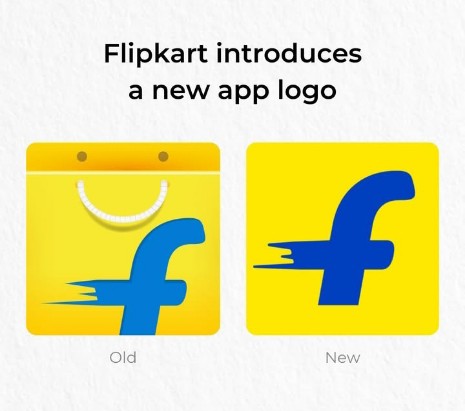

they just fine-tuned it

More like this

Recommendations from Medial

Aditya Karnam

Hey I am on Medial • 11m

"Just fine-tuned LLaMA 3.2 using Apple's MLX framework and it was a breeze! The speed and simplicity were unmatched. Here's the LoRA command I used to kick off training: ``` python lora.py \ --train \ --model 'mistralai/Mistral-7B-Instruct-v0.2' \ -

Rohan Saha

Founder - Burn Inves... • 7m

These days, many finance experts are turning on the "Join" button on their YouTube channels, all because they are scared of SEBI. They say they want to "talk privately" or "share real insights in detail", but the truth is they are just afraid SEBI might

Prabhjot Singh

@Techentia MERN & Ne... • 2m

Day 35 of 60: Worked on scaling high-performing ads in Meta Ads, improved the SEO content hierarchy, and fine-tuned website UX elements to enhance user engagement. Consistency and refinement are shaping stronger digital execution each day. #Day35 #60Day
