
Recommendations from Medial

Rahul Agarwal

Founder | Agentic AI... • 2m

Hands down the simplest explanation of AI agents using LLMs, memory, and tools. A user sends an input → the system (the agent) builds a prompt and may call tools and run a memory search (RAG) → the agent decides and builds an answer → the answer is returned to th…
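The input → prompt → tools/memory → answer flow in this post can be sketched in a few lines of Python. This is a minimal illustration, not the author's design. The `Memory` class, the `add` tool, and `stub_llm` are hypothetical names. Word-overlap retrieval stands in for a real vector search, and a rule-based stub stands in for the LLM's decision step.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Memory:
    """Toy memory store. Word-overlap scoring stands in for vector search (RAG)."""
    docs: list[str] = field(default_factory=list)

    def search(self, query: str, k: int = 2) -> list[str]:
        q = set(query.lower().split())
        # Score each document by how many query words it shares.
        scored = [(len(q & set(d.lower().split())), d) for d in self.docs]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [d for score, d in scored[:k] if score > 0]


# Hypothetical tool registry: tool name -> function taking a string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
}


def build_prompt(user_input: str, context: list[str]) -> str:
    lines = ["Context:"] + [f"- {c}" for c in context] + [f"User: {user_input}"]
    return "\n".join(lines)


def stub_llm(prompt: str) -> tuple[str, str]:
    """Stand-in for a real LLM: returns (action, argument).
    Requests the 'add' tool when the user line is a sum like '2 + 3'."""
    user_line = prompt.splitlines()[-1].removeprefix("User: ")
    if re.fullmatch(r"\s*\d+(\s*\+\s*\d+)+\s*", user_line):
        return ("add", user_line.replace(" ", ""))
    return ("answer", prompt)


def run_agent(user_input: str, memory: Memory) -> str:
    context = memory.search(user_input)          # 1. memory search (RAG)
    prompt = build_prompt(user_input, context)   # 2. build the prompt
    action, arg = stub_llm(prompt)               # 3. model decides: tool call or answer
    if action in TOOLS:
        return f"Result: {TOOLS[action](arg)}"   # 4. call the chosen tool
    # 5. no tool needed: answer from the retrieved context
    return context[0] if context else "I don't know."
```

For example, with `Memory(["Paris is the capital of France"])`, asking `"2 + 3"` routes through the `add` tool, while `"capital of France"` is answered from memory. A real agent would replace `stub_llm` with a model call and usually loop until the model chooses to answer.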


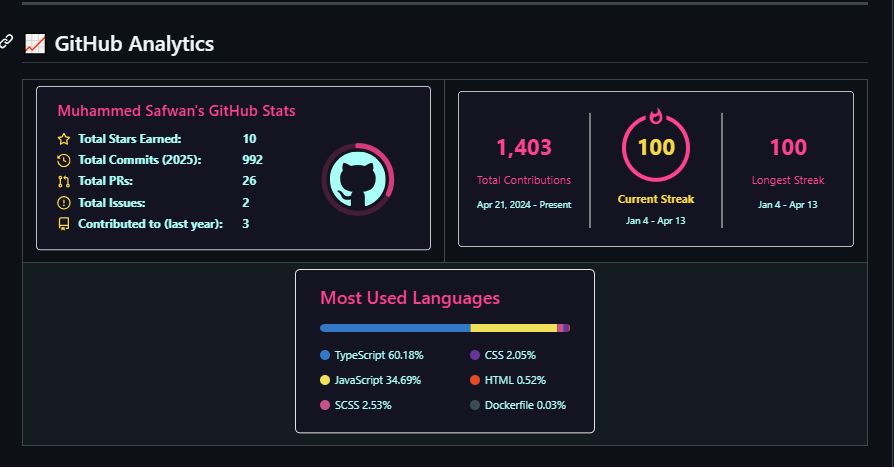

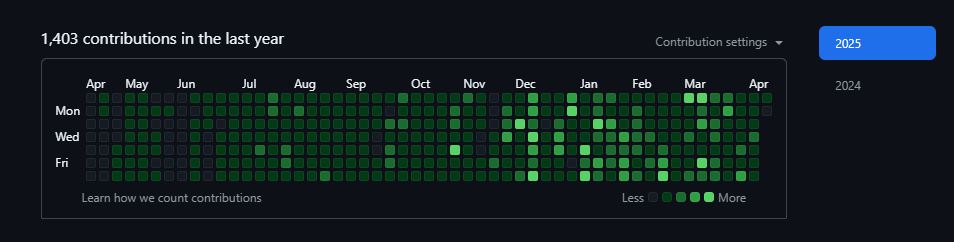

Harish Kumar K

I build what you see • 3m

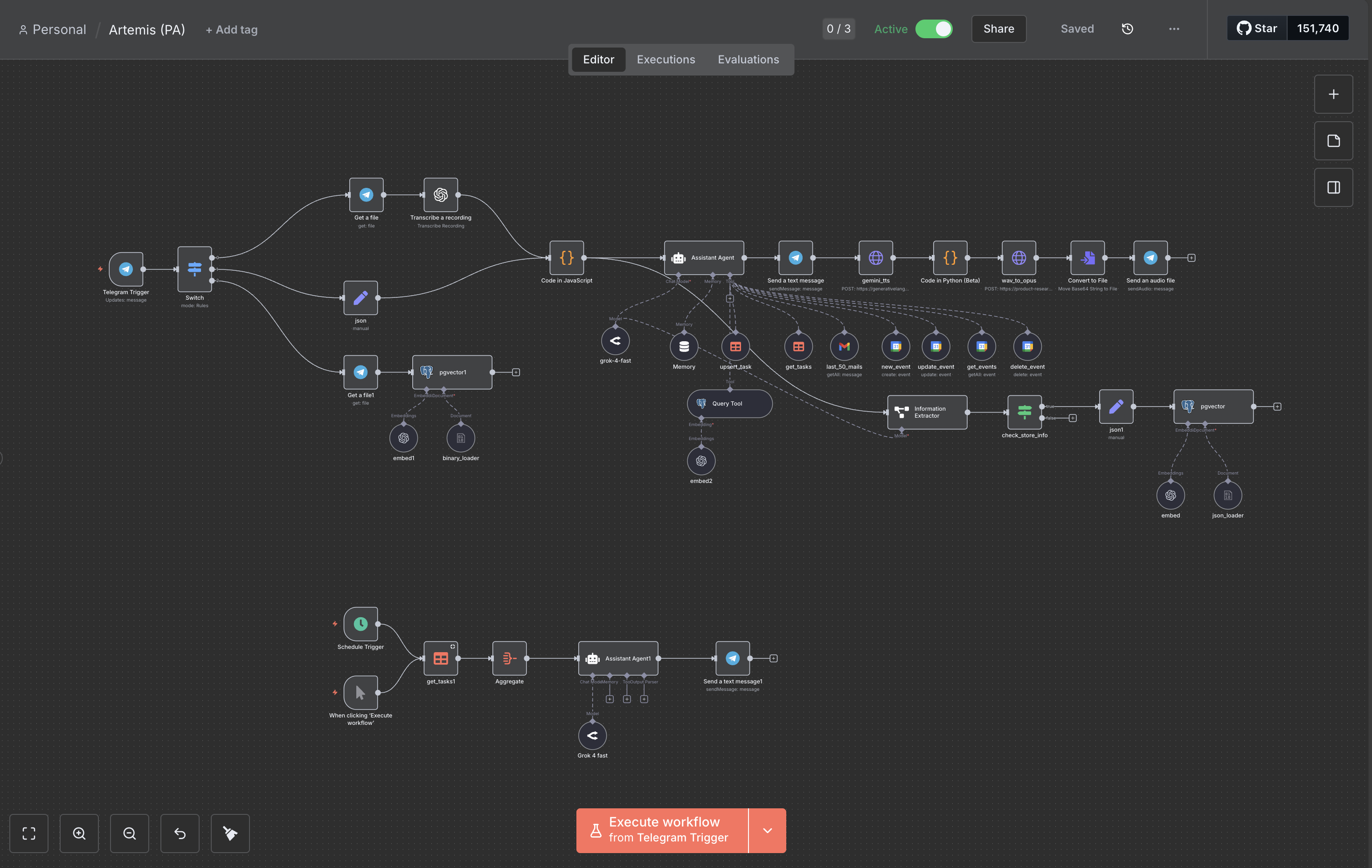

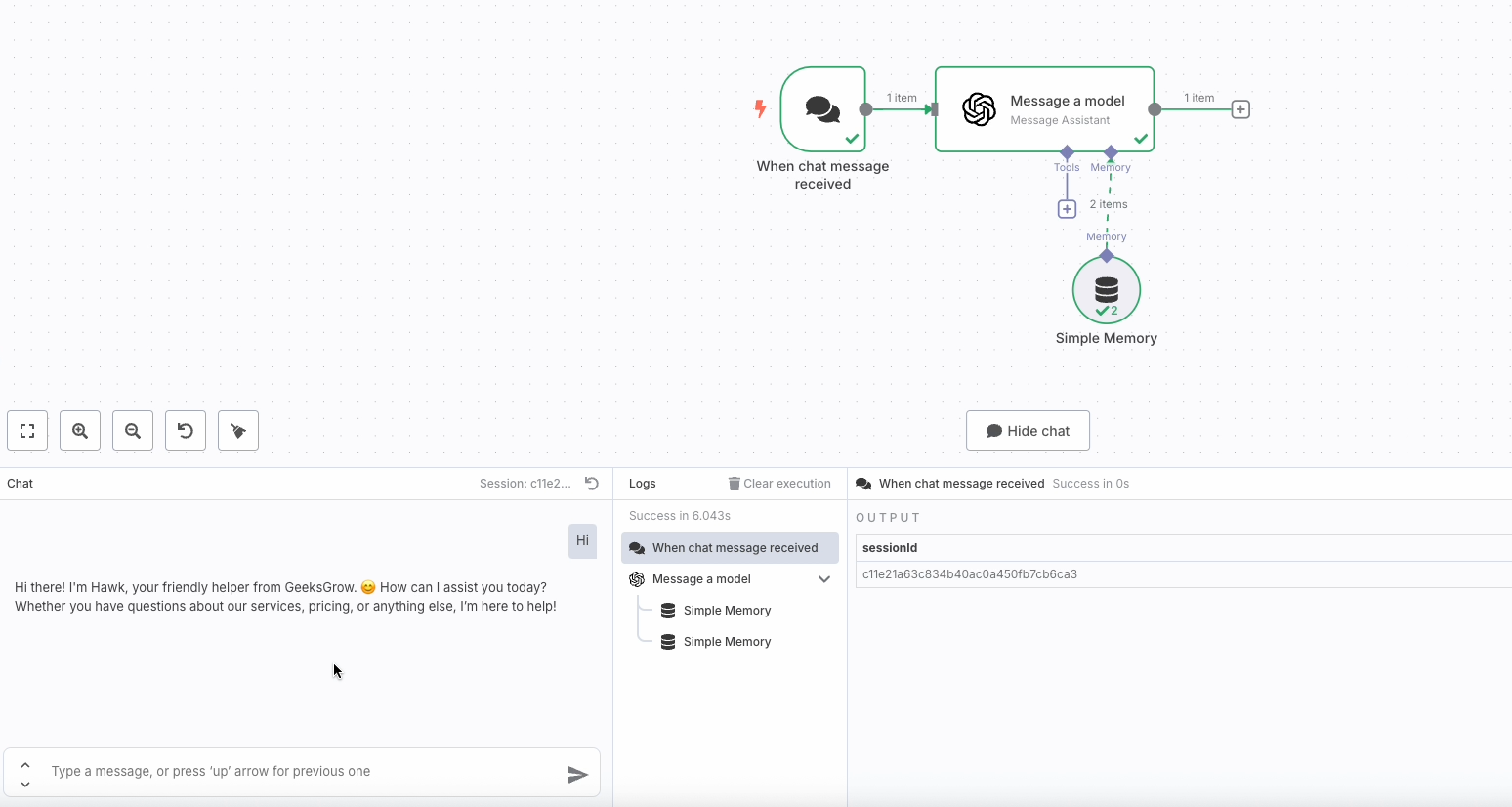

n8n is honestly one of the best tools I’ve come across, and I absolutely love it! 🚀 I wanted an easy way to organize and plan my day without it feeling like a chore, so I built a little Telegram bot powered by n8n. It’s got: • A tasks data table to ma…


Nandha Reddy

Cyber Security | Blo... • 1y

Name: Sow and Reap. Problem it's solving: the company is trying to reduce fertilizer use in modern-day farming by continuously educating farmers. The carbon credits these practices generate are put up for sale, and farmers can earn additional re…


The next billionaire

Unfiltered and real ... • 10m

Greg Isenberg just shared 23 MCP STARTUP IDEAS TO BUILD IN 2025 (ai agents/ai/mcp ideas), and it's amazing: "1. PostMortemGuy – when your app breaks (bug, outage), MCP agent traces every log, commit, and Slack message. Full incident report in seconds.…
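The core step of the PostMortemGuy idea is pulling logs, commits, and chat messages into one incident timeline. That step can be sketched in plain Python. This sketch rests on several assumptions and does not use MCP at all. The `Event` type, `build_timeline`, and `incident_report` are hypothetical names, and each source is assumed to arrive as a list already sorted by timestamp.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    time: datetime
    source: str  # hypothetical labels, e.g. "log", "commit", "chat"
    text: str


def build_timeline(*streams: list[Event]) -> list[Event]:
    """Merge several time-sorted event streams into one chronological timeline."""
    # heapq.merge assumes each input stream is already sorted by the key.
    return list(heapq.merge(*streams, key=lambda e: e.time))


def incident_report(events: list[Event], start: datetime, end: datetime) -> str:
    """Render the events that fall inside [start, end] as a plain-text report."""
    window = [e for e in events if start <= e.time <= end]
    lines = [f"Incident report {start:%H:%M}-{end:%H:%M} ({len(window)} events)"]
    lines += [f"{e.time:%H:%M:%S} [{e.source}] {e.text}" for e in window]
    return "\n".join(lines)
```

Because `heapq.merge` streams lazily over pre-sorted inputs, the merge stays cheap even with many sources. In the product idea, an agent would fetch each stream from the relevant service before this step.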

