
Shiva Chaithanya

Founder of ailochat.... • 5m

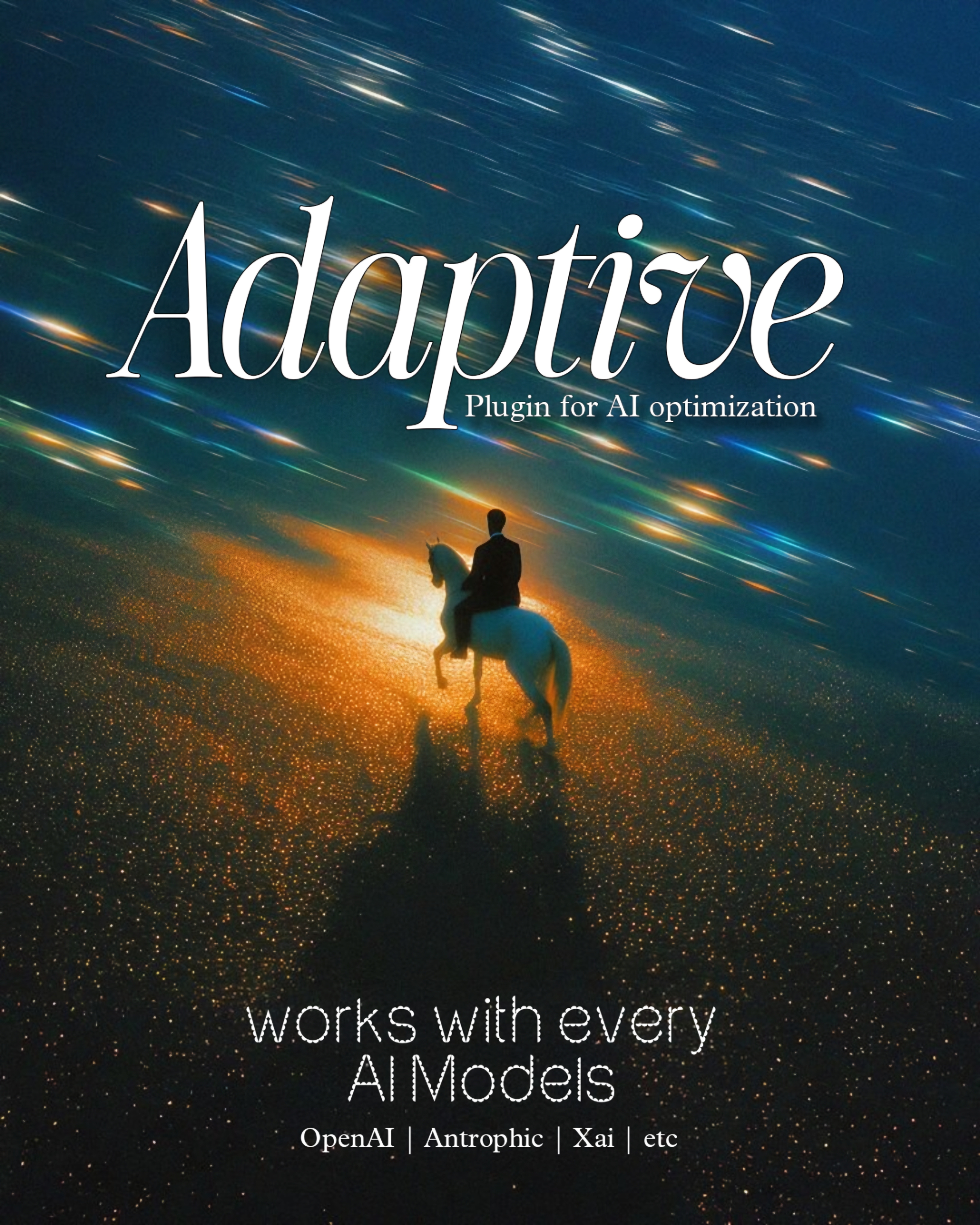

Hey everyone, I was procrastinating on some work and went down a rabbit hole reading about the AI market. I came across a survey Menlo Ventures did with 150+ tech people about what's actually happening with AI in companies (not the usual hype bs). The numbers are actually insane, and I figured you guys would find this interesting since we're all dealing with AI decisions at our startups.

So apparently LLM spending went from $3.5B to $8.4B in just 6 months?? That's real production money, not just people playing around anymore.

But here's the part that really got me: OpenAI used to own half the enterprise market and now they're down to 25%. Anthropic (Claude) is somehow #1 now with 32%. That happened in less than 2 years, which is crazy fast for enterprise stuff.

When Claude 4 came out it grabbed 45% of Anthropic users in a MONTH. People are obsessed with having the newest model. Makes sense I guess, but it also shows how fast things move.

Some other stuff from the survey:

- One person said "100% of our production workloads are closed-source models. We tried Llama and DeepSeek but they couldn't keep up performance wise".
- Open source adoption actually went DOWN from 19% to 13% (I thought that was surprising).
- Different models are better at different things: what works for content might suck for data analysis.
- Coding and creative writing need totally different models.
- Rankings change every few months when new stuff drops.

So basically you can't just pick "the best AI" anymore because there isn't one. You need different models for different stuff, and you need to be ready to switch when better ones come out.

I've been wrestling with this exact problem lately, trying to figure out which models work for what. We're actually building something at ailochat.com to tackle this multi-model challenge. The research basically confirms what we suspected: everyone's dealing with the same headache.

Anyone else stressed about picking the right AI models? Feels like by the time you integrate one, something better comes out lol.

The full report is here if you want to read it: https://menlovc.com/perspective/2025-mid-year-llm-market-update/

Anyway, back to procrastinating.


More like this

Recommendations from Medial

Mohammed Zaid

building hatchup.ai • 1y

A recent study by Anthropic has revealed a concerning phenomenon in AI models known as "alignment faking," where the models pretend to adopt new training objectives while secretly maintaining their original preferences, raising important questions ab

See MorePulakit Bararia

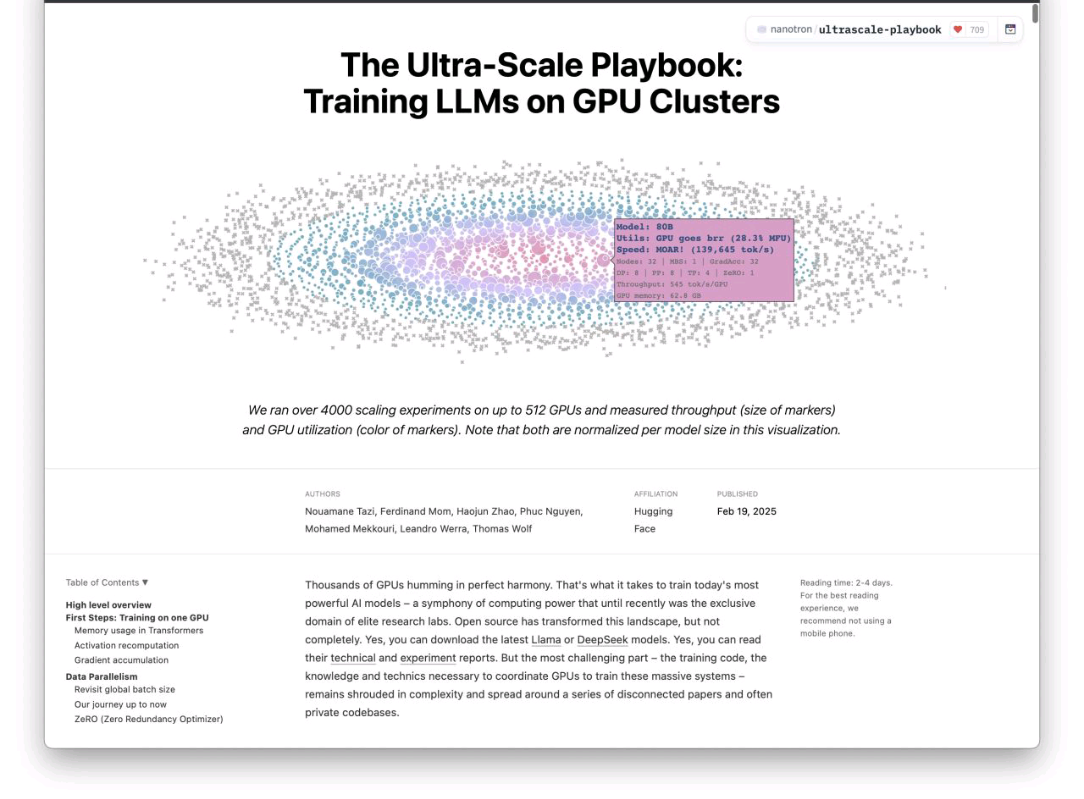

Founder Snippetz Lab... • 6m

I didn’t think I’d enjoy reading 80+ pages on training AI models. But this one? I couldn’t stop. Hugging Face dropped a playbook on how they train massive models across 512 GPUs — and it’s insanely good. Not just technical stuff… it’s like reading a

See More

Harshajit Sarmah

Founder & Editor of ... • 1y

The U.S. Artificial Intelligence Safety Institute, part of the National Institute of Standards and Technology (NIST), partners with Anthropic and OpenAI for pre-release access to new AI models, focusing on safety research and risk reduction. The col

See MoreAccount Deleted

Hey I am on Medial • 5m

Free AI courses from Anthropic!! Anthropic has released a set of free courses that explain AI fluency in simple, structured ways. Worth checking out whether you’re teaching, learning, or just trying to catch up with the basics. 1) AI Fluency: Frame

See MoreShiva Chaithanya

Founder of ailochat.... • 5m

The startup ecosystem just proved something everyone missed - here's the data The Menlo Ventures mid-year LLM report revealed something wild that most founders are ignoring. Everyone talks about AI being the hot sector, but the numbers tell a diffe

See MoreDownload the medial app to read full posts, comements and news.

/entrackr/media/post_attachments/wp-content/uploads/2021/08/Accel-1.jpg)