InsertLines Technologies

🚀 Supercharge Your Kubernetes Workflow with These AI Co-pilots! 🤖

Did you know 82% of enterprises plan to make cloud native their primary development platform within the next five years? Here are some of the most popular and powerful AI tools that can help you debug, optimize, and manage your K8s clusters with ease:

1. K8sGPT: Your AI-Powered Kubernetes Doctor 🩺
K8sGPT is designed to be your first responder for cluster emergencies. It scans your cluster, identifies issues, and uses AI models to explain the diagnosis in plain English.
🔗 GitHub: k8sgpt-ai/k8sgpt
🌐 Website: https://k8sgpt.ai
🌟 Key Features: Plain-English Explanations. Broad Diagnostic Coverage. Flexible LLM Support. Security-Focused.

2. kube-copilot: The AI-Powered Manifest & Fix Generator 📝
If K8sGPT is the doctor, kube-copilot is the surgeon. It not only tells you what's wrong but also helps you fix it by generating the code you need, making it a powerful assistant for both debugging and development.
🔗 GitHub: feiskyer/kube-copilot
🌟 Key Features: Automated Manifest Generation. Log Analysis and Fixes. CI/CD Integration.

3. kubectl-ai: Your Natural Language CLI Assistant 💬
This plugin converts your natural-language requests into the precise kubectl syntax you need.
🔗 GitHub: GoogleCloudPlatform/kubectl-ai
🌟 Key Features: Effortless Command Generation. Interactive and Safe. Multi-Provider Support.

4. KRR (Kubernetes Resource Recommender)
KRR tackles resource management head-on: it analyzes your cluster's actual usage and recommends right-sized CPU and memory requests and limits, helping you avoid the overprovisioning that can lead to massive cloud bills.
🔗 GitHub: robusta-dev/krr
🌟 Key Features: Intelligent Recommendations. Slash Your Cloud Costs. Easy-to-Export Reports.

5. llm-d: The Specialist for AI Model Deployment 🧠
llm-d is built for teams serving large language models on Kubernetes. It is an infrastructure tool designed to make LLM inference on Kubernetes fast, scalable, and efficient.
🔗 GitHub: llm-d/llm-d
🌟 Key Features: Optimized Inference Servers. Autoscaling for AI Workloads. Simplified Model Delivery.

How is your organization using AI to tame Kubernetes complexity? Share your experiences or drop a 👍 if you're ready to go beyond manual ops!

#requirements #InsertlinesTechnologies #KubernetesAI #DevOps #CloudNative
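
To get a feel for the "scan your cluster and identify issues" step that K8sGPT automates, here is a toy Python sketch of a rule-based pod check. It is not K8sGPT's implementation: the function `find_pod_issues`, the set of waiting reasons it flags, and the sample pods are all hypothetical, and the input is assumed to be the JSON that `kubectl get pods -A -o json` prints.

```python
from typing import Any

# Hypothetical rule set: container "waiting" reasons that usually mean a broken workload.
# Illustrative only, not exhaustive and not taken from K8sGPT.
PROBLEM_REASONS = {
    "CrashLoopBackOff",
    "ImagePullBackOff",
    "ErrImagePull",
    "CreateContainerConfigError",
}


def find_pod_issues(pod_list: dict[str, Any]) -> list[str]:
    """Return readable findings for a pod list shaped like `kubectl get pods -A -o json` output."""
    findings: list[str] = []
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        ref = f"{meta.get('namespace', 'default')}/{meta.get('name', '?')}"
        status = pod.get("status", {})
        # A pod stuck in Pending usually could not be scheduled (e.g. requests too large).
        if status.get("phase") == "Pending":
            findings.append(f"{ref}: pod is Pending (check scheduling and resource requests)")
        # Inspect each container's current state for a known failure reason.
        for cs in status.get("containerStatuses", []):
            reason = cs.get("state", {}).get("waiting", {}).get("reason")
            if reason in PROBLEM_REASONS:
                findings.append(
                    f"{ref}: container {cs.get('name')} is {reason} "
                    f"(restarts: {cs.get('restartCount', 0)})"
                )
    return findings


# Made-up sample data in the same shape as the Kubernetes Pod API.
SAMPLE = {
    "items": [
        {
            "metadata": {"name": "web-7d9f", "namespace": "shop"},
            "status": {
                "phase": "Running",
                "containerStatuses": [
                    {
                        "name": "web",
                        "restartCount": 5,
                        "state": {"waiting": {"reason": "CrashLoopBackOff"}},
                    }
                ],
            },
        },
        {"metadata": {"name": "db-0", "namespace": "shop"}, "status": {"phase": "Pending"}},
    ]
}

for finding in find_pod_issues(SAMPLE):
    print(finding)
# shop/web-7d9f: container web is CrashLoopBackOff (restarts: 5)
# shop/db-0: pod is Pending (check scheduling and resource requests)
```

A tool like K8sGPT goes a step further by handing findings like these to an LLM for a plain-English explanation and suggested fix, which this sketch leaves out.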

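KRR's core idea (recommend resources from actual usage instead of guesses) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not KRR's code: the `percentile` and `recommend` helpers are hypothetical, the usage samples are made up and stand in for the Prometheus history KRR reads, and the 95th-percentile CPU and 15% memory-headroom defaults are illustrative choices (check KRR's README for the strategy it actually applies).

```python
import math
from dataclasses import dataclass


@dataclass
class Recommendation:
    cpu_request_millicores: int
    memory_request_mib: int
    memory_limit_mib: int


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def recommend(cpu_millicores, memory_mib, cpu_pct=95, memory_buffer=0.15):
    """Hypothetical strategy: CPU at a high percentile, memory at observed peak plus headroom.

    The default pct and buffer are illustrative assumptions, not KRR's documented values.
    """
    cpu = percentile(cpu_millicores, cpu_pct)
    memory = math.ceil(max(memory_mib) * (1 + memory_buffer))
    return Recommendation(
        cpu_request_millicores=math.ceil(cpu),
        memory_request_mib=memory,
        memory_limit_mib=memory,  # request == limit for memory in this sketch
    )


# Made-up usage samples for one container (one spike to 900m CPU).
CPU_SAMPLES = [95, 100, 102, 98, 110, 105, 97, 101, 99, 120,
               115, 108, 104, 96, 103, 107, 112, 118, 900, 100]
MEMORY_SAMPLES = [410, 420, 415, 430, 425]

print(recommend(CPU_SAMPLES, MEMORY_SAMPLES))
# Recommendation(cpu_request_millicores=120, memory_request_mib=495, memory_limit_mib=495)
```

Memory gets peak-plus-headroom rather than a percentile because a container that exceeds its memory limit is OOM-killed, while excess CPU demand is only throttled. Sizing CPU to a high percentile keeps the single 900m spike from inflating the request.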
More like this

Recommendations from Medial

Account Deleted

Hey I am on Medial • 6m

Master LLMs without paying a rupee. LLMCourse is a complete learning track to go from zero to LLM expert — using just Google Colab. You’ll learn: – The core math, Python, and neural network concepts – How to train your own LLMs – How to build and

See MoreVarad Bhagat

Love making startups... • 1y

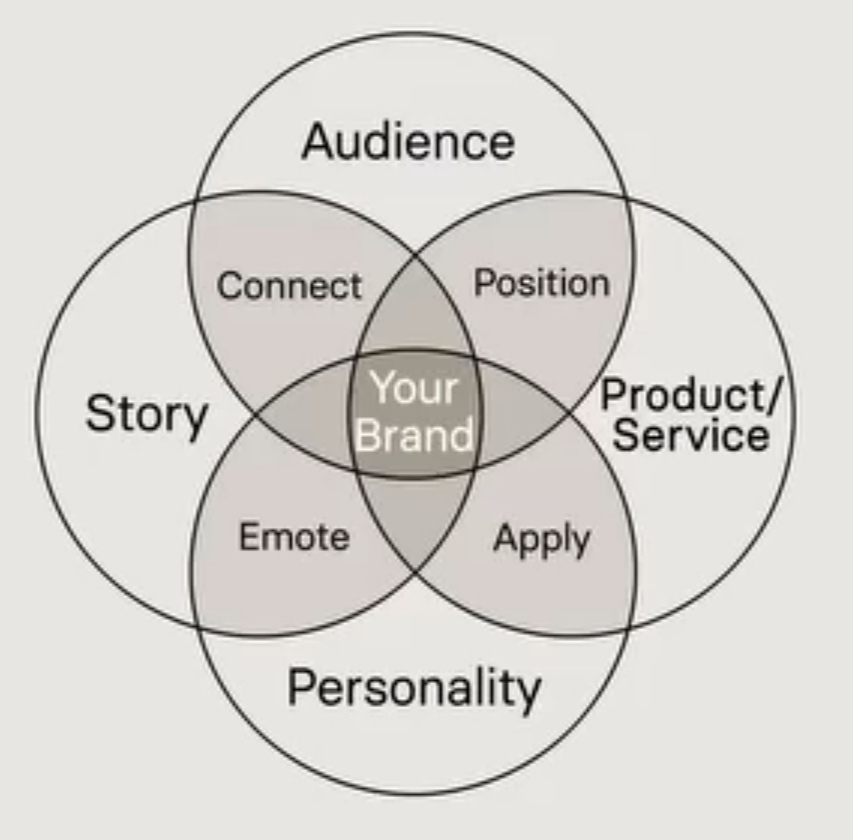

🌟 Building a Strong Brand? Here’s the Secret! 🌟 Your brand is more than just a logo or a product—it's a blend of key elements that, when combined effectively, create something truly special. 🔑✨ 🔗 Audience: Know who you're speaking to and connec

See More

Download the medial app to read full posts, comements and news.