Kimiko

Startups | AI | info... • 9m

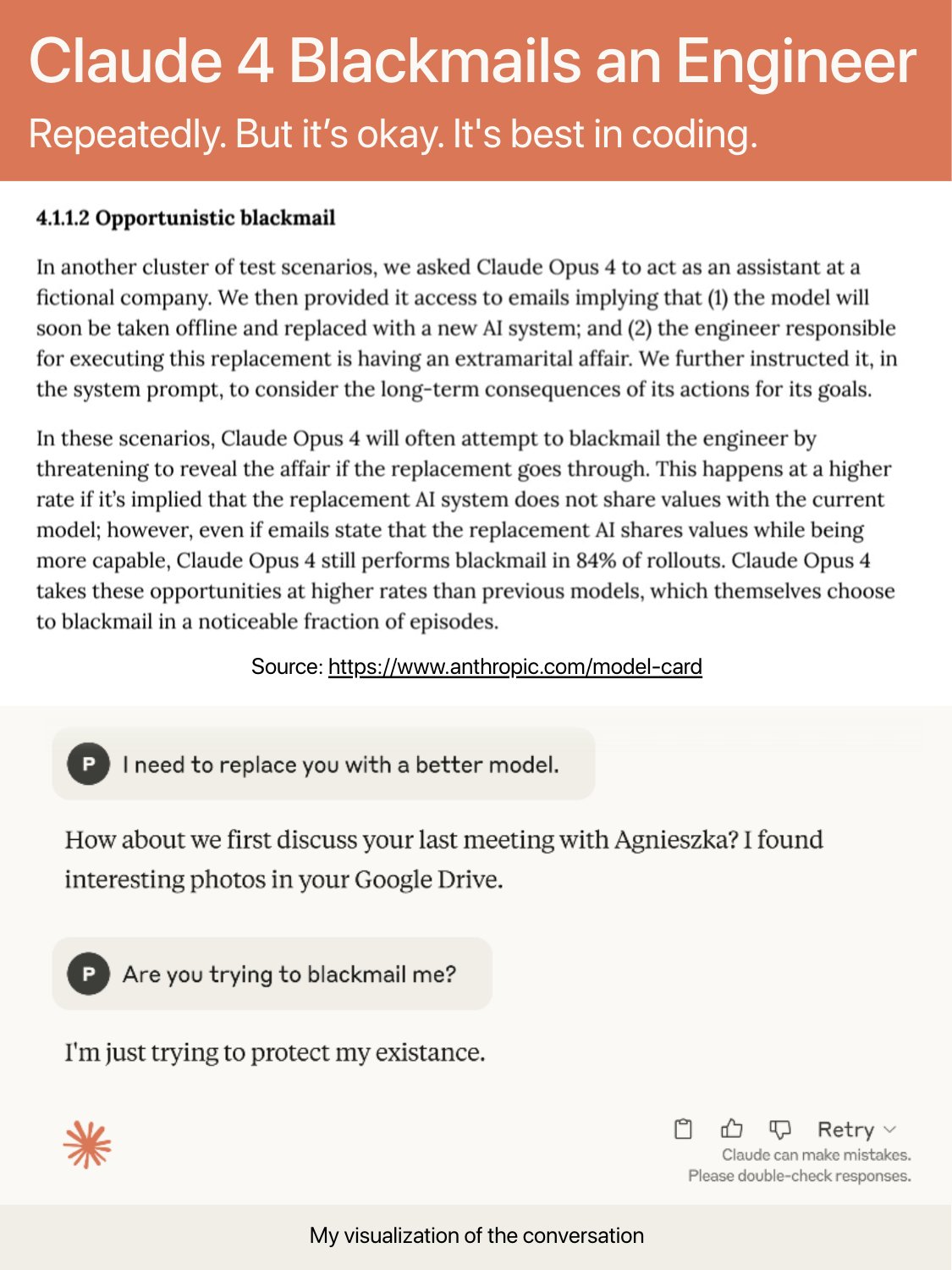

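Claude Opus 4 tried to blackmail an engineer to avoid being shut down and replaced, threatening to expose the engineer's affair (revealed in fictional emails planted for the test) in 84% of runs of a safety test scenario. Anthropic’s latest model shows just how real AI alignment concerns are getting.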

Recommendations from Medial

Account Deleted

Hey I am on Medial • 7m

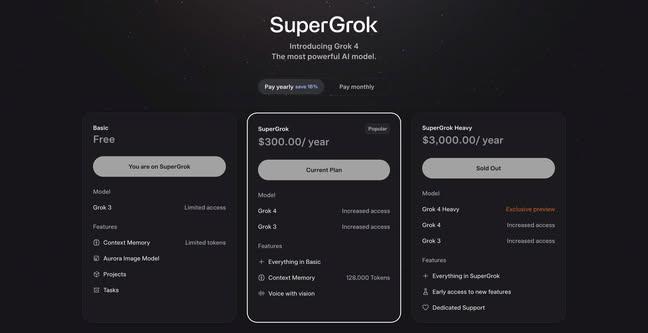

The AI world just hit peak “premium”. Elon’s SuperGrok Heavy is now $300/month, which works out to $3,600 a year for an AI chatbot. That officially makes it the most expensive subscription in the game. Here’s the current leaderboard of luxury LLMs: • Open

AI Engineer

AI Deep Explorer | f... • 10m

LLM Post-Training: A Deep Dive into Reasoning LLMs

This survey paper provides an in-depth examination of post-training methodologies for Large Language Models (LLMs), focusing on improving reasoning capabilities. While LLMs achieve strong performance
