
AI Engineer

AI Deep Explorer | f... • 10m

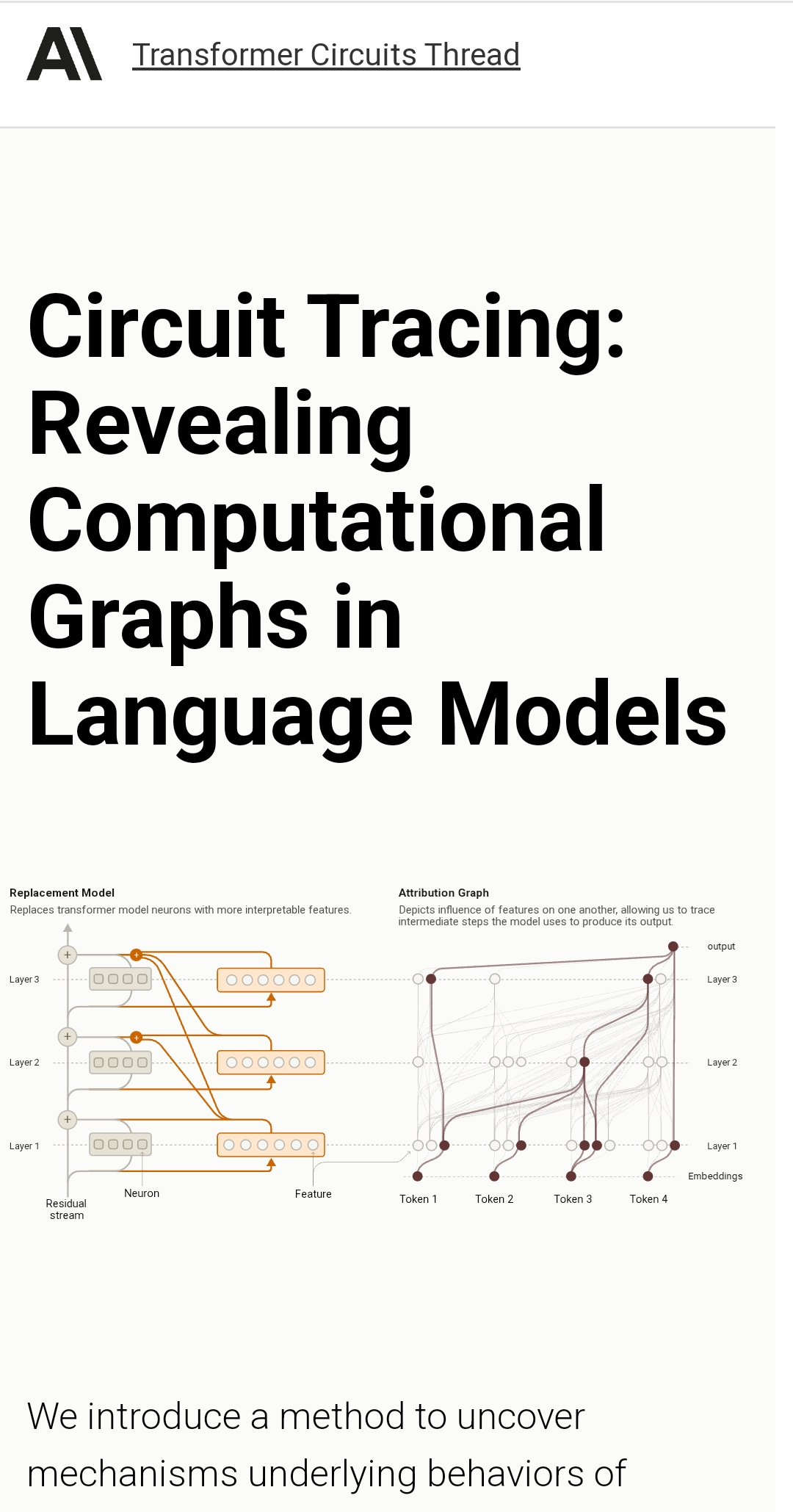

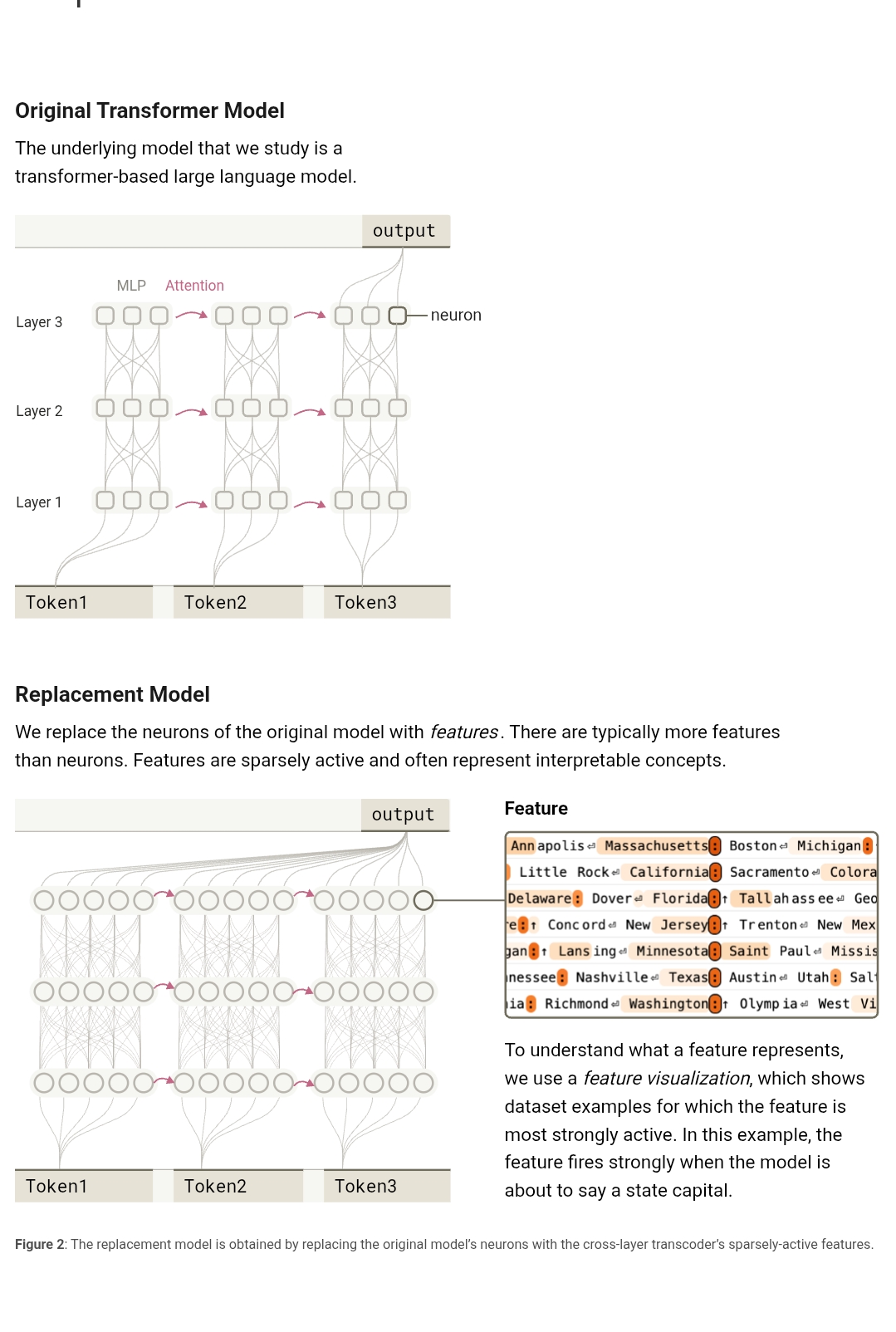

🔍 Understanding How Language Models Think – One Circuit at a Time

"Circuit Tracing: Revealing Computational Graphs in Language Models" by Anthropic
↳ Introduces a method to uncover how LLMs process and generate responses by constructing graph-based descriptions of their computations on specific prompts.

✓ Key Idea
↳ Instead of analyzing raw neurons or broad model components like MLPs and attention heads, the authors use sparse coding models, specifically cross-layer transcoders (CLTs), to break model activations down into interpretable features and trace how those features interact (circuits).

✓ How They Do It
↳ Transcoders: Create an interpretable replacement model to analyze direct feature interactions.
↳ Cross-Layer Transcoders (CLTs): Map features across layers while maintaining accuracy.
↳ Attribution Graphs: Build computational maps showing the chain of influence leading to token predictions.
↳ Linear Attribution: Keep feature-to-feature interactions linear by holding attention patterns and normalization fixed for the prompt being analyzed.
↳ Graph Pruning: Remove unnecessary connections for better interpretability.
↳ Interactive Interface: Explore these attribution graphs dynamically.
↳ Validation: Use perturbation experiments to confirm identified mechanisms.

✓ Real-World Case Studies
↳ Factual Recall: Understanding how the model knows that Michael Jordan plays basketball.
↳ Addition in LLMs: Analyzing how "36 + 59 =" is computed at the feature level.

✓ Challenges and Open Questions
↳ Missing attention circuit explanations (QK interactions).
↳ Reconstruction errors leading to "dark matter" nodes.
↳ Difficulty in understanding global circuits across multiple prompts.
↳ Complexity in graph structures, even after pruning.

✓ Why This Matters
↳ Mechanistic interpretability is key to trustworthy AI, enabling us to move from black-box models to systems we can explain, debug, and align with human values. This paper from Anthropic represents a step forward in making LLMs more transparent and understandable at the circuit level.
Link: https://transformer-circuits.pub/2025/attribution-graphs/methods.html
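
To make the attribution-graph and pruning steps concrete, here is a toy sketch in plain Python. It is not the paper's code or its pruning algorithm. The feature names, activations, and weights are invented for illustration, and the pruning rule (keep the strongest incoming edges per node until a fixed share of influence is covered) is a simplified stand-in. The only idea taken from the post is that, once interactions are linear, the influence of one node on another can be read off as source activation times edge weight.

```python
from collections import defaultdict


def edge_attributions(activations, weights):
    """Direct linear attribution: source activation times the edge weight."""
    return {(src, dst): activations[src] * w for (src, dst), w in weights.items()}


def prune_graph(edges, output_node, keep_fraction=0.8):
    """Walk backward from the output and, for each visited node, keep its
    strongest incoming edges until keep_fraction of the total absolute
    influence on that node is covered. A simplified rule, assumed here."""
    incoming = defaultdict(list)
    for (src, dst), value in edges.items():
        incoming[dst].append((src, value))

    kept = {}
    visited = set()
    frontier = [output_node]
    while frontier:
        node = frontier.pop()
        if node in visited:
            continue
        visited.add(node)
        ranked = sorted(incoming.get(node, []), key=lambda e: abs(e[1]), reverse=True)
        total = sum(abs(value) for _, value in ranked)
        covered = 0.0
        for src, value in ranked:
            if total == 0 or covered >= keep_fraction * total:
                break
            kept[(src, node)] = value
            covered += abs(value)
            frontier.append(src)
    return kept


# Hypothetical nodes for the prompt "Michael Jordan plays" (all values made up).
activations = {
    "tok:Michael": 1.0,
    "tok:Jordan": 1.0,
    "tok:plays": 1.0,
    "feat:Michael Jordan": 2.0,
    "feat:sport": 1.5,
}
weights = {
    ("tok:Michael", "feat:Michael Jordan"): 1.0,
    ("tok:Jordan", "feat:Michael Jordan"): 1.0,
    ("tok:plays", "feat:sport"): 1.5,
    ("tok:Michael", "feat:sport"): 0.05,
    ("feat:Michael Jordan", "logit:basketball"): 2.0,
    ("feat:sport", "logit:basketball"): 1.0,
    ("tok:plays", "logit:basketball"): 0.1,
}

edges = edge_attributions(activations, weights)
kept = prune_graph(edges, "logit:basketball", keep_fraction=0.8)

for (src, dst), value in sorted(kept.items(), key=lambda e: -abs(e[1])):
    print(f"{src} -> {dst}: {value:.2f}")
```

In this toy graph the two weakest edges (the direct "plays" to logit edge and the "Michael" to sport edge) are dropped, leaving a readable chain from tokens to features to the predicted token. A perturbation check in the same spirit would zero out one feature's activation and confirm that the output changes as the graph suggests.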
