Vivek kumar

On medial • 1y

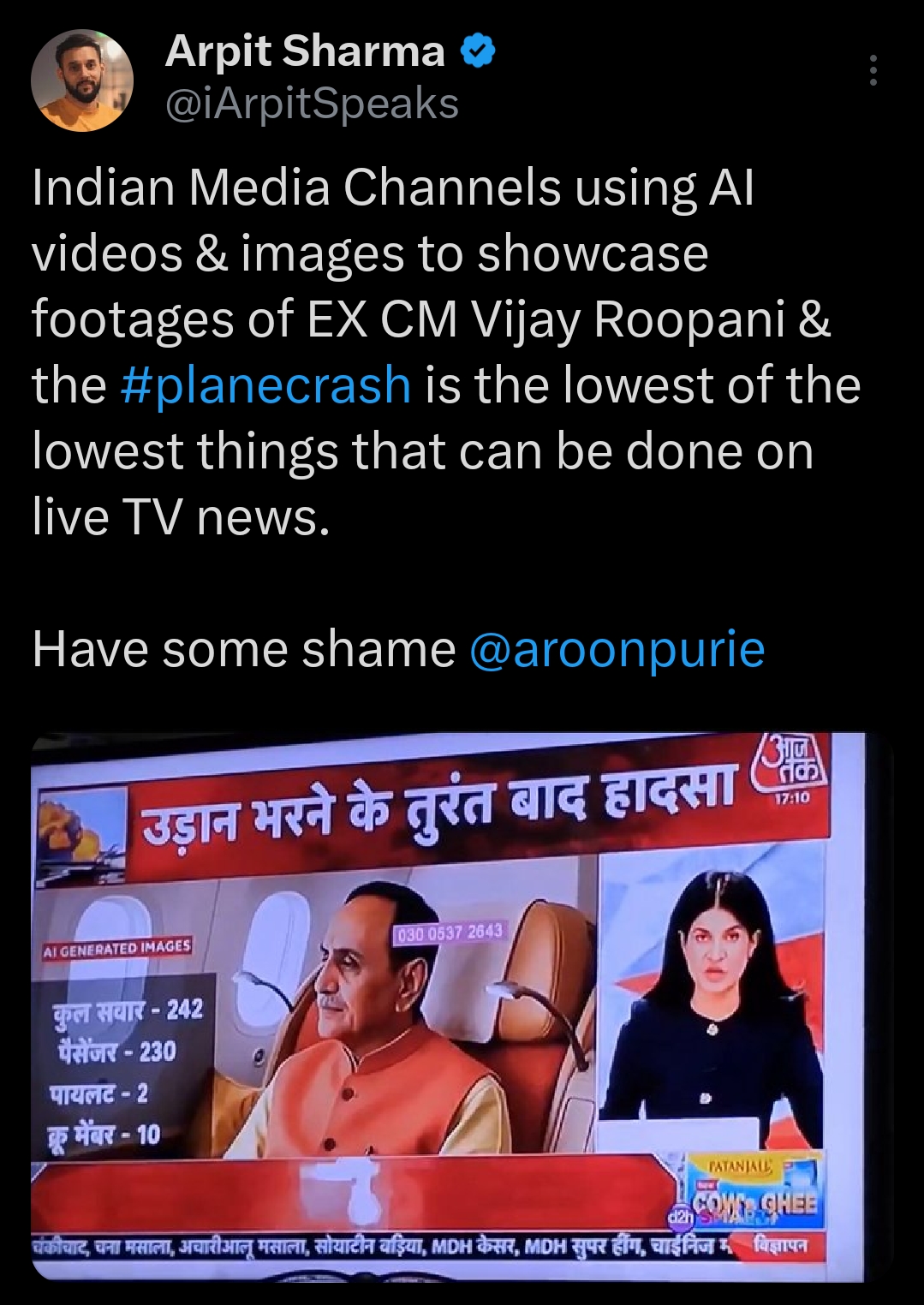

Artificial intelligence models like ChatGPT are designed with safeguards to prevent misuse, but the possibility of using AI for hacking cannot be ruled out. Countries or malicious actors could build AI systems specifically for unethical purposes, such as automating cyberattacks, identifying vulnerabilities, crafting phishing emails, or carrying out social engineering to trick people into revealing sensitive information. Such tools could analyze massive datasets to uncover and exploit confidential data with alarming efficiency. Preventing this misuse requires enforceable global regulations, ethical guidelines, and robust security measures built into AI development. AI holds immense potential for good, but its dual-use nature makes it essential to ensure it is used responsibly and ethically.
