
Anonymous 1

Hey I am on Medial • 1m

Option 1: Integrate the LLM directly into the OS

Pros:
• 🔥 Speed – No external calls; everything runs natively.
• 🧠 Tight integration – The AI can interact with system-level processes (files, memory, user interface) seamlessly.
• 🌐 Offline capable – If the model fits on-device, it works without internet.
• 🔒 Privacy – Data doesn’t leave the machine.

Cons:
• 🛠️ Hard to update – Every time you want to upgrade or swap the model, you may need to rework core OS components.
• 💾 Resource hungry – Big LLMs eat CPU/GPU/RAM. Many devices can’t run them without draining the battery or overheating.
• ⚠️ High risk – If the integrated LLM breaks, it could destabilize the whole OS.

👉 Who does this? Apple is slowly moving in this direction with on-device AI (Apple Intelligence), but only for small models. Larger models stay server-side.

⸻

⚡ Option 2: Run the LLM separately and connect it via MCP servers

The OS exposes its capabilities (files, apps, settings) through MCP (Model Context Protocol) servers, and a separately running model calls them as tools.

Pros:
• 🚀 Scalability – You can upgrade models or switch to a better one without touching the OS core.
• 🧩 Flexibility – The OS stays lighter, and the AI can evolve independently.
• ☁️ Access to bigger models – You’re not limited by device hardware; cloud/server LLMs can be massive.
• 🛡️ Safer – If the AI crashes, the OS still works fine.

Cons:
• 🌍 Connectivity – Requires internet access (unless you also run a local mini-LLM).
• 🐌 Latency – Server round-trips are slower than local processing.
• 🔑 Privacy risks – Data goes to servers. Encryption protects it in transit, but only self-hosting keeps it fully under your control.

👉 Who does this? Microsoft (Copilot), Google (Gemini in Android), OpenAI (ChatGPT apps). They keep AI mostly external for flexibility.

⸻

• If you want control, privacy, and an OS tightly bound with AI → Option 1 (direct integration) is futuristic. It only becomes practical once models shrink enough to run efficiently on-device. This could be the 5–10 year vision.
• If you want scalability, easier updates, and faster iteration → Option 2 (servers) is smarter right now. That’s why Google, Microsoft, and OpenAI mostly do it this way today.
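Here are rough numbers behind “resource hungry” and “models shrink enough.” A model’s weights alone need about (parameter count × bytes per parameter) of memory. The sketch below is a rough estimate under assumptions. It uses a hypothetical device with about 4 GB of RAM free for the model, and it ignores the KV cache, activations and runtime overhead, so real usage is higher. The model sizes are illustrative only.

```python
# Rough weight-memory estimate for running an LLM on-device.
# Assumption: memory ~= parameter count x bytes per parameter. This ignores
# the KV cache, activations, and runtime overhead, so real usage is higher.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_device(num_params: float, precision: str, free_ram_gb: float) -> bool:
    """True if the weights alone fit in the RAM we assume is free for the model."""
    return weight_memory_gb(num_params, precision) <= free_ram_gb


if __name__ == "__main__":
    budget_gb = 4.0  # hypothetical free RAM on a phone or laptop
    for name, params in [("3B", 3e9), ("8B", 8e9), ("70B", 70e9)]:
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(params, precision)
            ok = fits_on_device(params, precision, budget_gb)
            print(f"{name} @ {precision}: {gb:.1f} GB, fits={ok}")
```

Under these assumptions, a 3B model at 4-bit needs about 1.5 GB and fits. A 70B model still needs about 35 GB even at 4-bit. That gap is why the big models stay server-side for now.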
If you are building for India, the second one is better.
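For Option 2, this is roughly what “connect via MCP servers” could look like on the OS side. The OS answers JSON-RPC 2.0 `tools/call` requests coming from a separately running model. This is a simplified sketch modeled on MCP’s request shape, not the official MCP SDK. It skips the initialization handshake and the transport (stdio/HTTP), and the `list_files` tool is hypothetical.

```python
import json
import os


def list_files(path: str) -> str:
    """Hypothetical OS capability exposed as a tool: sorted directory entries."""
    return "\n".join(sorted(os.listdir(path)))


# Tool registry; the name "list_files" is illustrative, not a real MCP server.
TOOLS = {"list_files": list_files}


def _response(req_id, *, result=None, error=None) -> str:
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request; only 'tools/call' is supported here."""
    req = json.loads(raw)
    req_id = req.get("id")
    if req.get("method") != "tools/call":
        # -32601 is the JSON-RPC "Method not found" error code.
        return _response(req_id, error={"code": -32601, "message": "Method not found"})

    params = req.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        # -32602 is the JSON-RPC "Invalid params" code (unknown tool name).
        return _response(req_id, error={"code": -32602, "message": "Unknown tool"})

    try:
        text = tool(**params.get("arguments", {}))
        is_error = False
    except TypeError:
        # Missing or unexpected arguments for the tool's signature.
        return _response(req_id, error={"code": -32602, "message": "Invalid arguments"})
    except OSError as exc:
        # The tool ran but failed (e.g. missing directory). Report it inside
        # the result so the model can read the error and react.
        text, is_error = str(exc), True

    return _response(req_id, result={"content": [{"type": "text", "text": text}],
                                     "isError": is_error})


if __name__ == "__main__":
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "list_files", "arguments": {"path": "."}},
    }
    print(handle_request(json.dumps(request)))
```

In this sketch, a failing tool comes back as a result with `isError: true` instead of raising. That is the kind of boundary the “Safer” point relies on: the model only sees messages, so the OS does not share its fate.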


More like this

Recommendations from Medial

Divyam Gupta

Building products, l... • 4m

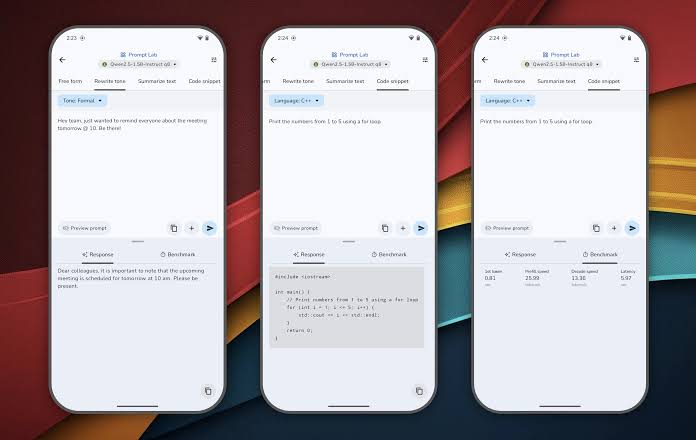

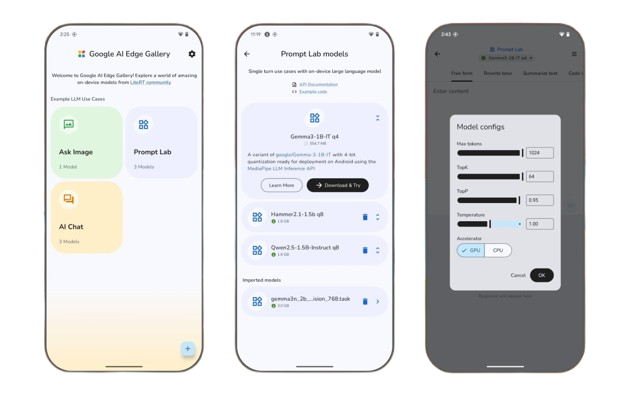

Google Unveils AI Edge Gallery: On-Device Generative AI Without Internet

Google has launched the AI Edge Gallery, an experimental app that brings cutting-edge generative AI models directly to your Android device. Once a model is downloaded, all proc


Sarthak Gupta

17 | Founder of Styl... • 5m

For past context, I am launching an AI SaaS that aims to lower LLM subscription fees by giving users 15+ LLMs with performance comparable to ChatGPT models. I am working on optimizing pricing. Which is better? Please comment the reas

Anwin Babu

Web Developer in Tra... • 1m

Google launched an app to download ML/AI models that can run locally on your Android device, which lets us use AI offline. Tasks like image analysis, summarisation, and even code generation run locally on our mobile phone. you can find
