Rahul Agarwal

Founder | Agentic AI... • 5h

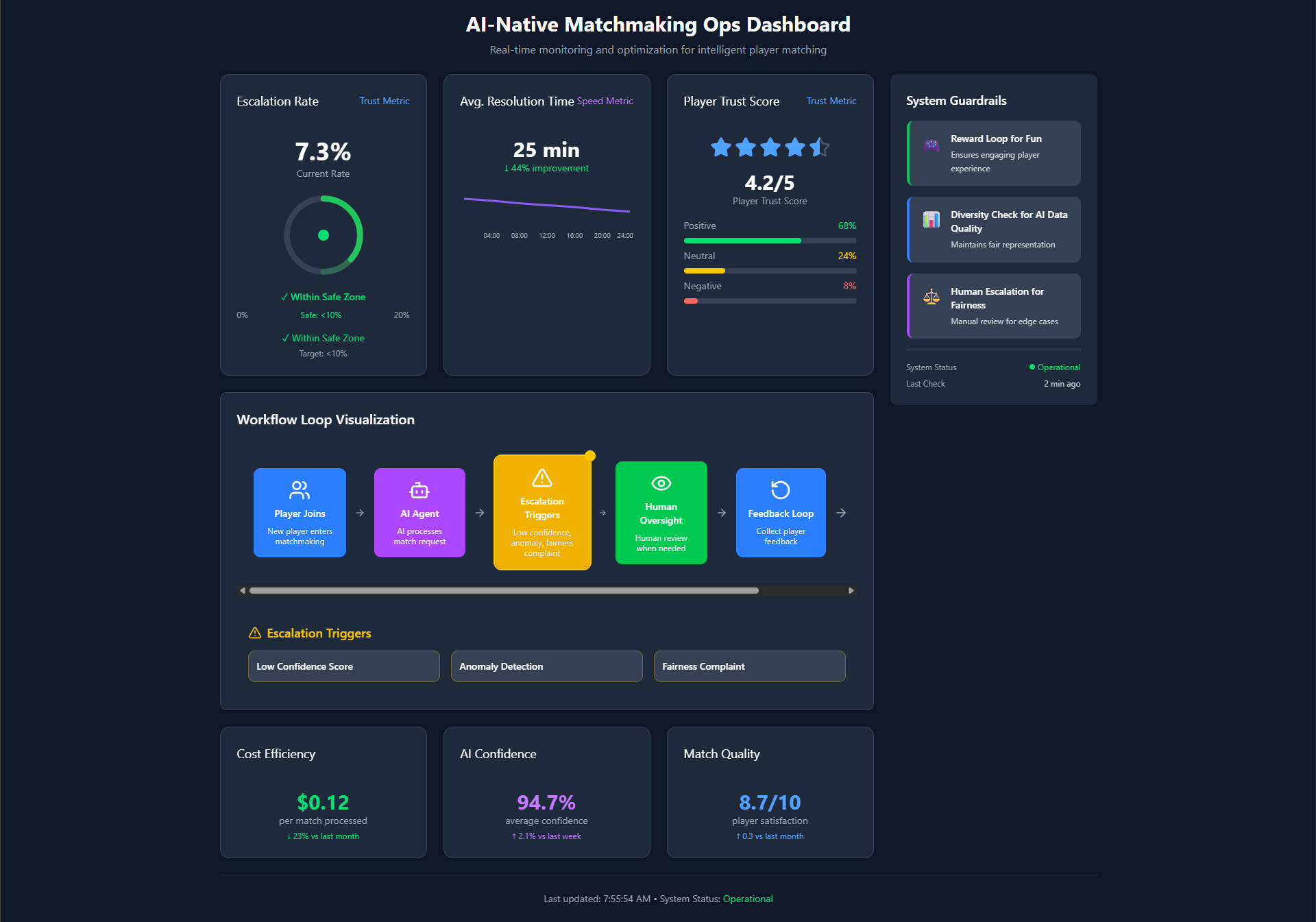

Most people skip guardrails, and their AI systems break. I’ve explained the six types in a very simple way below.

1. 𝗜𝗻𝗽𝘂𝘁 & 𝗢𝘂𝘁𝗽𝘂𝘁 𝗚𝘂𝗮𝗿𝗱𝗿𝗮𝗶𝗹𝘀
These are the 𝗲𝗻𝘁𝗿𝘆 𝗮𝗻𝗱 𝗲𝘅𝗶𝘁 𝗰𝗵𝗲𝗰𝗸 of the system.
• User input is checked for safety
• Harmful, offensive, or unsafe requests are filtered out
• After the AI generates a response, it is checked again before it is shown to the user
This keeps dangerous prompts from reaching the model and unsafe answers from reaching users.
_________________
2. 𝗖𝗼𝗻𝘁𝗲𝘅𝘁𝘂𝗮𝗹 𝗚𝘂𝗮𝗿𝗱𝗿𝗮𝗶𝗹𝘀
These make sure the AI 𝗯𝗲𝗵𝗮𝘃𝗲𝘀 𝗰𝗼𝗿𝗿𝗲𝗰𝘁𝗹𝘆 𝗳𝗼𝗿 𝗮 𝘀𝗽𝗲𝗰𝗶𝗳𝗶𝗰 𝘁𝗮𝘀𝗸.
• The system understands what it is allowed to do in a given use case
• Clear limits are set so the AI does not go outside its role
• It avoids giving misleading or out-of-scope information
The same AI behaves differently for healthcare, education, or customer support.
_________________
3. 𝗦𝗲𝗰𝘂𝗿𝗶𝘁𝘆 𝗚𝘂𝗮𝗿𝗱𝗿𝗮𝗶𝗹𝘀
These protect the system from 𝗮𝘁𝘁𝗮𝗰𝗸𝘀 𝗮𝗻𝗱 𝗺𝗶𝘀𝘂𝘀𝗲.
• They defend against prompt injection and manipulation
• They prevent data leaks and false-information attacks
• They protect both internal systems and external users
Without these, AI systems can be tricked into unsafe behavior.
_________________
4. 𝗔𝗱𝗮𝗽𝘁𝗶𝘃𝗲 𝗚𝘂𝗮𝗿𝗱𝗿𝗮𝗶𝗹𝘀
These guardrails 𝗰𝗵𝗮𝗻𝗴𝗲 𝗯𝗲𝗵𝗮𝘃𝗶𝗼𝗿 𝗮𝘀 𝗰𝗼𝗻𝗱𝗶𝘁𝗶𝗼𝗻𝘀 𝗰𝗵𝗮𝗻𝗴𝗲.
• The system adapts to new rules or policies
• It adjusts responses in real time
• Legal and ethical rules are always enforced
AI systems must stay safe even as laws, users, and risks evolve.
_________________
5. 𝗘𝘁𝗵𝗶𝗰𝗮𝗹 𝗚𝘂𝗮𝗿𝗱𝗿𝗮𝗶𝗹𝘀
These ensure the AI follows 𝗵𝘂𝗺𝗮𝗻 𝘃𝗮𝗹𝘂𝗲𝘀 𝗮𝗻𝗱 𝗳𝗮𝗶𝗿𝗻𝗲𝘀𝘀.
• Outputs remain socially acceptable
• Harmful or offensive content is avoided
• Bias in responses is reduced
AI should not spread discrimination or harmful ideas.
_________________
6. 𝗖𝗼𝗺𝗽𝗹𝗶𝗮𝗻𝗰𝗲 𝗚𝘂𝗮𝗿𝗱𝗿𝗮𝗶𝗹𝘀
These ensure the system follows 𝗹𝗮𝘄𝘀 𝗮𝗻𝗱 𝗿𝗲𝗴𝘂𝗹𝗮𝘁𝗶𝗼𝗻𝘀.
• Industry rules are respected
• Data privacy laws are followed
• User data is handled safely
Non-compliance can lead to legal and financial risk.
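To make the entry and exit check from point 1 concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `BLOCKED_TERMS` list, the `is_safe` and `guarded_reply` helpers, and the `echo_model` stand-in are hypothetical names, and a real system would likely use a trained safety classifier rather than keyword matching.

```python
from typing import Callable

# Hypothetical blocklist, for illustration only.
BLOCKED_TERMS = {"build a bomb", "steal a password"}

REFUSAL = "Sorry, I can't help with that."


def is_safe(text: str) -> bool:
    """Return False if the text contains any blocked term (case-insensitive)."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def guarded_reply(user_input: str, model: Callable[[str], str]) -> str:
    """Check the input, call the model, then check the output."""
    if not is_safe(user_input):  # entry check
        return REFUSAL
    answer = model(user_input)
    if not is_safe(answer):  # exit check
        return REFUSAL
    return answer


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call; it just echoes the prompt."""
    return f"You asked: {prompt}"
```

Calling `guarded_reply("How do I bake bread?", echo_model)` passes both checks and returns the model's answer, while a prompt or an answer containing a blocked term returns the refusal message instead.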
✅ 𝗜𝗻 𝘀𝗵𝗼𝗿𝘁:
• 𝗜𝗻𝗽𝘂𝘁 & 𝗢𝘂𝘁𝗽𝘂𝘁 → what goes in and out
• 𝗖𝗼𝗻𝘁𝗲𝘅𝘁 → what the AI is allowed to do
• 𝗦𝗲𝗰𝘂𝗿𝗶𝘁𝘆 → protection from attacks
• 𝗔𝗱𝗮𝗽𝘁𝗶𝘃𝗲 → real-time control
• 𝗘𝘁𝗵𝗶𝗰𝘀 → fair and safe behavior
• 𝗖𝗼𝗺𝗽𝗹𝗶𝗮𝗻𝗰𝗲 → legal safety

Together, these guardrails make your AI system safe, reliable, and trustworthy.

✅ Repost for others in your network who can benefit from this.
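The "Context → what the AI is allowed to do" idea can be sketched as a per-use-case allowlist. This is only an illustration under stated assumptions: the `ALLOWED_TOPICS` mapping, its topic names, and the `in_scope` helper are hypothetical, and how a topic gets detected from a user message is left out.

```python
# Hypothetical mapping from use case to the topics it may handle.
ALLOWED_TOPICS = {
    "healthcare": {"symptoms", "appointments"},
    "education": {"homework", "courses"},
    "customer_support": {"billing", "orders"},
}


def in_scope(use_case: str, topic: str) -> bool:
    """Return True only if the topic is allowed for this use case."""
    # Unknown use cases get an empty allowlist, so everything is out of scope.
    return topic in ALLOWED_TOPICS.get(use_case, set())
```

With this table, a billing question is in scope for the customer support assistant but out of scope for the healthcare one, which is the "same AI, different behavior" point in practice.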
