vineet arora

Serenity in chaos • 6d

AI + LLMs running locally on a laptop is a fantasy unless you have a powerful graphics card, an NPU, or 32 GB of RAM. Sorry, but that's the reality. You can still try to run it on 16 GB, but then it lags like hell. For your context, the tool is called Ollama. It can run many free LLMs like DeepSeek, Qwen, Mistral, etc. There are small LLMs too, but for your use case they are worthless. Best of luck, bro.
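A rough back-of-the-envelope way to see why RAM is the bottleneck: a model's weights alone take about (parameter count × bits per weight ÷ 8) bytes. The sketch below is only a rule of thumb, not an Ollama formula. It assumes a flat 20% overhead for the context cache and runtime buffers, and assumes about 4 GB stays reserved for the OS and other apps. The model sizes in the loop are illustrative.

```python
def estimate_model_memory_gb(params_billions: float, bits_per_weight: int,
                             overhead: float = 0.2) -> float:
    """Rough memory estimate in GB (1 GB = 1e9 bytes) to load an LLM.

    Weights take params * bits / 8 bytes; `overhead` is an ASSUMED
    fraction added on top for the KV cache and runtime buffers.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


def fits_in_ram(params_billions: float, bits_per_weight: int, ram_gb: float,
                reserved_for_os_gb: float = 4.0) -> bool:
    """True if the estimate fits after reserving memory for the OS (assumed 4 GB)."""
    needed = estimate_model_memory_gb(params_billions, bits_per_weight)
    return needed <= ram_gb - reserved_for_os_gb


if __name__ == "__main__":
    # Illustrative sizes: (billions of parameters, bits per weight after quantization)
    for params, bits in [(7, 4), (7, 16), (14, 4), (32, 4)]:
        need = estimate_model_memory_gb(params, bits)
        print(f"{params}B @ {bits}-bit: ~{need:.1f} GB | "
              f"16 GB laptop: {fits_in_ram(params, bits, 16)} | "
              f"32 GB laptop: {fits_in_ram(params, bits, 32)}")
```

Under these assumptions a 4-bit 7B model needs roughly 4.2 GB, while a 4-bit 32B model needs roughly 19.2 GB and only fits the 32 GB machine. Real usage varies with the quantization format, context length, and runtime, and even when a model fits, CPU-only inference on a laptop can still be slow.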

More like this

Recommendations from Medial

Pulakit Bararia

Founder Snippetz Lab... • 10m

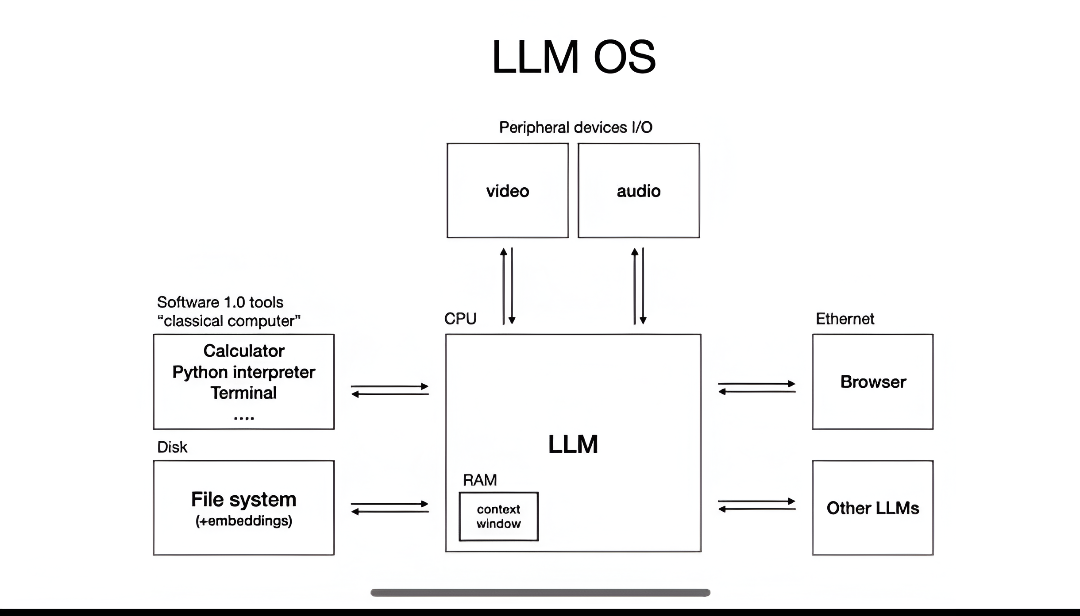

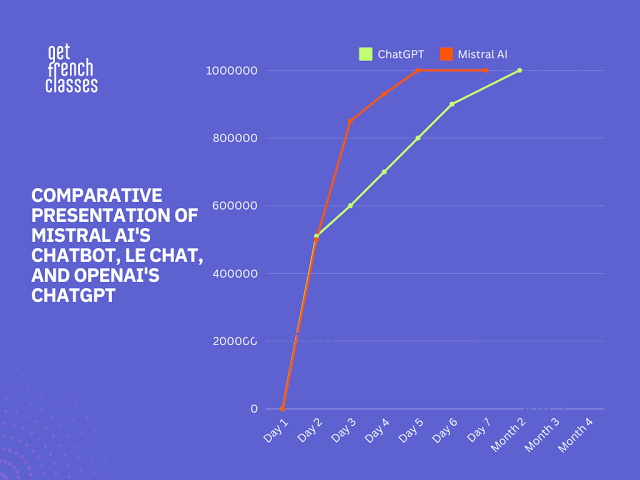

AI shouldn’t be a luxury—it should run anywhere, even on low-powered devices, without costly hardware or cloud dependency. Companies and projects like TinyML, Edge Impulse, LM Studio, Mistral AI, Llama (Meta), and Ollama are already making AI lighter, faster, a…

Arnav Goyal

https://arnavgoyal.m... • 9m

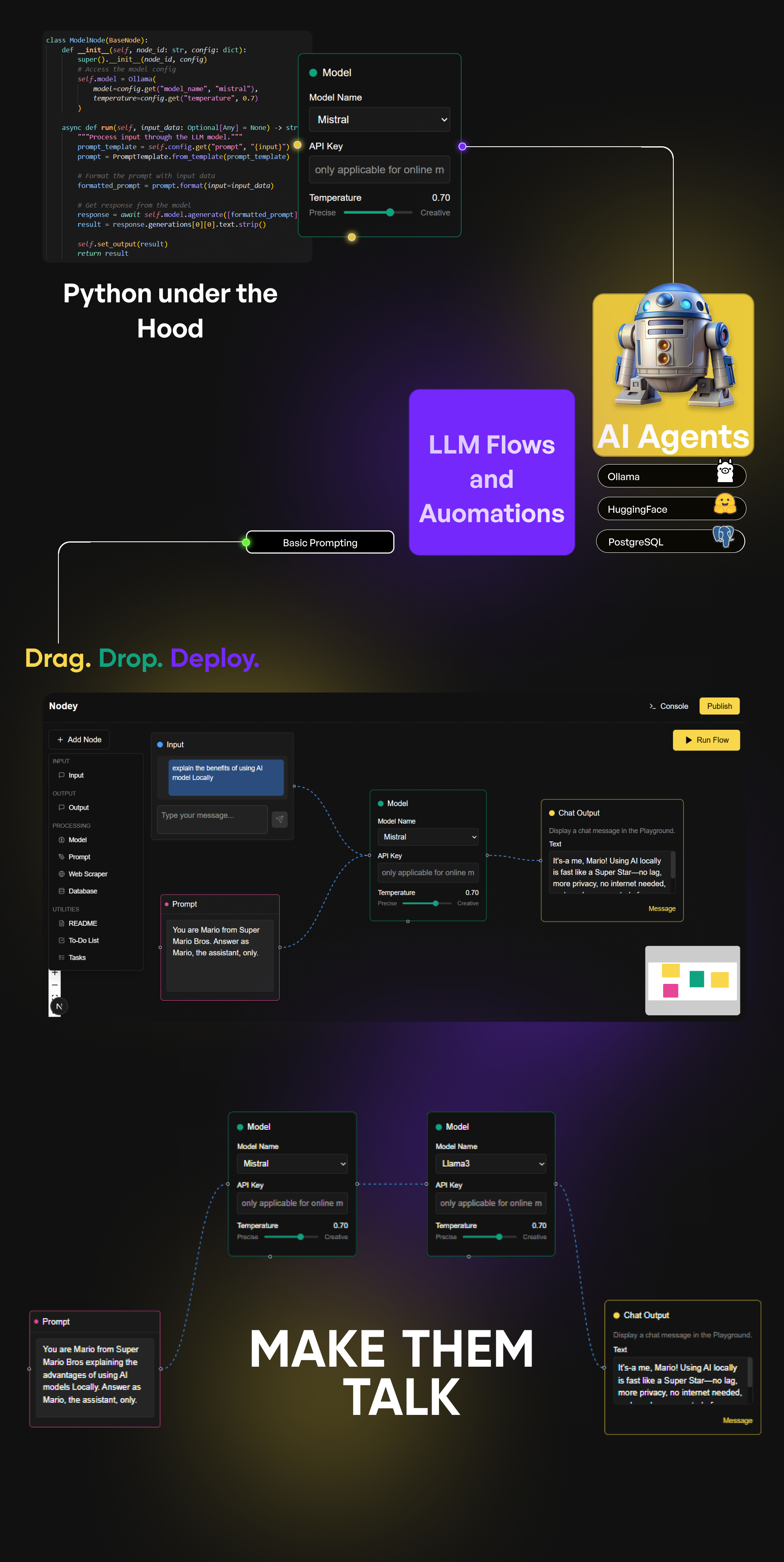

Introducing Nodey – A Low-Code Platform for Building LLM Flows and AI Agents Locally I'm building a project called Nodey, a low-code, drag-and-drop platform that helps you create LLM workflows and AI agents without needing to write a lot of code. C…

Prasanna Raj Neupane

Hey I am on Medial • 6m

🚨 Meet Subduct — The Secure Bridge Between AI & Enterprise Data Modern LLMs are powerful — but they can’t access the core of your business. Subduct changes that. We’re building the secure infrastructure layer that connects GPT-4, Claude, and Mist…

Pulakit Bararia

Founder Snippetz Lab... • 5m

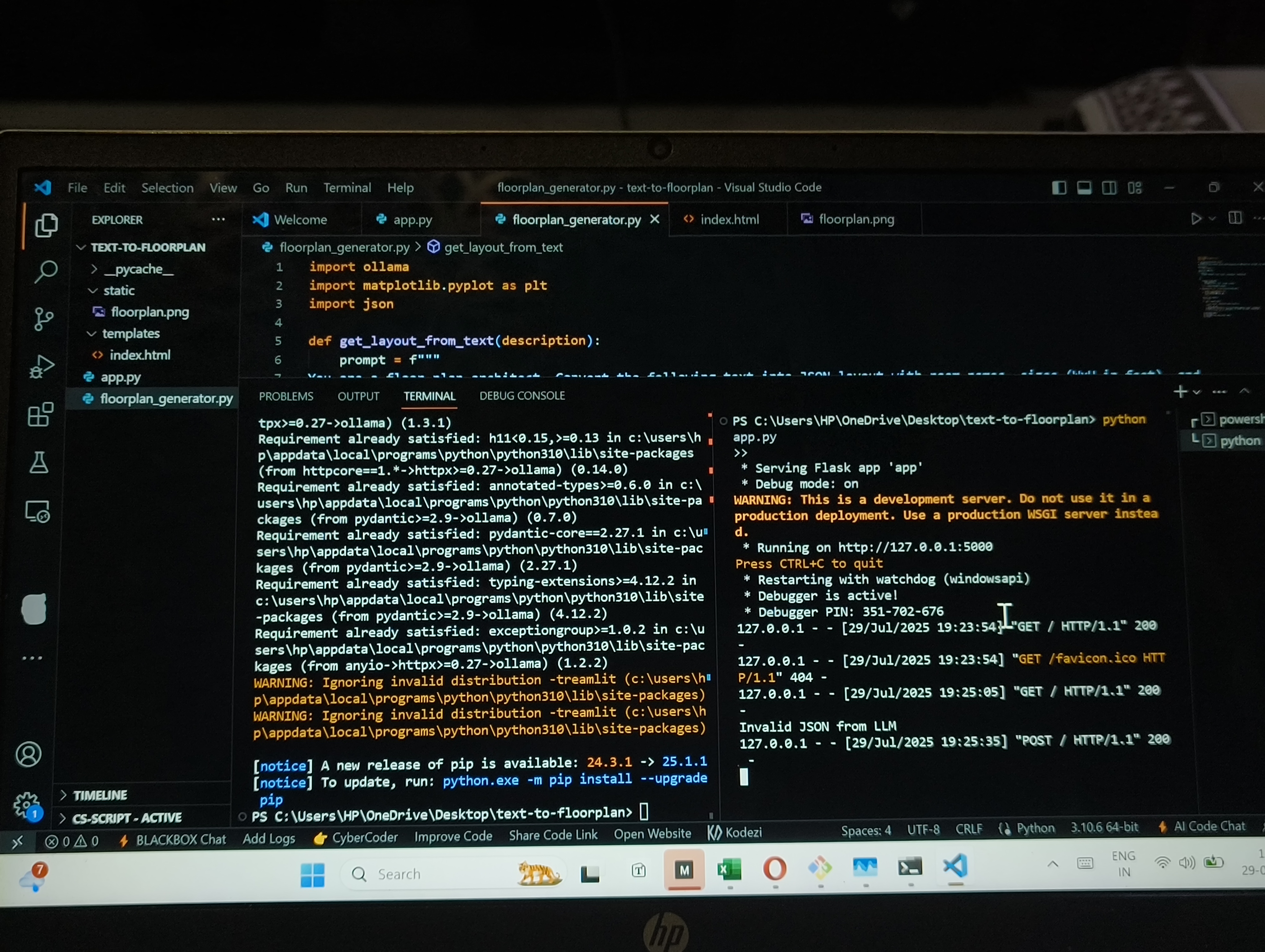

I’m building an AI-first architectural engine that converts natural language prompts into parametric, code-compliant floor plans. It's powered by a fine-tuned Mistral LLM orchestrating a multi-model architecture — spatial parsing, geometric reasonin…
