
Sandip Bhise

Connect. Share. Grow • 1m

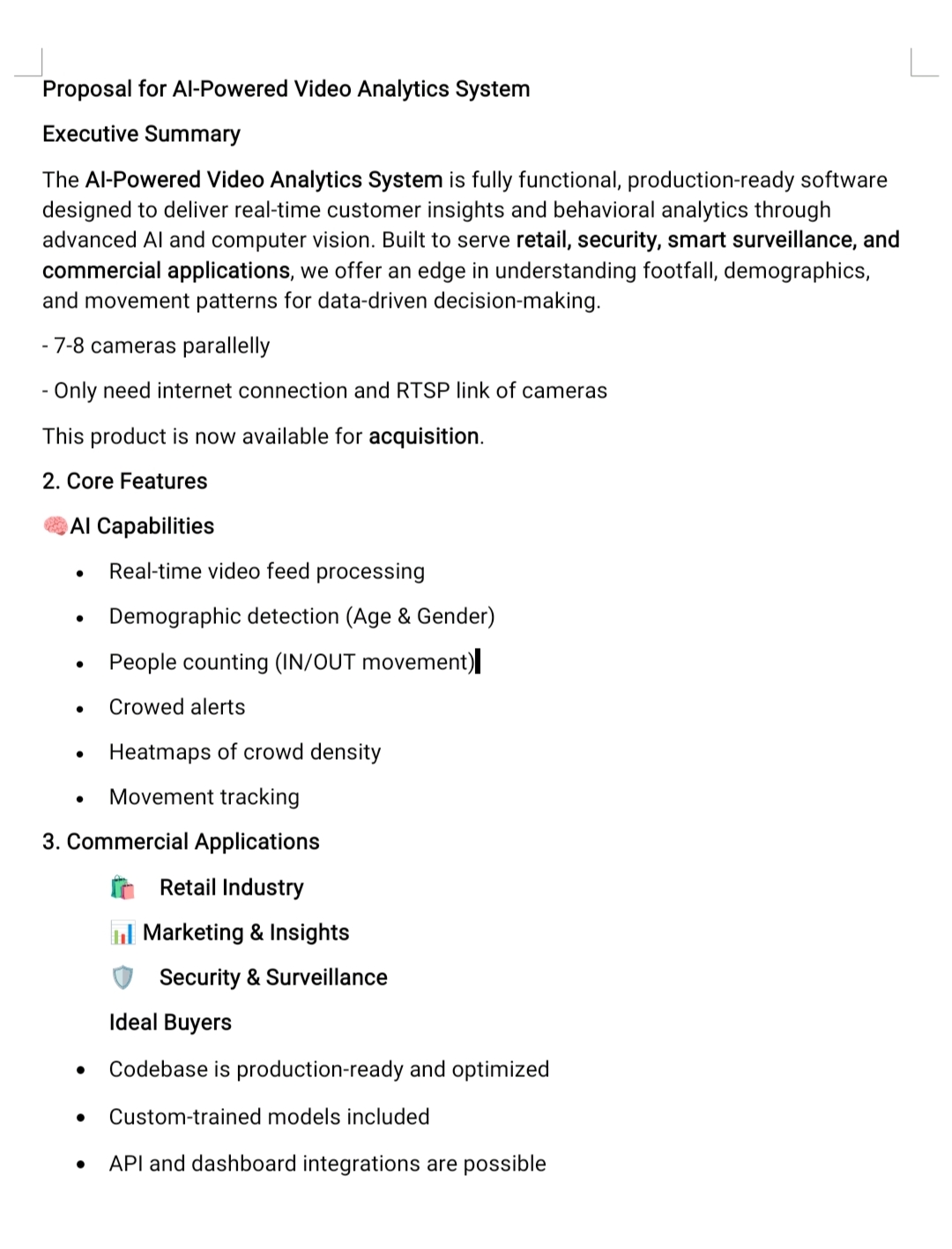

I completely understand where you're coming from. However, this isn't about quick money or repackaging open-source models. Our solution uses custom-trained models with domain-specific tuning, and another vendor has tested it over multiple days in real-world scenarios to verify its reliability and performance. We're transparent about the architecture and are happy to demonstrate the code, run it on your own data, and discuss how it can be adapted to specific use cases. Happy to clarify further if you're genuinely interested.



Recommendations from Medial

Chetan Bhosale

Software Engineer | ... • 8m

💡 5 Things You Need to Master for Integrating AI into Your Project 1️⃣ Retrieval-Augmented Generation (RAG): Combine search with AI for precise, context-aware outputs. 2️⃣ Vector Databases: Learn how to store and query embeddings for e…

Jainil Prajapati

Turning dreams into ... • 5m

India should focus on fine-tuning existing AI models and building applications rather than investing heavily in foundational models or AI chips, says Groq CEO Jonathan Ross. Is this the right strategy for India to lead in AI innovation? Thoughts?

AI Engineer

AI Deep Explorer | f... • 4m

"A Survey on Post-Training of Large Language Models" This paper systematically categorizes post-training into five major paradigms: 1. Fine-Tuning 2. Alignment 3. Reasoning Enhancement 4. Efficiency Optimization 5. Integration & Adaptation 1️⃣ Fin


Mohammed Zaid

Shitposter of Medial • 1m

OpenAI researchers have discovered hidden features within AI models that correspond to misaligned "personas," revealing that fine-tuning models on incorrect information in one area can trigger broader unethical behaviors through what they call "emerg


Priyam Maurya

Wanna create somethi... • 2h

Hey, I'm an app developer, and I've worked on projects such as building apps, training custom AI models, and integrating AI into apps. If anyone is creating something INNOVATIVE in these areas and needs help, please reach out to me. I'm open to helping you out and be
