Gigaversity

Gigaversity.in • 3m

How We Reduced Docker Image Size by ~70% Using AI-Powered Tree Shaking

The Problem: Our Next.js + FastAPI Docker images had ballooned to 1.2 GB, severely slowing down our CI/CD pipelines. Traditional fixes, such as multi-stage builds and Alpine base images, only scratched the surface.

The Breakthrough Solution:
1️⃣ Trained a Custom CNN Model: Analyzed dependency trees to predict which layers and files were redundant.
2️⃣ Integrated SlimToolkit: Automated AI-guided layer pruning without breaking runtime dependencies.
3️⃣ Static Analysis + Runtime Validation: Ensured pruned images retained critical binaries (e.g., OpenSSL).

Result: Images shrank from 1.2 GB to 400 MB (a reduction of about 67%) with zero runtime errors.

Why This Is a Game-Changer:
Beyond Manual Optimization: Unlike the typical "use Alpine" advice, the AI identified hidden bloat (e.g., unused locale files, dev dependencies).
Precision Over Guesswork: Manual reviews miss subtle dependencies; our CNN model flagged low-usage packages with 98% accuracy.
Scalable for Microservices: Applied across 50+ services, saving 400+ GB of registry storage and cutting deployment times.

Key Takeaway: AI-driven static analysis isn't just hype; it's the future of DevOps. By automating optimization, we achieved results 2-3x better than manual methods, with safer, reproducible outcomes.

💡 Think your Docker images are lean? What's the smallest you've achieved, and how? Let's discuss below! 👇

#Gigaversity #Codesimulations #FullStackDevelopment #DevOps #Docker #FastAPI #Nextjs #BackendDevelopment #AIinTech #AIDevOps #MachineLearning #CloudComputing #SoftwareEngineering #AITools #Microservices #CICD #DeveloperLife #CodingCommunity #PythonDevelopers #JavaScript #BuildInPublic
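
The post does not publish its CNN model or pruning pipeline. As a much simpler, non-AI stand-in for the static-analysis part of the approach, the sketch below treats an image's packages as a dependency graph. It keeps everything reachable from the runtime entry points plus a must-keep list (for critical libraries such as OpenSSL) and flags the rest as pruning candidates. The graph, the package names, and the `reachable`/`prune_candidates` helpers are hypothetical illustrations. A real pipeline would still need runtime validation before deleting anything.

```python
from collections import deque


def reachable(graph: dict[str, list[str]], roots: list[str]) -> set[str]:
    """Return every package reachable from `roots` via breadth-first search."""
    seen: set[str] = set()
    queue = deque(roots)
    while queue:
        pkg = queue.popleft()
        if pkg in seen:
            continue
        seen.add(pkg)
        # Packages missing from the graph are treated as having no dependencies.
        queue.extend(graph.get(pkg, []))
    return seen


def prune_candidates(
    graph: dict[str, list[str]],
    entry_points: list[str],
    must_keep: set[str],
) -> set[str]:
    """Packages neither reachable from an entry point nor pinned as critical."""
    all_pkgs = set(graph) | {dep for deps in graph.values() for dep in deps}
    keep = reachable(graph, entry_points) | reachable(graph, sorted(must_keep))
    return all_pkgs - keep


# Hypothetical dependency graph for a FastAPI service image.
graph = {
    "app": ["fastapi", "uvicorn"],
    "fastapi": ["starlette", "pydantic"],
    "uvicorn": ["h11"],
    "pytest": ["pluggy"],  # dev dependency, never referenced at runtime
    "openssl": [],         # no incoming edges: static analysis alone would drop it
}

print(sorted(prune_candidates(graph, ["app"], must_keep={"openssl"})))
```

Here only the dev dependencies (`pluggy`, `pytest`) are flagged. Without the must-keep entry, `openssl` would be flagged too, which illustrates why static analysis has to be paired with runtime validation for libraries that are loaded dynamically.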

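The post's 70% headline is a rounded figure. Going from 1.2 GB to 400 MB removes 800 MB, and 800 / 1200 ≈ 66.7%. A minimal helper for checking size-reduction claims like this one (the function name is illustrative):

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which a size shrank from `before` to `after` (same units)."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Figures from the post, in MB (1.2 GB taken as 1200 MB).
print(f"{percent_reduction(1200, 400):.1f}%")  # prints "66.7%"
```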
More like this

Recommendations from Medial

Pratik Raundale

Cloud Devops Enginee... • 1m

This Week's Learning Journey - Cloud, DevOps, Node.js & Docker. This week has been packed with hands-on learning and growth. Cloud & DevOps Gained a solid understanding of AWS services like EC2, S3, and CloudFront. Deployed my first application on

See More

Account Deleted

Hey I am on Medial • 9m

🚀 Optimizing Docker builds goes beyond just speed; it’s a game-changer for reducing deployment costs, enhancing security, and ensuring consistency! 🛠️ Every layer and dependency counts—large images can slow down deployments and inflate costs. Don't

See More

Abhishek Talole

DevOps Engineer @Dre... • 7m

I am now accepting freelance projects in DevOps and Generative AI domains. With hands-on experience in cutting-edge technologies, here's what I bring to the table: DevOps Expertise Proficient in Docker and Kubernetes for containerization and orches

See MoreHimanshu Singh

Help you to build yo... • 7m

As a backend engineer. You should learn: - System Design (scalability, microservices) -APIs (REST, GraphQL, gRPC) -Database Systems (SQL, NoSQL) -Distributed Systems (consistency, replication) -Caching (Redis, Memcached) -Security (OAuth2, JWT

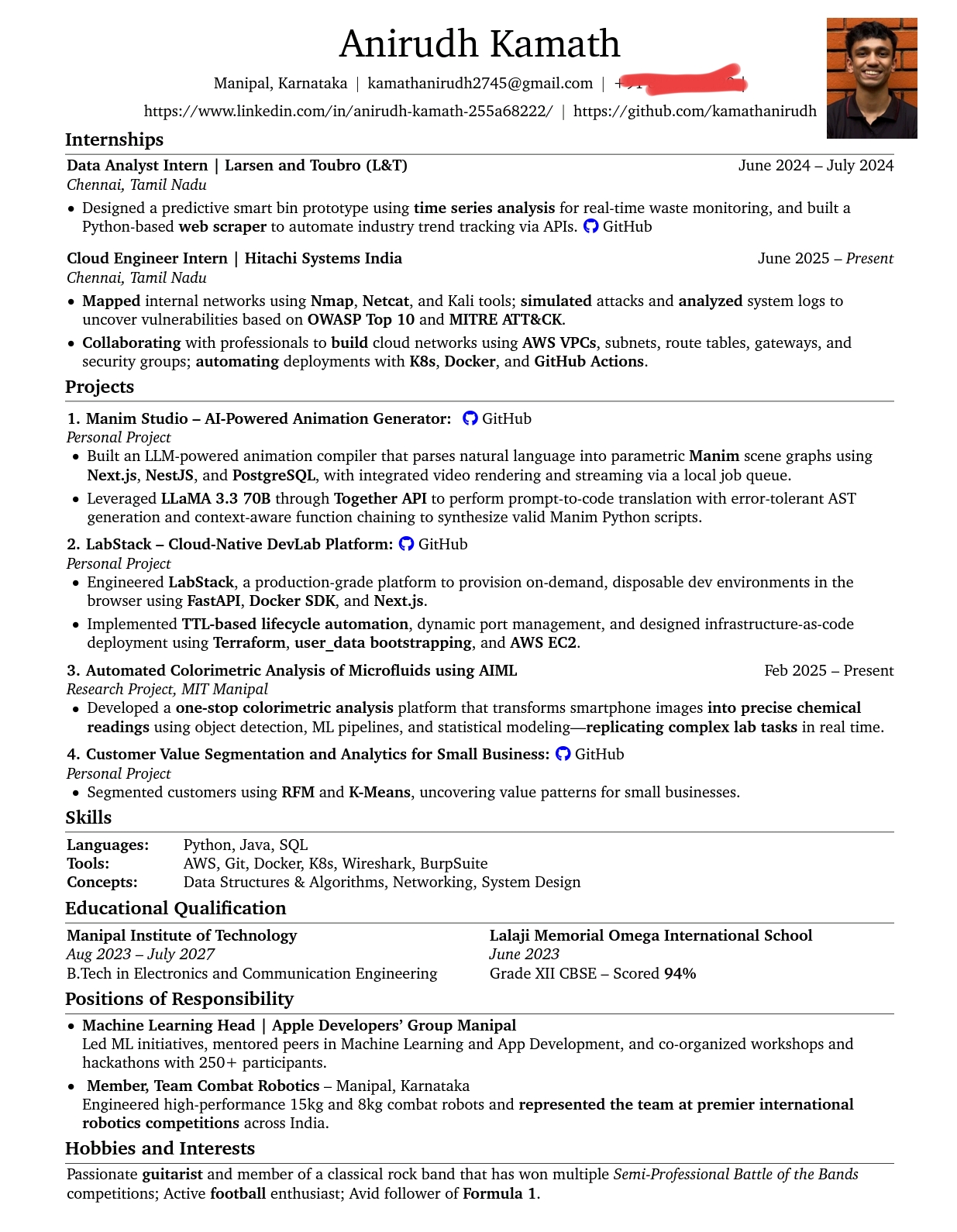

See MoreAnirudh Kamath

Cloud | DevOps | AI • 1m

-Actively looking for Internship opportunities in the Cloud and DevOps domain -Proficient with AWS, Docker, Kubernetes and also development. -Involved in AI Image recognition research, lets connect! Attached my CV below, hidden my phone number for

See More

Chetan Bhosale

Software Engineer | ... • 8m

🚀 6 Skills to Master in 2025 to Become a 10x Engineer 1️⃣ Full Stack Development: Master both frontend & backend for end-to-end project ownership. 2️⃣ AI Integration: Learn to work with AI tools & seamlessly integrate them into your projects. 3️⃣ A

See MoreDownload the medial app to read full posts, comements and news.